Import data from any REST API in to HDFS using SQOOP

Introduction

One of the most popular interfaces for exposing and consuming data is using a REST API, and one of the best ways to store data at a low cost and analyze the data later is HDFS.

Data Architects encounter scenarios where the business wants to store data from REST API’s as they get updated information, so that the BI/Analytics team can run analytics on top of this data. To do this currently we need a custom plugin/solution to bring that data from the REST API to HDFS. Wouldn’t it be great if you could use an existing tool like Sqoop to bring in the data from REST API with out having to write a single line of code?

That is exactly what we are going to do with the help of Sqoop and Progress DataDirect Autonomous REST Connector. Autonomous REST Connector allows you to query API data using standard SQL by normalizing the semi-structured JSON response data into structured data that can be used with SQL.

In this tutorial we will use Autonomous REST Connector with Sqoop, where Autonomous REST Connector will connect to Alpha Vantage REST API (this is an example, you can use any REST API) to get stock exchange data. Sqoop will ingest this data into HDFS for your future analytical needs. Let’s take a look at how you can do this.

Prerequisites

Before you start the tutorial, we assume you have the following environment and tools setup:

- HDFS and a Hive instance

- Sqoop tool

- An Alpha Vantage API key. Get yours here.

Install Autonomous REST Connector

- Download Progress DataDirect Autonomous REST Connector for JDBC from our website.

- Install the connector by running the setup executable file on your machine.

- After you have finished installation, you should find the Autonomous REST Connector at the below default path, unless you have chosen to install it at a different location.

/home/<user>/Progress/DataDirect/JDBC_60/lib/autorest.jar - Copy the Autonomous REST Connector from the above path to Sqoop’s lib folder by running the below command.

cp /home/<user>/Progress/DataDirect/JDBC_60/lib/autorest.jar $SQOOP_HOME$/lib/autorest.jar

Configuring Autonomous REST Connector

- To connect to the Alpha Vantage API using Autonomous REST Connector, you can point it to the endpoint with some default values.

- For the purpose of the tutorial, we will connect to Stock Time series endpoints offered by Alpha Vantage. Let’s say we want to get all the time series intraday values for a stock with an interval of 1 minute. Here is the request:

- To configure the driver to connect to the above endpoint, all you must do is use the below JDBC URL

jdbc:datadirect:autorest:sample=https://www.alphavantage.co/query?function=TIME_SERIES_INTRADAY&symbol=PRGS&interval=1min&apikey=<apikey>

- When you connect to any REST API using Autonomous REST Connector, it will automatically sample the API and create a configuration which you can access by querying the _CONFIGURATION table. You can get this configuration by using the Autonomous REST JDBC driver in any SQL querying tools like Dbeaver, Squirrel SQL, etc.

- For the tutorial, we are providing the configuration below. Save this in a file called alphavantage.rest file.

{"query":{"#path":[],"Meta Data":{"1. Information":"VarChar(84)","2. Symbol":"VarChar(64),#key","3. Last Refreshed":"Timestamp(0)","4. Interval":"VarChar(64)","5. Output Size":"VarChar(64)","6. Time Zone":"VarChar(64)"},"Time Series (1min){Timestamp(0)}":{"1. open":"Double","2. high":"Double","3. low":"Double","4. close":"Double","5. volume":"Integer"},"function":{"#type":"VarChar(64),#key","#virtual":true,"#default":"TIME_SERIES_INTRADAY","#eq":"function"},"symbol":{"#type":"VarChar(64),#key","#virtual":true,"#default":"MSFT","#eq":"symbol"},"interval":{"#type":"VarChar(64),#key","#virtual":true,"#default":"1min","#eq":"interval"},"apikey":{"#type":"VarChar(64),#key","#virtual":true,"#default":"<your api key>","#eq":"apikey"}}}Note: Please use your api key in the #default property under apikey json object.

- In the configuration, you may notice that Autonomous REST Connector has detected all the objects and their data types.

- To learn more about Autonomous REST Connector, how you can configure it to your needs, and how to connect to multiple endpoints, we recommend you go through these tutorials after you have finished this one.

Import Data from any REST API to HDFS

- To import data from Alpha Vantage API we are using Autonomous REST Connector which will provide SQL interface for the API.This driver will be used with Sqoop to import the data into HDFS.

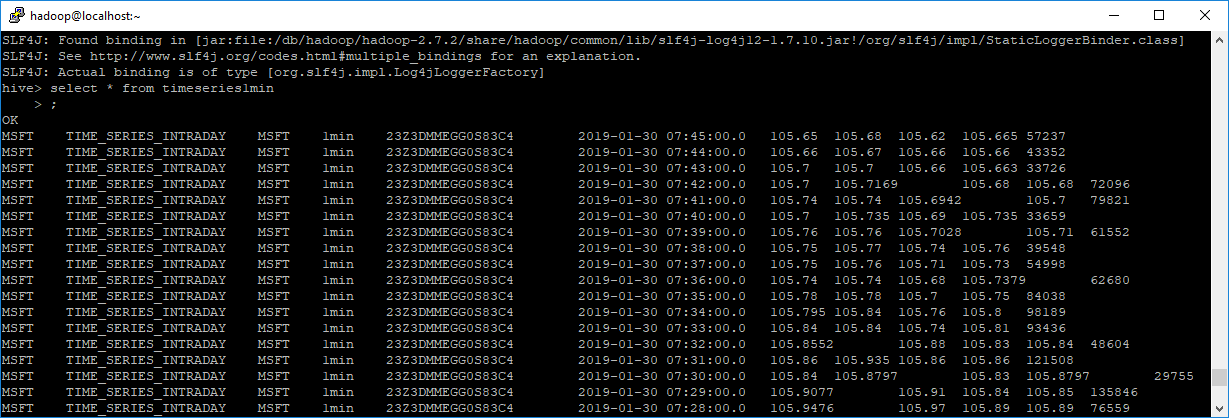

- To start importing the data from the table TIMESERIES1MIN, all you have to do is run the below Sqoop command. If you want to use the default values that you used when you sampled the endpoint.

sqoop import --connect'jdbc:datadirect:autorest:config=/db/hadoop/scripts/AlphaVantage.rest;TransactionMode=ignore'--table TIMESERIES1MIN --hive-import --create-hive-table --hive-table TIMESERIES1MIN --driver com.ddtek.jdbc.autorest.AutoRESTDriver - If you want to use the custom SQL Query in Sqoop to use custom stock symbols, you can run the following command.

sqoop import --connect'jdbc:datadirect:autorest:config=/db/hadoop/scripts/AlphaVantage.rest;TransactionMode=ignore'--query"select * from TIMESERIES1MIN where QUERY_SYMBOL='AAPL' AND \$CONDITIONS"--hive-import --create-hive-table --hive-table TIMESERIES1MIN --driver com.ddtek.jdbc.autorest.AutoRESTDriver --target-dir /usr/hadoop/timeseriesquery --split-by key - After the Sqoop command has completed execution, you can find the data from the REST API ingested into Hadoop.

It’s so easy to import any REST API to HDFS using Progress DataDirect Autonomous REST Connector and you won’t have to write a single line of code to do so. Feel free to try out Autonomous REST Connector with any API. If you have any questions, feel free to talk to us.