Using IBM DB2 JDBC Driver to Integrate DB2 with Amazon S3

Step-by-step tutorial to import on-premises IBM Db2 data into Amazon S3 using the Progress DataDirect JDBC Driver.

Update: Looking for the best in connectivity for your data in the cloud? While DataDirect Cloud is no longer available, Hybrid Data Pipeline has all the same features and more! Learn more about Hybrid Data Pipeline.

AWS Data pipeline is a web service that lets you process, transform and move the data securely between AWS Storage and compute services in regular intervals. But, at some point, you would want to import data from your on-premise data sources to your AWS Storage or compute services. AWS data pipeline has a neat little feature which enables you to connect to any database with Progress DataDirect JDBC drivers and import the data into S3 which you can use later to move it across either compute or storage services in AWS.

But how do you access your On-premise database that’s behind a firewall through JDBC drivers?

Progress DataDirect Cloud is our connectivity service that enables you to create secure connections to all of your cloud (SaaS) and on-premise databases (Relational/ Big Data) even if they are behind the firewall. The following tutorial shows how you can leverage DataDirect Cloud JDBC driver to import the data from IBM Db2 which is behind firewall, in to S3 storage engine with minimal effort and by creating a pipeline which you can essentially re-use multiple times.

Setting up:

Set up a DataDirect Cloud Account by signing up here.

Create a new data source for IBM Db2 by following the instructions here. If your server is behind a firewall, you should also install DataDirect Cloud On-premise connector which can be found in the Downloads section once you are logged in to DataDirect Cloud account.

This On-premise connector when installed gives you a connector id which would be reflected in your DataDirect Cloud account when you are configuring the data source. It is labeled as connector id which is a dropdown from which you can select the connector id that is shown on the machine you have installed the On-premise connector.

Test the connection and save it.

From downloads section in DataDirect cloud, download the DataDirect Cloud JDBC driver and install it on your machine. If you need help installing it, follow the instructions here.

Sign up for Amazon Web Services by visiting this link.

Create an Amazon S3 Bucket from the AWS console by opening the Amazon S3 console at this link.

Next, upload the DataDirect Cloud JDBC driver (ddcloud.jar) which can be found at \install_directory\Progress\DataDirect\Cloud_for_JDBC_20\lib to your newly created S3 bucket.

Creating the Data Pipeline:

From your AWS Console, go to Data Pipeline console and click on the button “Create New Pipeline.”

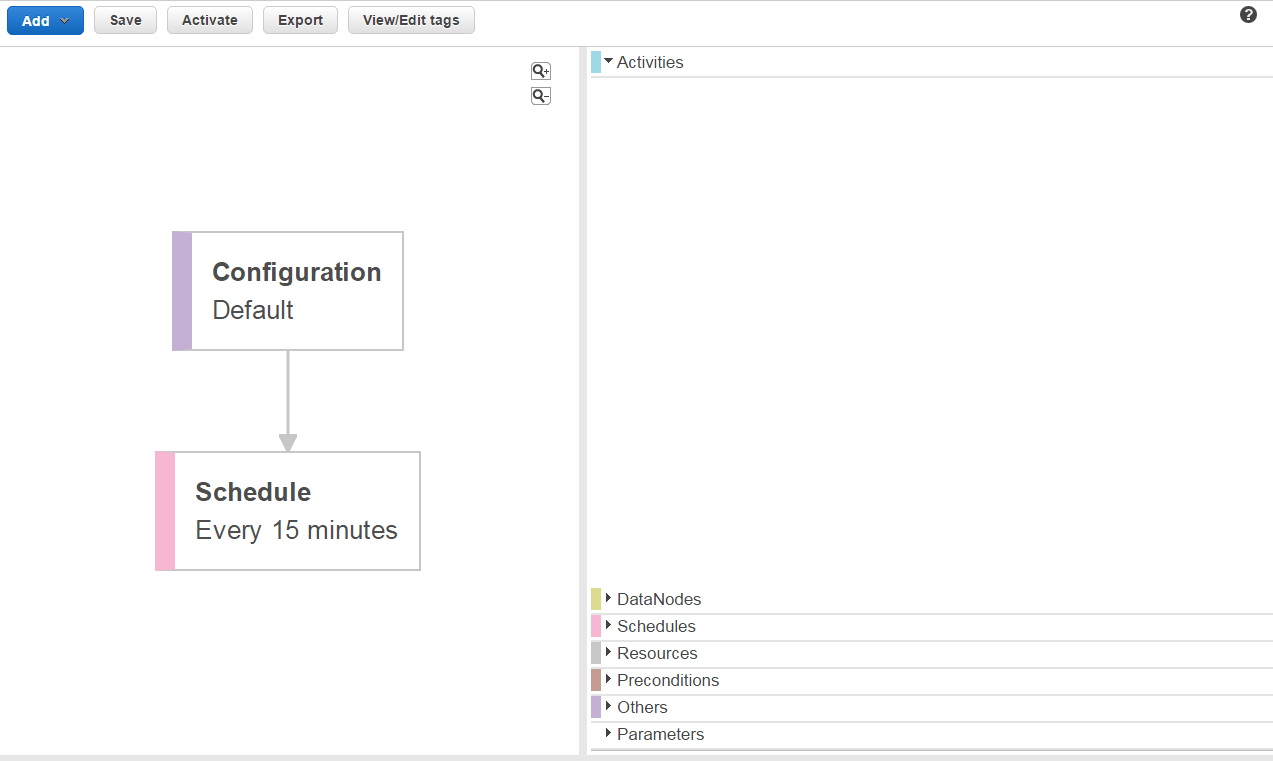

Fill in the Name of your choice, select Source as "Build using Architect," choose your schedule that you would want to run this pipeline, choose the location for saving the logs on your S3 bucket and leave other options to default. At the end of the form, click on the button "Edit in Architec" to create the data flow. By default, you should see the following Pipeline.

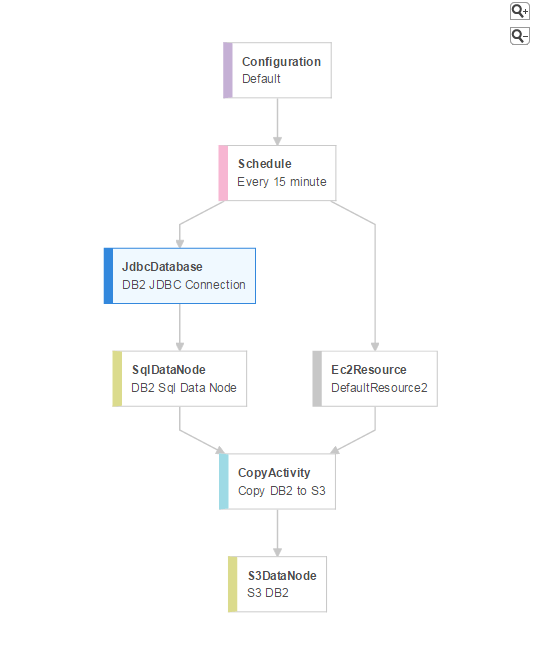

Add a New SQL Data Node, by clicking on Add button. Go to its configuration and click on drop down "Add an optional field" and select "Database." You should see a new database component in your pipeline.

Click on the Database component and you should see its configuration. Configure it as below:

- Type: ‘JDBC Database’.

- Username: <Your DataDirect Cloud username>

- Password: <Your DataDirect Cloud password>

- Connection String: jdbc:datadirect:ddcloud:database=<Data Source name in DataDirect

- JDBC Driver class: com.ddtek.jdbc.ddcloud.DDCloudDriver

- JDBC Driver Jar Uri: s3://<your S3 bucket>/path/ddcloud.jar

Revisit the SQL Data Node that you have created above and configure it as follows. For the sake of the tutorial, I am fetching the data from Account table that I have in IBM Db2 in to S3.

- Table: Db2.ACCOUNT

- Select Query: SELECT * FROM Db2.ACCOUNT

Next, add an S3DataNode. Configure it as follows. File path is location of file on your S# bucket where the data read through Salesforce JDBC gets saved.

- Type: S3DataNode

- File Path: s3://<Your S3 Bucket>/path/<filename>.csv

Now add an CopyActivity and configure it as follows:

- Type: CopyActivity

- Input: <SQL Data Node> that you have configured above

- Output: <S3 Data Node> that you have configure above

- Runs On: <Create a new Resource>. This will create a new resource component in the workflow.

Go to the newly created resource component and configure it as follows:

- Type: EC2 resource

- When you change it to EC2 resource, you should see Resource role and Role populated to default values

Save the Pipeline and you should see no errors. If there are any they will be displayed on the bottom of the screen. Resolve them before proceeding ahead. Your final pipeline should look similar to the below screenshot.

Activate the Data Pipeline

Once the pipeline is saved without any errors, you can activate the pipeline. Depending on the schedule that you have configured, the pipeline should run and create a CSV file which contains Accounts Table data read from IBM Db2 database configured on DataDirect Cloud using its JDBC drivers. It should be present in the path that you have configured in the S3DataNode.

We hope this tutorial helped you to import IBM Db2 data into Amazon S3 using Amazon Data Pipeline, which you can use to import it in to various Amazon Compute services. This demonstration is not limited to IBM Db2, in fact you can create Amazon Data pipeline with DataDirect Cloud JDBC drivers to connect and import data from over 50+ data sources which includes your favorite SaaS, Relational and Big Data sources even if they are behind the firewall.

Try Free for 30 Days