Tutorial: Using Google Cloud Dataflow to Ingest Data Behind a Firewall

In this tutorial, you'll learn how to easily extract, transform and load (ETL) on-premises Oracle data into Google BigQuery using Google Cloud Dataflow.

Google Cloud Dataflow is a managed service for processing and enriching both real-time streaming and batch data. Dataflow runs pipelines written with Apache Beam, and this tutorial uses the Beam SDK for Java to move data in and out. As you might expect from a cloud-based service, the Java SDK's built-in I/O connectors target a predefined set of data stores, most of them cloud and big data systems.

However, Dataflow can reach well beyond big data and cloud stores to many other sources through the JDBC interface. Using Progress DataDirect JDBC connectors, you can bring Google Dataflow's processing power to a wide range of on-premises data, including Oracle, SQL Server, IBM DB2, PostgreSQL and many more. Expanding your data sources this way lets you integrate diverse external databases with the Google ecosystem and eliminate non-Google data silos.
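Part of what makes the JDBC route general is that every driver reports column types the same way: as `java.sql.Types` codes returned by `ResultSetMetaData.getColumnType`. The sketch below shows one way a pipeline could turn those codes into BigQuery column types when building a destination schema. The class name `JdbcToBigQueryTypes` and the specific mapping choices are illustrative assumptions, not part of DataDirect's or Google's tooling.

```java
import java.sql.Types;

/**
 * Hypothetical helper (illustrative only): maps the java.sql.Types code that any
 * JDBC driver reports via ResultSetMetaData.getColumnType(...) to a BigQuery
 * column type name. The mapping is an assumed design choice, not an official table.
 */
final class JdbcToBigQueryTypes {
    private JdbcToBigQueryTypes() {}

    static String bigQueryType(int jdbcType) {
        switch (jdbcType) {
            case Types.CHAR:
            case Types.VARCHAR:
            case Types.LONGVARCHAR:
            case Types.NCHAR:
            case Types.NVARCHAR:
            case Types.LONGNVARCHAR:
            case Types.CLOB:
            case Types.NCLOB:
                return "STRING";
            case Types.TINYINT:
            case Types.SMALLINT:
            case Types.INTEGER:
            case Types.BIGINT:
                return "INTEGER";
            case Types.REAL:
            case Types.FLOAT:
            case Types.DOUBLE:
                return "FLOAT";
            case Types.NUMERIC:
            case Types.DECIMAL:
                // BigQuery NUMERIC holds 38 digits of precision with a scale of 9;
                // wider values would need BIGNUMERIC or STRING instead.
                return "NUMERIC";
            case Types.BIT:
            case Types.BOOLEAN:
                return "BOOLEAN";
            case Types.DATE:
                return "DATE";
            case Types.TIME:
                return "TIME";
            case Types.TIMESTAMP:
                // No time zone information: keep the wall-clock value as DATETIME.
                return "DATETIME";
            case Types.TIMESTAMP_WITH_TIMEZONE:
                return "TIMESTAMP";
            case Types.BINARY:
            case Types.VARBINARY:
            case Types.LONGVARBINARY:
            case Types.BLOB:
                return "BYTES";
            default:
                // Fall back to STRING for any type this sketch does not handle explicitly.
                return "STRING";
        }
    }
}
```

Mapping `TIMESTAMP` (no time zone) to BigQuery `DATETIME` and only `TIMESTAMP_WITH_TIMEZONE` to `TIMESTAMP` keeps wall-clock values unchanged; many pipelines instead normalize everything to a UTC `TIMESTAMP`. Unrecognized types fall back to `STRING` so that a load does not fail on an unexpected column.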

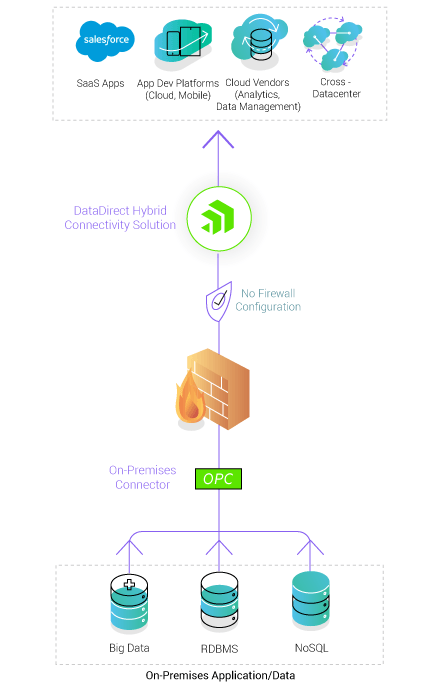

Combining on-premises data with cloud technologies almost always raises immediate security concerns. The DataDirect Hybrid Data Pipeline addresses them by letting you securely access data behind any firewall without complex network configuration such as SSH tunnels, reverse proxies or VPNs. It can also be deployed to work with existing network configurations, which is often a requirement in industries such as financial services.

The DataDirect Hybrid Data Pipeline JDBC driver can be used to ingest both on-premises and cloud data into Google Cloud Dataflow through the Apache Beam Java SDK. We've written a detailed tutorial that walks through this ETL flow, from an on-premises Oracle database to Google BigQuery.
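Connecting Beam to Hybrid Data Pipeline comes down to a driver class name and a JDBC URL. The minimal sketch below assembles both. The driver class `com.ddtek.jdbc.ddhybrid.DDHybridDriver` and the `jdbc:datadirect:ddhybrid://host:port;hybridDataPipelineDataSource=name` URL layout are assumptions based on DataDirect's documented format, and the helper class `HybridPipelineJdbc` is hypothetical, so check both against the documentation for your driver version.

```java
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

/**
 * Hypothetical helper that assembles connection settings for the DataDirect
 * Hybrid Data Pipeline JDBC driver. The driver class name and URL layout are
 * assumptions; verify them against your driver's documentation.
 */
final class HybridPipelineJdbc {
    /** Assumed driver class shipped in the Hybrid Data Pipeline JDBC jar. */
    static final String DRIVER_CLASS = "com.ddtek.jdbc.ddhybrid.DDHybridDriver";

    private HybridPipelineJdbc() {}

    /**
     * Builds a URL of the assumed form
     * jdbc:datadirect:ddhybrid://host:port;hybridDataPipelineDataSource=name[;key=value...]
     */
    static String url(String host, int port, String dataSource, Map<String, String> extraProps) {
        if (host == null || host.isEmpty()) {
            throw new IllegalArgumentException("host is required");
        }
        if (port <= 0 || port > 65535) {
            throw new IllegalArgumentException("port out of range: " + port);
        }
        if (dataSource == null || dataSource.isEmpty()) {
            throw new IllegalArgumentException("dataSource is required");
        }
        // Properties are separated by semicolons after the host:port prefix.
        StringJoiner url = new StringJoiner(";");
        url.add("jdbc:datadirect:ddhybrid://" + host + ":" + port);
        url.add("hybridDataPipelineDataSource=" + dataSource);
        if (extraProps != null) {
            // TreeMap keeps extra properties in a stable, sorted order.
            for (Map.Entry<String, String> e : new TreeMap<>(extraProps).entrySet()) {
                url.add(e.getKey() + "=" + e.getValue());
            }
        }
        return url.toString();
    }
}
```

In a Beam pipeline, these two values would be passed to `JdbcIO.DataSourceConfiguration.create(driverClassName, url)`, followed by `withUsername` and `withPassword` for the Hybrid Data Pipeline account. In this setup the host and port would be those of the Hybrid Data Pipeline server rather than the Oracle database itself.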

Our tutorial demonstrates how to connect to an on-premises Oracle database, read the data, apply a simple transformation and write the result to BigQuery. No additional components from the database vendor are required.
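The tutorial's exact transformation is not reproduced here. As a hypothetical stand-in, the sketch below shows the kind of per-row "T" step that fits between the read and the write: each row is modeled as a column-name-to-value map, column names are lower-cased and restricted to letters, digits and underscores, and string values are trimmed. `RowTransform.normalize` is an illustrative name, not part of any library.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/**
 * Hypothetical "T" step of the ETL flow: normalizes one row read over JDBC
 * before it is written to BigQuery. Rows are modeled as column-name -> value maps.
 */
final class RowTransform {
    private RowTransform() {}

    static Map<String, Object> normalize(Map<String, Object> row) {
        // LinkedHashMap preserves the original column order.
        Map<String, Object> out = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : row.entrySet()) {
            // Oracle stores unquoted identifiers in upper case; lower-case them and
            // replace characters such as '$' or '#' with '_' for BigQuery field names.
            String field = e.getKey().toLowerCase(Locale.ROOT).replaceAll("[^a-z0-9_]", "_");
            Object value = e.getValue();
            // Trim padding from string values (Oracle CHAR columns are blank-padded).
            if (value instanceof String) {
                value = ((String) value).trim();
            }
            out.put(field, value);
        }
        return out;
    }
}
```

Keeping the transformation a plain function makes it easy to unit-test outside Dataflow. Inside a Beam pipeline it would typically be called from a `MapElements` or `DoFn` step between `JdbcIO.read()` and `BigQueryIO.writeTableRows()`, with the result copied into a `TableRow`.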

You can use a similar process with any of the data sources that Hybrid Data Pipeline supports, such as SQL Server, Hive, IBM DB2, Salesforce and Amazon Redshift. Check out the tutorial, and please contact us if you need any help or have any questions.