Implementing Retrieval-Augmented Generation (RAG) with JSON Output Using Nuclia RAG-as-a-Service

Previously published on Nuclia.com. Nuclia is now Progress Agentic RAG.

Goal

Historically, when interacting with machines, humans had to adapt to the machine’s language to ask questions (typically using SQL queries) and then interpret the machine’s response. That response usually came in a structured data format, such as a table or a JSON string, following a specific schema.

Generative answers are beneficial because they allow users to ask questions in natural language, as humans would, and receive human-readable responses.

However, human-readable answers are not ideal when the response needs to be processed by another machine. In such cases, a structured data format is more appropriate.

For example, if you want to know the last book Isaac Asimov wrote in the Foundation series, you can ask a Large Language Model (LLM) and get a clear answer. But if you want to order this book automatically, you need the answer in a structured format to send to the ordering system.

In this scenario, having the best of both worlds is ideal: ask the query in natural language (“Please order the last Isaac Asimov book in the Foundation series”) and receive a structured data response.

This is the purpose of the JavaScript Object Notation (JSON) output option on Nuclia’s "/ask" endpoint.

Note: Regarding the example, you might wonder if it’s important to say “Please” in the query. The answer is yes. First, LLMs are known to be more accurate when the query is polite. Second, it’s good practice to be polite when interacting with any entity—especially one that mimics human conversation—to avoid developing a habit of rudeness.

Principle

To obtain a JSON output from the LLM, you need to define the expected data structure. Using the book ordering example, you would likely expect a JSON object with the following fields:

- title: the title of the book
- author: the author of the book
- ISBN: the ISBN of the book
- price: the price of the book

To achieve this, you need to specify the JSON Schema you want the response to follow. This schema is passed to the endpoint in the "answer_json_schema" parameter of the request. It uses the JSON Schema format. Here is an example of a schema for the book ordering use case:

    {
      "name": "book_ordering",
      "description": "Structured answer for a book to order",
      "parameters": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "The title of the book"
          },
          "author": {
            "type": "string",
            "description": "The author of the book"
          },
          "ref_num": {
            "type": "string",
            "description": "The ISBN of the book"
          },
          "price": {
            "type": "number",
            "description": "The price of the book"
          }
        },
        "required": ["title", "author", "ref_num", "price"]
      }
    }

The most important attributes are the description attributes because that’s what the LLM will use to understand what you expect.

Sometimes the description is not needed; for example, author is explicit enough as an attribute name, and the LLM will understand it properly.

Sometimes you have to be more specific, as with ref_num, which cannot easily be related to the ISBN of the book without a proper description. Or, say you want the publication date: the attribute name publication_date alone would be sufficient for the LLM, but if you add the following description, the LLM will also format the date in ISO format for you:

"Publication date in ISO format"The JSON Schema format is quite powerful and allows you to define complex structures, like nested objects, arrays, etc., or even define constraints on the values (like a minimum or maximum value for a number, or the list of required properties) as such:

    {
      "name": "book_ordering",
      "description": "Structured answer for a book to order",
      "parameters": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "The title of the book"
          },
          "price": {
            "type": "number",
            "description": "The price of the book"
          },
          "details": {
            "type": "object",
            "description": "Details of the book",
            "properties": {
              "publication_date": {
                "type": "string",
                "description": "Publication date in ISO format"
              },
              "pages": {
                "type": "number",
                "description": "Number of pages"
              },
              "rating": {
                "type": "number",
                "description": "Rating of the book",
                "minimum": 0,
                "maximum": 5
              }
            },
            "required": ["publication_date"]
          }
        },
        "required": ["title", "details"]
      }
    }

Use Cases

The use cases are endless, but one of the most obvious is a conversational interface where your users can ask questions in natural language and obtain both a regular plain-text answer and a set of actions to perform based on that answer.

In most cases, you do not want to entirely bypass the human-readable answer because it is a good way to check if the LLM understood the query correctly.

In our example, an LLM that is a proper Isaac Asimov fan could name either Forward the Foundation (the last book by publication date) or Foundation and Earth (the last book in the story chronology) as the last book of the Foundation series. The query alone does not say which one you actually want to order.

So, you will add a human-readable answer in the JSON output to allow the user to continue interacting with the LLM until the query is correctly understood:

    {
      "name": "book_ordering",
      "description": "Structured answer for a book to order",
      "parameters": {
        "type": "object",
        "properties": {
          "answer": {
            "type": "string",
            "description": "Text responding to the user's query with the given context."
          }
          // then the rest of the schema
        }
      }
    }

And you can use the structured data to automate the next steps.
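As a rough illustration of that automation step, the sketch below checks a structured answer against the required fields and basic types of the book_ordering schema, then either prepares an order or falls back to the human-readable answer. The names check_answer, build_order, and handle, and the shape of the order payload, are hypothetical and not part of Nuclia; the check covers only a small subset of JSON Schema.

```python
from typing import Any, Dict, List

# "parameters" part of the book_ordering schema, including the "answer" field.
BOOK_PARAMETERS: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "answer": {"type": "string"},
        "title": {"type": "string"},
        "author": {"type": "string"},
        "ref_num": {"type": "string"},
        "price": {"type": "number"},
    },
    "required": ["answer", "title", "author", "ref_num", "price"],
}

# JSON Schema type names mapped to Python types (only those used here).
_PY_TYPES = {"string": str, "number": (int, float), "object": dict}


def check_answer(answer_json: Dict[str, Any], parameters: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the answer looks usable."""
    problems = []
    for name in parameters.get("required", []):
        if name not in answer_json:
            problems.append(f"missing required field: {name}")
    for name, spec in parameters.get("properties", {}).items():
        if name not in answer_json:
            continue
        value = answer_json[name]
        expected = _PY_TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if expected and (isinstance(value, bool) or not isinstance(value, expected)):
            problems.append(f"wrong type for {name}")
    return problems


def build_order(answer_json: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical hand-off payload for an ordering system."""
    return {"isbn": answer_json["ref_num"], "quantity": 1, "max_price": answer_json["price"]}


def handle(answer_json: Dict[str, Any]) -> Dict[str, Any]:
    """Order when the structured data is complete, otherwise ask the user again."""
    problems = check_answer(answer_json, BOOK_PARAMETERS)
    if problems:
        # Show the human-readable text so the user can refine the query.
        return {"action": "clarify", "message": answer_json.get("answer", ""), "problems": problems}
    return {"action": "order", "message": answer_json["answer"], "order": build_order(answer_json)}


if __name__ == "__main__":
    # Made-up values, for illustration only.
    complete = {
        "answer": "I will order Forward the Foundation.",
        "title": "Forward the Foundation",
        "author": "Isaac Asimov",
        "ref_num": "ISBN-PLACEHOLDER",
        "price": 9.5,
    }
    print(handle(complete)["action"])
    print(handle({"answer": "Do you mean by publication date or by story order?"})["action"])
```

Keeping the "answer" text in both branches means the user always sees what the LLM understood, even when the order goes through.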

Extracting Structured Information from Images

As Nuclia offers a RAG strategy providing images along with the text, it is possible to extract structured information from images if you select a visual LLM.

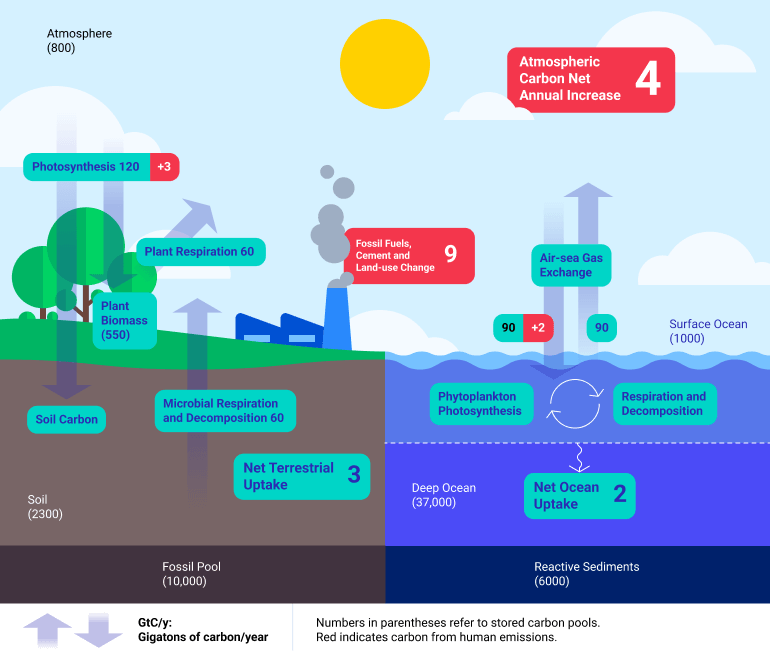

For example, if you index a document containing an image like this:

Then ask the LLM “How much carbon is stored in the soil?” and get the answer as a number with:

    {
      "query": "How much carbon is stored in the soil?",
      "answer_json_schema": {
        "name": "carbon_cycle",
        "description": "Structured answer for the carbon cycle",
        "parameters": {
          "type": "object",
          "properties": {
            "carbon_in_soil": {
              "type": "number",
              "description": "Amount of carbon stored in the soil in gigatons"
            }
          },
          "required": ["carbon_in_soil"]
        }
      }
    }

You will get the following JSON output:

    {
      "answer": "",
      "answer_json": {
        "carbon_in_soil": 2300
      },
      "status": "success",
      …
    }

It would work this way with the Nuclia Python SDK as well:

    from nuclia import sdk

    search = sdk.NucliaSearch()

    # Ask with a visual LLM and pass the paragraph image along with the text
    answer = search.ask_json(
        query="How much carbon is stored in the soil?",
        generative_model="chatgpt-vision",
        rag_images_strategies=[{"name": "paragraph_image", "count": 1}],
        answer_json_schema={
            "name": "carbon_cycle",
            "description": "Structured answer for the carbon cycle",
            "parameters": {
                "type": "object",
                "properties": {
                    "carbon_in_soil": {
                        "type": "number",
                        "description": "Amount of carbon stored in the soil in gigatons",
                    }
                },
                "required": ["carbon_in_soil"],
            },
        },
    )

    print(answer.object["carbon_in_soil"])  # 2300

See the documentation for more details.
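When calling the endpoint without the SDK, the structured value has to be pulled out of the response yourself. The sketch below assumes the response body is available as one JSON document with the "status" and "answer_json" keys shown in the output above; the helper name extract_number is hypothetical.

```python
import json
from typing import Optional


def extract_number(raw_response: str, field: str) -> Optional[float]:
    """Return a numeric field from "answer_json", or None if it is unusable."""
    payload = json.loads(raw_response)
    if payload.get("status") != "success":
        return None
    value = (payload.get("answer_json") or {}).get(field)
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return None
    return float(value)


if __name__ == "__main__":
    sample = '{"answer": "", "answer_json": {"carbon_in_soil": 2300}, "status": "success"}'
    print(extract_number(sample, "carbon_in_soil"))  # 2300.0
```

Returning None rather than raising keeps the caller in control of what to do when the LLM could not produce the number.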

Use Metadata

You might need to retrieve extra information not present in the text, like the publication date, the author, the URL of the source, etc. This information can be stored in the metadata of the resource and then used in the JSON output.

To let the LLM access this metadata, you can use the "metadata_extension" RAG strategy and then define the JSON Schema accordingly.
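A request combining the two could be assembled as in the sketch below. Only the "metadata_extension" strategy name and the "answer_json_schema" parameter come from this article; the "rag_strategies" key, the "types" option, and its "origin" value are assumptions to check against the documentation, and build_ask_payload is a hypothetical helper.

```python
import json
from typing import Any, Dict, List


def build_ask_payload(query: str, schema: Dict[str, Any], metadata_types: List[str]) -> Dict[str, Any]:
    """Assemble a request body that asks for metadata and a JSON answer."""
    return {
        "query": query,
        # Assumed request shape: strategy name from the article, "types" is a guess.
        "rag_strategies": [{"name": "metadata_extension", "types": list(metadata_types)}],
        "answer_json_schema": schema,
    }


# Schema fields that the text alone may not contain but the metadata might.
SOURCE_SCHEMA: Dict[str, Any] = {
    "name": "book_source",
    "description": "Structured answer with source information",
    "parameters": {
        "type": "object",
        "properties": {
            "title": {"type": "string", "description": "The title of the book"},
            "publication_date": {
                "type": "string",
                "description": "Publication date in ISO format",
            },
            "source_url": {"type": "string", "description": "URL of the source"},
        },
        "required": ["title"],
    },
}

if __name__ == "__main__":
    payload = build_ask_payload(
        "Please, order the last Isaac Asimov book in the Foundation series",
        SOURCE_SCHEMA,
        ["origin"],
    )
    print(json.dumps(payload, indent=2))
```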

Try it out today and see the difference for yourself!