Embeddings 101: The Building Blocks of Modern AI

Embeddings are a way of representing text as numerical vectors. Although the term may seem new, they have been around for some time. In this article, we will explore what they are, how they work and how they are used in real-life AI solutions.

What Are Embeddings Really?

At a low level, computers do not work with text directly; they always work with numerical representations. The same applies to neural networks that process words, whether they are completing text or writing source code.

A simple way to understand embeddings is to start with one-hot encoding, a technique that converts a category into a vector. For example, suppose you have a dictionary of five words: apple, pear, plane, car and moon. Each word is represented by a vector whose length equals the number of words in the dictionary. A value of 1 marks the position of the word, and every other position holds 0, so each word gets a different vector:

- apple: [1,0,0,0,0]

- pear: [0,1,0,0,0]

- plane: [0,0,1,0,0]

- car: [0,0,0,1,0]

- moon: [0,0,0,0,1]
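The encoding above can be reproduced with a few lines of plain Python. This is a minimal sketch; the helper name `one_hot` is hypothetical and not part of any library.

```python
# Vocabulary from the example, in the same order as the vectors above.
vocabulary = ["apple", "pear", "plane", "car", "moon"]


def one_hot(word, vocab):
    """Return a one-hot vector: 1 at the word's position, 0 everywhere else."""
    vector = [0] * len(vocab)
    vector[vocab.index(word)] = 1  # raises ValueError for unknown words
    return vector


for word in vocabulary:
    print(word, one_hot(word, vocabulary))
```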

With the above, we have converted words into a vector representation known as one-hot vectors. However, this approach has some issues:

- The larger the dictionary of words, the larger the vectors that represent each of them.

- A lot of space is wasted, because most of the values in each word's vector are zeros.

- The distance between any two vectors is always the same, so the vectors carry no information about how words are related in meaning.
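A short sketch makes the last two issues concrete: with one-hot vectors, every pair of different words is exactly sqrt(2) apart in Euclidean distance, so "apple" is no closer to "pear" than to "plane", and all but one value in each vector is zero. The `one_hot` and `euclidean_distance` helpers are illustrative names, not from a library.

```python
import math

vocabulary = ["apple", "pear", "plane", "car", "moon"]


def one_hot(word, vocab):
    """Return a one-hot vector for `word` over `vocab`."""
    vector = [0] * len(vocab)
    vector[vocab.index(word)] = 1
    return vector


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Related (apple, pear) and unrelated (apple, plane) words are equally far apart.
related = euclidean_distance(one_hot("apple", vocabulary), one_hot("pear", vocabulary))
unrelated = euclidean_distance(one_hot("apple", vocabulary), one_hot("plane", vocabulary))
print(related, unrelated)

# Only one value per vector is non-zero; the rest is wasted space.
zeros = one_hot("apple", vocabulary).count(0)
print(f"{zeros} of {len(vocabulary)} values are zero")
```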

Word embeddings solve these problems by representing words as dense vectors of real numbers with a fixed number of dimensions, usually far smaller than the size of the dictionary. A very important property of embeddings is that words with similar meanings have similar vectors.

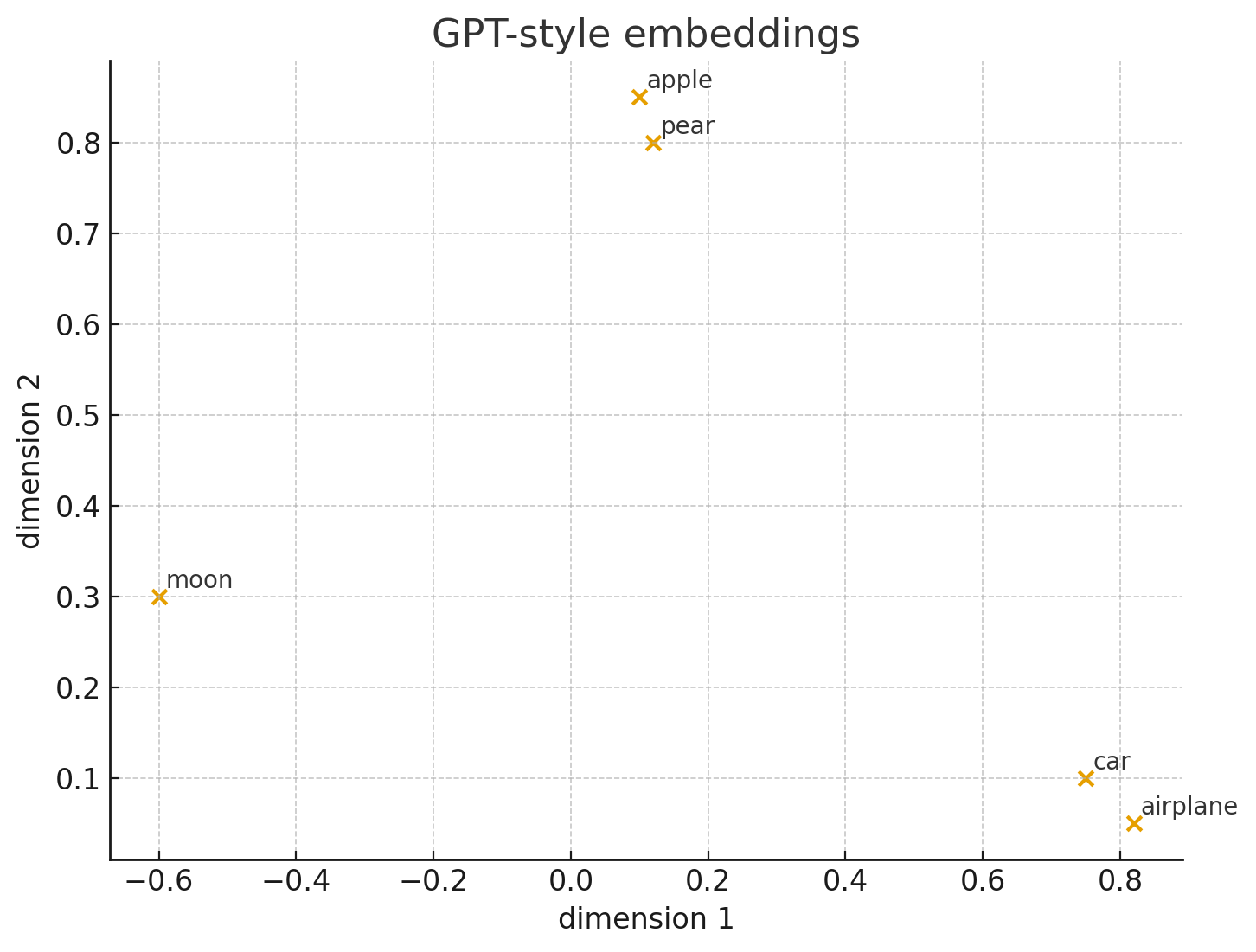

Continuing with the same words from the example, an approximate representation of their embeddings could be as follows:

- apple: [0.10, 0.85, 0.05]

- pear: [0.12, 0.80, 0.04]

- car: [0.75, 0.10, 0.20]

- plane: [0.82, 0.05, 0.18]

- moon: [-0.60, 0.30, 0.90]

You may notice that the values of semantically similar words are very close. If you plot only the first two values of each vector (each value is called a dimension), related words appear close together, while words with different meanings are positioned further apart.
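How close two embeddings are is usually measured with cosine similarity: the dot product of the two vectors divided by the product of their lengths. The sketch below applies it to the approximate three-dimensional vectors from the example (illustrative values, not output from a real model).

```python
import math

# Approximate embeddings from the example above (illustrative values only).
embeddings = {
    "apple": [0.10, 0.85, 0.05],
    "pear": [0.12, 0.80, 0.04],
    "car": [0.75, 0.10, 0.20],
    "plane": [0.82, 0.05, 0.18],
    "moon": [-0.60, 0.30, 0.90],
}


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


for other in ["pear", "car", "plane", "moon"]:
    score = cosine_similarity(embeddings["apple"], embeddings[other])
    print(f"apple vs {other}: {score:.3f}")
```

With these values, "apple" vs "pear" scores close to 1, while "car", "plane" and "moon" score far lower.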

Over the years, several techniques have been developed to generate these vectors, with some of the most popular being Word2Vec, GloVe and FastText. Their limitation is that they generate a single, static vector per word, so they cannot distinguish between the meanings a word takes on in different contexts.

More recently, transformer-based models such as BERT and GPT have made it possible to generate contextual embeddings, which take the surrounding words into account. Consider these two sentences:

- A bat flew out of the cave.

- He hit the ball with a bat.

A contextual model produces a different vector for the word "bat" in each sentence, because it refers to an animal in the first and to a piece of sports equipment in the second.
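The difference between static and contextual embeddings can be illustrated with a toy sketch. The vectors below are hypothetical, and the "contextual" function is a deliberately simplified stand-in that blends a word's vector with the average of its neighbors; real models such as BERT compute context with transformer layers, not with this average.

```python
# Hypothetical 3-dimensional static vectors (toy values, not from a real model).
STATIC_VECTORS = {
    "bat": [0.5, 0.5, 0.0],
    "flew": [0.9, 0.1, 0.0],
    "cave": [0.8, 0.0, 0.2],
    "hit": [0.1, 0.9, 0.0],
    "ball": [0.0, 0.8, 0.3],
}


def static_embedding(word, tokens):
    """Word2Vec-style lookup: the surrounding tokens are ignored."""
    return STATIC_VECTORS[word]


def toy_contextual_embedding(word, tokens, alpha=0.5):
    """Toy stand-in for a contextual model: blend the word's vector with the
    average vector of the other known tokens in the same sentence."""
    context = [STATIC_VECTORS[t] for t in tokens if t in STATIC_VECTORS and t != word]
    if not context:
        return list(STATIC_VECTORS[word])
    average = [sum(values) / len(context) for values in zip(*context)]
    return [(1 - alpha) * w + alpha * c for w, c in zip(STATIC_VECTORS[word], average)]


sentence_1 = "a bat flew out of the cave".split()
sentence_2 = "he hit the ball with a bat".split()

# Static: identical vectors for "bat" in both sentences.
print(static_embedding("bat", sentence_1), static_embedding("bat", sentence_2))
# Toy contextual: "bat" is pulled toward its neighbors in each sentence.
print(toy_contextual_embedding("bat", sentence_1))
print(toy_contextual_embedding("bat", sentence_2))
```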

Use Cases for Embeddings

In the previous section, you learned what embeddings are. The next question is what they are used for, and the answer is that there are a great many practical use cases.

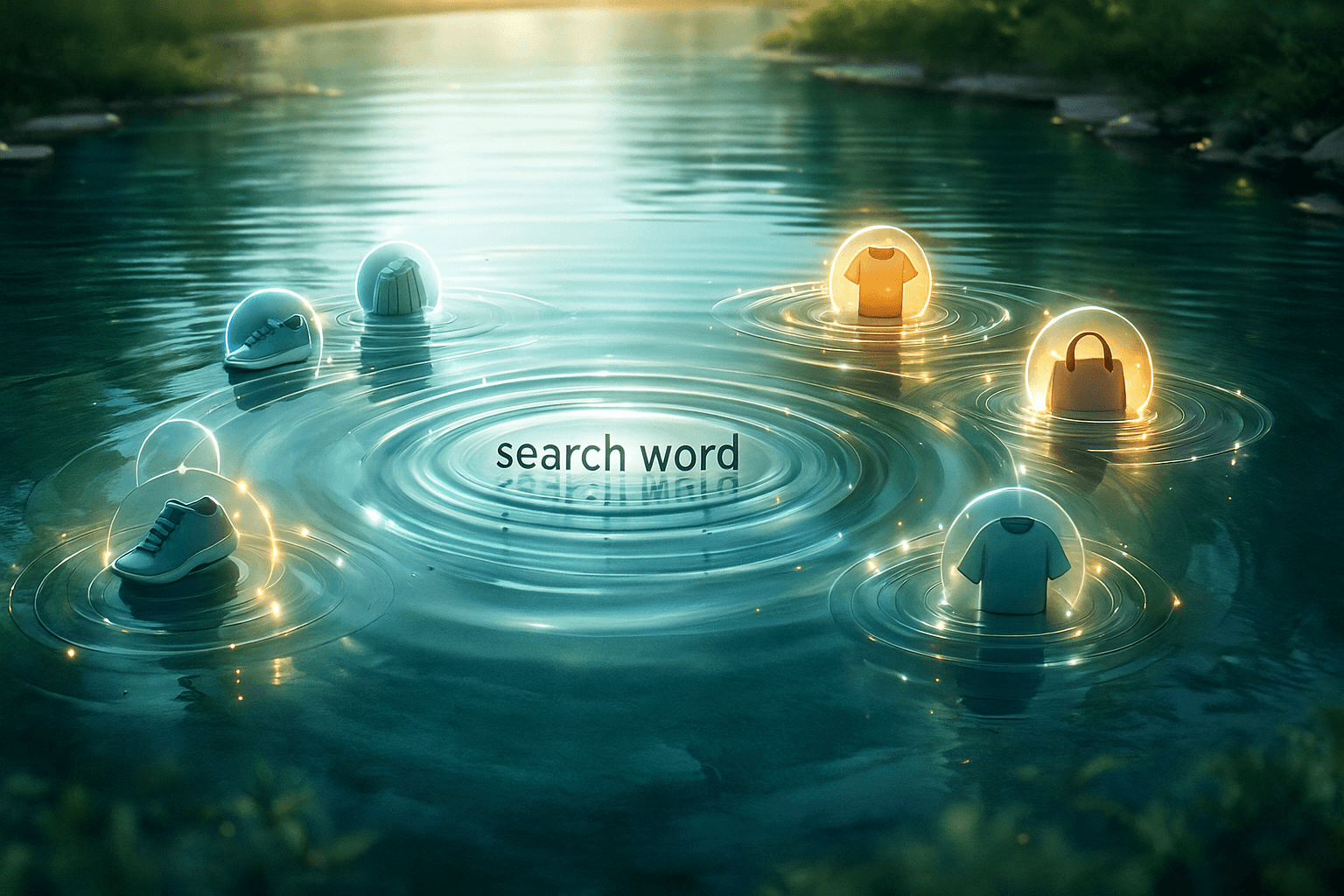

One of the most common uses is semantic search. Imagine an online store that sells products to the public. In a basic system, users must enter exact search terms to find a product, so if they misspell a term or do not remember the product's name, they receive no suggestions. With embeddings, the store can instead return results that are related in meaning to the entered query, which significantly improves the user experience. Shopify is one company that has used embeddings to improve search by better capturing user intent.

Another well-known use case for embeddings is the RAG (Retrieval-Augmented Generation) pattern, which retrieves relevant text from large document collections so that a language model can use it to answer a question. In simple terms, the first part of the pattern consists of splitting documents into chunks, computing an embedding for each chunk and storing both in a database. The second part converts the user's query into an embedding and compares it with the embeddings stored in the knowledge base to retrieve the most relevant chunks. Some companies offer RAG-as-a-Service solutions that automate this entire process.
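The first part of the pattern starts with chunking. A very simple strategy, sketched below under the assumption that splitting on whitespace is good enough, cuts a document into fixed-size windows of words that overlap slightly, so that text near a boundary appears in two consecutive chunks. The function name `chunk_text` and its default sizes are hypothetical; production systems often chunk by tokens, sentences or document sections instead.

```python
def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words so that text near a chunk
    boundary appears in both neighboring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be between 0 and chunk_size - 1")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


document = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_text(document, chunk_size=5, overlap=2):
    print(chunk)
```

Each chunk would then be embedded and stored, as the code later in this article does with individual sentences.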

Embeddings can also be used for text matching, something that tools such as the VS Code editor already take advantage of. This is useful for tasks such as similarity search, spam detection, duplicate content detection and finding duplicate records in databases, and, with models that support other media, even for finding similar images, audio files and videos.
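Duplicate detection, for example, can be sketched as comparing every pair of records and flagging those whose cosine similarity exceeds a threshold. The record vectors and the 0.95 threshold below are hypothetical; a suitable threshold depends on the embedding model and the data. Comparing all pairs is quadratic, so large collections usually rely on a vector database or approximate nearest-neighbor index instead.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def find_near_duplicates(records, threshold=0.95):
    """Return (id_a, id_b, score) for every pair at or above `threshold`."""
    pairs = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(records.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score >= threshold:
            pairs.append((id_a, id_b, score))
    return pairs


# Hypothetical embeddings of three database records.
records = {
    "record-1": [0.20, 0.70, 0.10],
    "record-2": [0.21, 0.69, 0.11],
    "record-3": [0.80, 0.10, 0.30],
}

for id_a, id_b, score in find_near_duplicates(records):
    print(f"{id_a} and {id_b} look like duplicates ({score:.3f})")
```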

Creating and Using Embeddings

To give you a better idea of how embeddings are used in practice, I will show you some Python code.

Many projects and services can create text embeddings today, including open source projects. For practical reasons, however, I will use the OpenAI embeddings service, as it is one of the most well-known and widely used.

The first step is to import the openai package, which we use to obtain the embeddings of a text, along with the numpy package to compute the similarity between vectors. Next, we create an OpenAI client and a list of sentences that simulate the contents of different documents:

```python
from openai import OpenAI
import numpy as np

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

# Sample sentences that simulate the contents of different documents.
sentences = [
    "The sun rises in the east and sets in the west every day.",
    "Python is a popular programming language for data science.",
    "Regular exercise and healthy eating contribute to wellness.",
    "The stock market experienced significant volatility this week.",
    "Classical music concerts feature orchestras and solo performances.",
]
```

Once we have the list of sentences, we create their embeddings and store them in a new list, which simulates storing them in a knowledge base:

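```python
embeddings = []

for i, sentence in enumerate(sentences, 1):
    print(f"\nGenerating embedding for sentence {i}...")
    # Request the embedding of one sentence from the API.
    response = client.embeddings.create(
        input=sentence,
        model="text-embedding-3-small",
    )
    # The response contains one item per input; take the first (and only) one.
    embedding = response.data[0].embedding
    embeddings.append(embedding)
```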

Each embedding is a vector of floating-point numbers (text-embedding-3-small returns 1,536 dimensions by default). Its first values look like this:

```
First 10 values: [-0.0692155659198761, -0.029784081503748894, 0.024596326053142548, 0.02780454233288765, 0.031194785609841347, 0.05301520973443985, 0.05269666388630867, -0.016268614679574966, 0.023060478270053864, -0.013651984743773937...]
...
```

Once the sentence embeddings are stored in the knowledge base, we can move on to the query step. Here, the user would enter some text to find the records with the highest similarity. For simplicity, in this example I store the query in a variable:

```python
query_text = "What are the best practices for coding in Python?"
```

Since this variable contains plain text, we need to obtain its embedding, using the same model as for the stored sentences:

```python
# Embed the query with the same model used for the stored sentences.
query_response = client.embeddings.create(
    input=query_text,
    model="text-embedding-3-small",
)
query_embedding = query_response.data[0].embedding
```

Once all the embeddings are ready, we calculate the similarity between the query and each stored embedding. To keep things as simple as possible, the following code uses numpy's dot function:

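```python
def cosine_similarity(vec1, vec2):
    # Equals cosine similarity only when both vectors have length 1,
    # which is the case for OpenAI embeddings.
    return np.dot(vec1, vec2)
```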

The previous code assumes that the OpenAI embeddings are normalized to a length of 1, so their dot product equals their cosine similarity, a value between -1 and 1. If you use a model whose embeddings are not normalized, divide the dot product by the product of the two vectors' lengths, or normalize the vectors first. Next, we obtain the similarity between each element of the list and the query:

```python
print("\nCalculating cosine similarity with each sentence:")
similarities = []

for i, embedding in enumerate(embeddings, 1):
    similarity = cosine_similarity(query_embedding, embedding)
    similarities.append(similarity)
    print(f"Sentence {i}: {similarity:.6f}")

# 0-based index and score of the best match.
max_similarity_index = np.argmax(similarities)
max_similarity_score = similarities[max_similarity_index]
```
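As an aside, the loop above relies on the unit-length assumption. If you switch to a model whose vectors are not normalized, a sketch like the following computes the full cosine similarity, or normalizes the vectors so that the plain dot product works again. The helper names `normalize` and `cosine_similarity_full` are hypothetical; `np.dot` and `np.linalg.norm` are standard numpy functions.

```python
import numpy as np


def normalize(vec):
    """Scale a vector to length 1 (L2 normalization)."""
    v = np.asarray(vec, dtype=float)
    norm = np.linalg.norm(v)
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return v / norm


def cosine_similarity_full(vec1, vec2):
    """Cosine similarity that does not assume unit-length inputs."""
    a = np.asarray(vec1, dtype=float)
    b = np.asarray(vec2, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Two vectors that are not unit length.
a = [3.0, 4.0]
b = [4.0, 3.0]
print(cosine_similarity_full(a, b))               # full formula
print(float(np.dot(normalize(a), normalize(b))))  # dot product of unit vectors
```

Both lines print the same value, which is why a plain dot product is enough once every vector has been normalized. To check a model's output, `np.isclose(np.linalg.norm(query_embedding), 1.0)` should return `True` for unit-length embeddings.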

Finally, we display the most similar result:

```python
print("\nMost similar sentence:")
print(f"Index: {max_similarity_index + 1}")
print(f"Similarity score: {max_similarity_score:.6f}")
print(f"Sentence: '{sentences[max_similarity_index]}'")
```

The result of the execution is as follows:

```
Calculating cosine similarity with each sentence:
Sentence 1: 0.021425
Sentence 2: 0.436147
Sentence 3: 0.139182
Sentence 4: -0.001479
Sentence 5: 0.034793

Most similar sentence:
Index: 2
Similarity score: 0.436147
Sentence: 'Python is a popular programming language for data science.'
```

This exercise shows, step by step, the basics of searching with embeddings.
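The example keeps only the single best match with `np.argmax`. In a RAG pipeline you would often pass several of the most relevant chunks to the language model, so a natural extension is to rank all scores and keep the top k. This is a sketch; the `top_k` helper and the choice of k=3 are assumptions, and the scores are the ones printed by the run above.

```python
import numpy as np


def top_k(similarities, k=3):
    """Return the 0-based indices of the k highest scores, best first."""
    scores = np.asarray(similarities)
    # argsort sorts ascending; reverse it and keep the first k indices.
    return np.argsort(scores)[::-1][:k].tolist()


# Similarity scores from the run above (sentences 1 to 5).
similarities = [0.021425, 0.436147, 0.139182, -0.001479, 0.034793]

for rank, idx in enumerate(top_k(similarities, k=3), 1):
    print(f"{rank}. Sentence {idx + 1}: {similarities[idx]:.6f}")
```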

Conclusion

Throughout this article, you have learned about the power of embeddings, which allow you to find similar information. They are undoubtedly a very important part of the current AI ecosystem, because they make it possible to solve problems that were previously difficult to implement or that produced imprecise results.

When using AI-based solutions, always remember to consider issues such as information privacy, costs and model bias, which can lead to inaccurate results. I encourage you to continue exploring the topic and trying different free and paid solutions, so that you can choose the one that best fits your needs.

Finally, the code shown is just a starting point that you can adapt and improve according to your particular use case.

Héctor Pérez

Héctor Pérez is a Microsoft MVP with more than 10 years of experience in software development. He is an independent consultant, working with business and government clients to achieve their goals. Additionally, he is an author of books and an instructor at El Camino Dev and Devs School.