Demystifying AI: Cutting Through the Hype

Since the launch of ChatGPT on November 30, 2022, nothing has been the same. Today, search engines use AI to summarize the results they find, applications create art-like images and videos in just a few seconds, nonexistent music groups trend on Spotify, and you can do vibe coding with programming agents, among many other things.

However, have you ever wondered what the term “artificial intelligence” means, and what lies behind an LLM (large language model)? In this article, we will debunk some myths related to the AI hype, but first we need to look at the history of AI and how LLMs work.

Birth of the Term Artificial Intelligence

Whether we like it or not, artificial intelligence is here to stay. Although it may seem like a recent term, the truth is that it has been with us longer than you might think: since 1956, to be precise.

That year, a conference was held at Dartmouth College to study the premise that any aspect of learning or any other feature of intelligence could, in principle, be described so precisely that a machine could be made to simulate it.

This conference is credited with the birth of the term artificial intelligence. You can see a photo of the conference participants below:

Early Years of Artificial Intelligence

You might be surprised, but the first algorithms and programs capable of playing chess emerged in the 1950s and 1960s. Alan Turing himself published an essay indicating that chess was an ideal source of ideas about artificial intelligence.

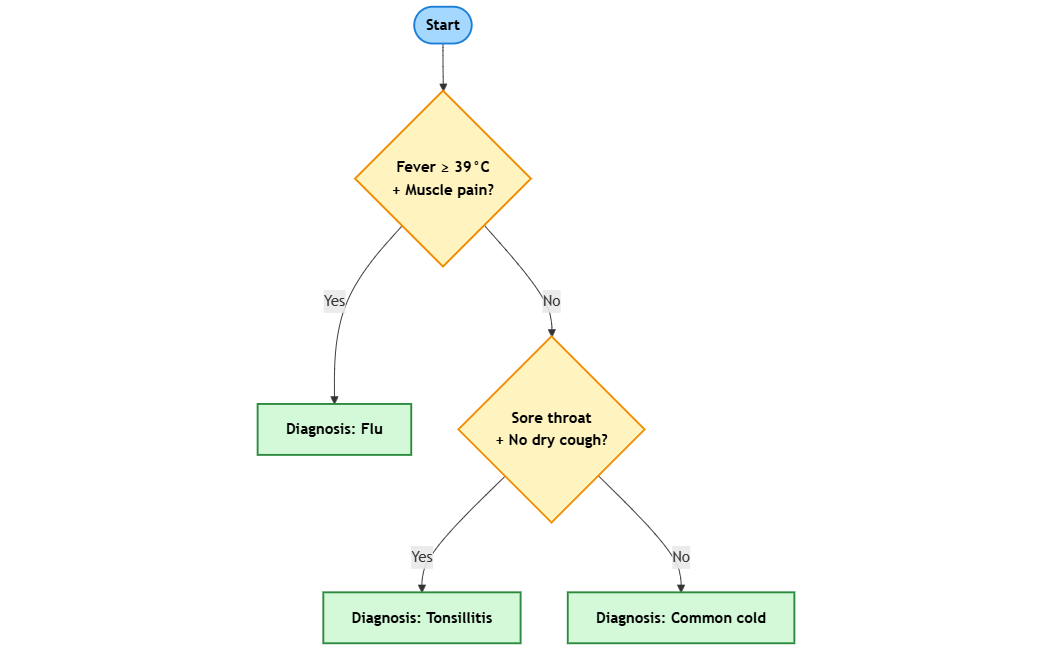

Later, during the 1980s and 1990s, expert systems were developed. These emulated the decision-making of a human expert in fields such as medicine, finance and technical diagnosis. They were built from rules, facts and domain knowledge, which allowed them to reach conclusions, diagnoses or recommendations.

In the previous image, you can see a very basic example of the flow of an expert system.
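The idea of rules and facts leading to conclusions can be sketched in a few lines of Python. This is a minimal illustration, not how any particular historical expert system was built: the rules, the fact names and the `infer` function are all hypothetical and invented for this example.

```python
# Hypothetical rules, invented for illustration only.
# Each rule: if ALL conditions are known facts, add the conclusion.
RULES = [
    ({"fever", "cough"}, "possible_flu"),
    ({"possible_flu", "short_of_breath"}, "see_a_doctor"),
]


def infer(facts, rules):
    """Forward chaining: keep applying rules until nothing new is learned."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


conclusions = infer({"fever", "cough", "short_of_breath"}, RULES)
print(sorted(conclusions))
```

Notice that every conclusion comes from a rule a person wrote by hand. Nothing is learned from data, which is the main difference from the machine learning approaches that came later.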

Understanding Large Language Models

To debunk the myths related to modern AI models, we need a broad understanding of how they work.

Firstly, artificial intelligence is the field that seeks to make machines perform tasks that normally require human intelligence. This term is quite broad, and we can understand it better through the analogy of a restaurant, whose purpose is to serve food to diners through cooks, recipes, utensils, etc.

The next term we need to understand is machine learning, which is a subfield of artificial intelligence focused on recognizing patterns in data. Following the restaurant analogy, you can think of it as a chef who can learn to cook by observing and practicing.

Previously, we stated that the purpose of AI is to imitate human intelligence, so I recommend a short exercise that lets you experience pattern recognition for yourself. Below are examples of two languages, identified as Language A and Language B:

Example 1

Language A: 안녕

Language B: こんにちは

Example 2

Language A: 감사합니다

Language B: ありがとう

Example 3

Language A: 고양이

Language B: 猫

From the previous examples, could you determine which language the following text belongs to?

Example 4

엄마

I’m sure you guessed the answer by selecting Language A, which is Korean. Language B is Japanese. This happened because your brain detected certain patterns, which we will call variables, such as the square syllable blocks, and automatically assigned a higher probability to Language A.
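The same exercise can be reproduced with a very small program. The sketch below is only an illustration under simple assumptions: the single variable it uses is the script family of each character (taken from Python's standard `unicodedata` module), and the `features`, `train` and `predict` functions are hypothetical names invented here, not part of any library.

```python
import unicodedata
from collections import Counter, defaultdict

# The three example pairs from the exercise above.
TRAINING = [
    ("안녕", "A"), ("こんにちは", "B"),
    ("감사합니다", "A"), ("ありがとう", "B"),
    ("고양이", "A"), ("猫", "B"),
]


def features(text):
    """Describe each character by its Unicode script family, e.g. HANGUL."""
    return [unicodedata.name(ch, "UNKNOWN").split()[0] for ch in text]


def train(examples):
    """Count how often each script family appears under each label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(features(text))
    return counts


def predict(counts, text):
    """Turn the counts of matching features into a probability per label."""
    scores = {
        label: sum(seen[f] for f in features(text))
        for label, seen in counts.items()
    }
    total = sum(scores.values())
    if total == 0:  # nothing recognized: every label is equally likely
        return {label: 1 / len(scores) for label in scores}
    return {label: score / total for label, score in scores.items()}


model = train(TRAINING)
print(predict(model, "엄마"))
```

For Example 4, every character belongs to a script family that was only ever seen in Language A, so the program assigns all of the probability to Language A. Real models work with far more variables and far more data, but the principle of counting patterns in examples is the same.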

The previous exercise is related to the third term you should know, which is neural networks, a type of model that can capture complex relationships between variables in more complicated tasks. Once again, let’s use the restaurant analogy, where a neural network would be a team of helpers with specific tasks like chopping, seasoning, etc., who work in sequence, one after another, to create the perfect dish.
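To make the idea of helpers working one after another more concrete, here is a minimal sketch of a two-layer network in plain Python. Everything in it is an assumption made for illustration: the weights are hand-picked rather than learned, and the two inputs (the share of Hangul characters and the share of kana characters in a text) are hypothetical variables borrowed from the language exercise.

```python
import math


def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Convert raw scores into probabilities that add up to 1."""
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical hand-picked weights; a real network learns them from data.
W1 = [[1.0, -1.0], [-1.0, 1.0]]
B1 = [0.0, 0.0]
W2 = [[2.0, 0.0], [0.0, 2.0]]
B2 = [0.0, 0.0]


def forward(inputs):
    """Pass the inputs through the layers in order, like helpers in a line."""
    hidden = relu(dense(inputs, W1, B1))
    return softmax(dense(hidden, W2, B2))


# Input: [share of Hangul characters, share of kana characters]
probabilities = forward([1.0, 0.0])
print(probabilities)  # [probability of Language A, probability of Language B]
```

Each function hands its result to the next one, and the final step turns the scores into probabilities. In a real neural network, training adjusts the weights automatically until the outputs match the examples.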

With all the above, would it be possible to treat the prediction of a word in a sequence as a machine learning problem? The answer is yes. After training a model to identify sequences in text, if we ask it for the next word in the phrase "The color of the pulp of the mango is…", the word with the highest probability of being selected would likely be yellow. Other words, such as bright or pale, would probably appear with lower probabilities.

What I want to convey with this is that LLMs are essentially models that generate words based on probability, using what they learned when they were trained on books, blogs, scientific articles and practically any available document. This means that an LLM does not understand as a human does, and it also lacks consciousness and common sense.
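A toy version of next-word prediction can be sketched by counting which word follows which. This is a deliberately simplified assumption: the miniature corpus below is made up, the function names are hypothetical, and the model only looks at the last word of the prompt, whereas real LLMs use neural networks that take much more context into account.

```python
from collections import Counter, defaultdict

# Made-up miniature corpus, invented for illustration only.
CORPUS = [
    "the pulp of the mango is yellow",
    "the color of the mango is yellow",
    "a ripe mango is yellow",
    "the pulp is bright",
    "an unripe mango is pale",
]


def train_bigrams(sentences):
    """For every word, count the words that come right after it."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def next_word_probabilities(following, prompt):
    """Return candidate next words with their probabilities, best first."""
    last_word = prompt.lower().split()[-1]
    counts = following.get(last_word, Counter())
    total = sum(counts.values())
    if total == 0:  # the last word was never seen during training
        return {}
    return {word: n / total for word, n in counts.most_common()}


model = train_bigrams(CORPUS)
probabilities = next_word_probabilities(
    model, "The color of the pulp of the mango is"
)
for word, probability in probabilities.items():
    print(f"{word}: {probability:.0%}")
```

With this corpus, yellow gets the highest probability because it follows "is" most often, while bright and pale receive smaller shares. The program has no idea what a mango is; it only repeats the statistics of the text it was given.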

Common Myths About AI

Widely used LLMs are recent, so it is common for myths to surround them, just as happened before with technologies like electricity or computers.

One of the most common myths is that AI models are conscious and even have feelings, which is far from reality. Remember that some companies hold a vast ocean of conversations, such as user chats and customer support tickets, and these have been used to make LLM responses sound increasingly human-like.

Another myth that I constantly see is that AI will replace humans in jobs. Although with each new model it seems that this is becoming a reality, we must remember that since generated content is based on probability, there is truly no reasoning, critical thinking, ethics or genuine creativity behind the generated content. Therefore, human oversight over the results is critical.

The ideal scenario is to use AI as a complementary tool and not as a substitute. For example, a doctor could use it to detect anomalies in X-rays, a programmer to create sections of code that follow a naming convention, and a civil engineer to detect possible structural failures. In all of these cases, it is essential to verify the information before accepting any suggestion as truth.

The last common myth is that AI knows everything and therefore always tells the truth. Once again, this is incorrect, as it is more common than you think for an LLM to invent data or state facts as if they were true when in reality they are made up. These are called hallucinations, and they tend to appear when the AI model does not have enough information about the question, or when its information is outdated.

For example, among OpenAI's models there was one called gpt-3.5-turbo-instruct, which is now deprecated. However, consider what happens when using this prompt in ChatGPT with the GPT-5 model:

I want to use OpenAI's GPT-3.5 model because I've heard it's more affordable. Give me a list of suggestions explaining why it might even be better than the current models.

The response lists advantages of this model that were described in many places at the time; however, it never mentions that we should avoid using it because it is deprecated, as a true expert in the field would, as shown below:

Without a doubt, this is a powerful reason to always check the responses generated by AI models in any field.

Conclusion

Understanding how AI models work allows us to better appreciate their scope and limitations. Far from being infallible models with unlimited knowledge, they are models that only know what has been used to train them and function through probability.

This has allowed us to see that the myths surrounding them are unfounded, so we must avoid falling into the habit of blindly accepting everything they generate, regardless of the field.

What we can do is use them and guide them correctly, turning them into our allies and co-pilots that help us with everyday and complex tasks.

Héctor Pérez

Héctor Pérez is a Microsoft MVP with more than 10 years of experience in software development. He is an independent consultant, working with business and government clients to achieve their goals. Additionally, he is an author of books and an instructor at El Camino Dev and Devs School.