Data Security Basics for Cloud and Big Data Landscapes

Learn about security practices for Big Data, including network security, firewalls, Amazon AWS IAM, Hadoop Cluster Security, Apache Knox, and more.

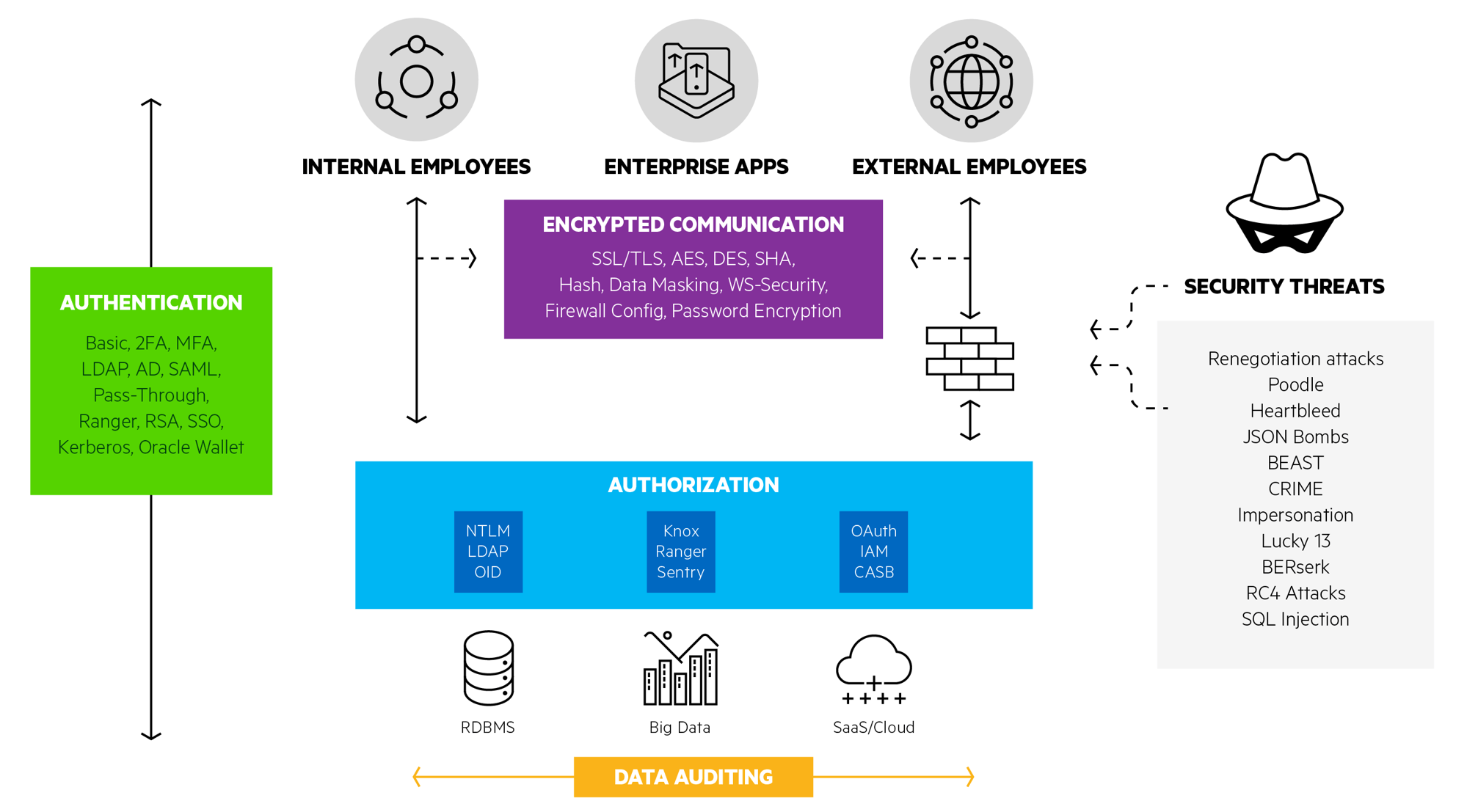

In the wake of increasingly frequent data breaches and emerging data protection laws like GDPR, enterprise security has become paramount when accessing cloud and big data stores across networks. As organizations continue to invest in business intelligence, big data, IoT, and cloud, IT teams are introducing increasingly complex security mechanisms to authorize and encrypt access to data. Your analytics and data management tools need to be able to securely access data spread across different systems without introducing security risks.

Our recent webinar discussed what it takes to securely access data from rapidly evolving enterprise data access layers, advances in end-to-end data security, and the latest technologies for big data and cloud.

For more on cloud and big data security, let’s meet Phil Prudich. Phil is a Product Owner in Research and Development at Progress. He’s been involved with DataDirect for over 12 years, working on ODBC and JDBC data connectivity with a focus on big data and NoSQL sources.

Let’s go through some frequently asked questions that Phil addressed in our recent webinar about the technologies involved in authentication, encryption, authorization, and data auditing.

Interview with Phil Prudich

Phil, start by telling us about network security. Security used to be pretty straightforward with applications and databases all behind a firewall, but that’s not common anymore.

Phil Prudich: The network itself is a critical component in the security of data access. Firewalls and proxies have been around for a while, usually for restricting access to the network, both internally and externally, enforcing corporate network use policies, and so on. But they’re playing a bigger role today as many data sources move towards cloud deployments. On the one hand, this means you have local applications that have to traverse proxies and firewalls more frequently to get to that cloud-based data. On the other hand, you have cloud-based applications that often need access to data stored on-premises, and they need a way to cross that firewall. You need to be able to handle those calls back to on-premises sources without poking a lot of holes in the firewall.
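
For the first case, here is a minimal Python sketch of a local application that sends its outbound HTTP and HTTPS calls through a corporate proxy, using the standard library's urllib.request.ProxyHandler. The proxy address and the build_proxied_opener name are placeholders for illustration. If you call ProxyHandler with no arguments, it reads the usual http_proxy/https_proxy environment variables instead.

```python
import urllib.request

# Placeholder proxy address (an assumption); substitute your organization's proxy.
PROXY_URL = "http://proxy.corp.example.com:8080"

def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests via proxy_url.

    For https:// targets, urllib asks the proxy to open a CONNECT tunnel and
    then performs the TLS handshake with the destination through that tunnel.
    """
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

opener = build_proxied_opener(PROXY_URL)
# opener.open("https://cloud-data.example.com/api") would now traverse the proxy.
```

Passing the mapping explicitly keeps the behavior predictable in services where environment variables may not be set consistently.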

It sounds like it would be difficult to manage all these different systems securely in a distributed cloud/hybrid environment. What’s the most popular way to handle that?

Phil Prudich: The market is moving towards a logically centralized management system. An example of this is Amazon’s AWS IAM, or Identity and Access Management. IAM lets you create and manage users and groups. It also handles authentication and access control for many AWS services. Authentication can be configured to work in a number of ways: you can pass credentials through directly, use certificates, or use integrations with identity providers like Google or Facebook. User and group permissions can be configured to control access to AWS resources, for example, a particular EC2 instance or S3 bucket. Much of this functionality could already be done in a patchwork way, but Amazon is trying to group it into a single place. That makes both your security and your compliance with policies easier to administer.
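
To show how permissions look in practice, here is a hypothetical identity-based policy in IAM's JSON policy format. It grants read access to one S3 bucket and denies everything under a restricted/ prefix. It is paired with a deliberately simplified Python evaluator, which is our sketch and not AWS's policy engine. The evaluator reproduces only the core rules: anything not explicitly allowed is denied, and an explicit Deny overrides any Allow. It ignores conditions, NotAction/NotResource, resource-based policies, and permission boundaries. The bucket name and function names are made up.

```python
import fnmatch
import json

# Hypothetical policy; the bucket name is illustrative only.
POLICY = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow",
     "Action": ["s3:GetObject", "s3:ListBucket"],
     "Resource": ["arn:aws:s3:::example-analytics-bucket",
                  "arn:aws:s3:::example-analytics-bucket/*"]},
    {"Effect": "Deny",
     "Action": "s3:*",
     "Resource": "arn:aws:s3:::example-analytics-bucket/restricted/*"}
  ]
}
""")

def _as_list(value):
    # Policy elements may be a single string or a list of strings.
    return value if isinstance(value, list) else [value]

def _matches(patterns, value):
    # IAM supports * and ? wildcards; fnmatchcase is a close stand-in here.
    return any(fnmatch.fnmatchcase(value, p) for p in patterns)

def is_allowed(policy, action, resource):
    """Simplified sketch (not the real AWS engine): default deny,
    any matching Allow grants access, any matching Deny wins outright."""
    allowed = False
    for stmt in _as_list(policy["Statement"]):
        # Action names are case-insensitive in IAM, so compare them lowercased.
        actions = [a.lower() for a in _as_list(stmt["Action"])]
        if _matches(actions, action.lower()) and _matches(_as_list(stmt["Resource"]), resource):
            if stmt["Effect"] == "Deny":
                return False
            allowed = True
    return allowed

print(is_allowed(POLICY, "s3:GetObject", "arn:aws:s3:::example-analytics-bucket/reports/q1.csv"))
print(is_allowed(POLICY, "s3:GetObject", "arn:aws:s3:::example-analytics-bucket/restricted/payroll.csv"))
```

The first call prints True. The second prints False: the broad Allow matches, but the explicit Deny on the restricted/ prefix overrides it.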

How are you seeing security implemented on Big Data platforms? Let’s talk about Hadoop specifically, since we hear about it frequently.

Phil Prudich: To begin with, at the lowest level of Hadoop, you have the Hadoop Distributed File System, or HDFS. It’s a lot like a regular file system, in that the usual tools of file and directory permissions are available to provide some level of security. It’s not the most user- or administrator-friendly approach to security, though. When it comes to accessing any single node in the cluster, we’ve seen a lot of customers with Hive instances configured for Kerberos, SSL, or both. But the most compelling question here isn’t about the low-level Hadoop file system. It’s really: how do we secure the cluster as a whole?
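
To make the HDFS permission model concrete, here is a minimal Python sketch of the check order the HDFS permissions guide describes:

- If you own the path, the owner bits apply.
- Otherwise, if you belong to the path's group, the group bits apply.
- Otherwise, the "other" bits apply.
- The superuser bypasses all checks.

The HdfsPath and check_access names are hypothetical. The superuser name "hdfs" is an assumption; in HDFS it is whichever user runs the NameNode. An administrator would set the ownership and mode used below with hdfs dfs -chown alice:finance and hdfs dfs -chmod 750 on the path.

```python
from dataclasses import dataclass

@dataclass
class HdfsPath:
    """Hypothetical stand-in for a file or directory's HDFS metadata."""
    owner: str
    group: str
    mode: int  # POSIX-style permission bits, e.g. 0o750

# Bit within an rwx triplet for each operation.
_OP_BITS = {"r": 4, "w": 2, "x": 1}

def check_access(user: str, user_groups: set, path: HdfsPath, op: str,
                 superuser: str = "hdfs") -> bool:
    """Return True if `user` may perform `op` ("r", "w" or "x") on `path`."""
    if user == superuser:  # the superuser bypasses permission checks
        return True
    if user == path.owner:
        triplet = (path.mode >> 6) & 0o7  # owner bits only, even if group/other are wider
    elif path.group in user_groups:
        triplet = (path.mode >> 3) & 0o7  # group bits
    else:
        triplet = path.mode & 0o7  # "other" bits
    return bool(triplet & _OP_BITS[op])

finance_dir = HdfsPath(owner="alice", group="finance", mode=0o750)
print(check_access("alice", {"analysts"}, finance_dir, "w"))   # True: owner has rwx
print(check_access("bob", {"finance"}, finance_dir, "r"))      # True: group has r-x
print(check_access("bob", {"finance"}, finance_dir, "w"))      # False: group lacks w
print(check_access("carol", {"marketing"}, finance_dir, "r"))  # False: other has ---
```

Every rule like this lives on an individual file or directory, which is why managing security this way across a large cluster gets unwieldy.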

And how does that work? How do you handle security at the cluster level?

Phil Prudich: The goal, from a user’s point of view, is that if I log in, the data I access should be based on my identity, not the identity of the app server. That way, I see the data I’ve been given permission to see, while, say, the company’s CEO may see a different set. Hadoop addresses this through what’s called Hadoop delegation, which is built on delegation tokens. It’s a lightweight complement to Kerberos. The individual processes executing across your Hadoop cluster get permission to act on behalf of the client, making requests as that user. It’s the same concept as Kerberos delegation, but much less network-intensive and much more lightweight, because Kerberos doesn’t scale very well in Hadoop clusters.
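
Here is a toy Python sketch of the idea behind delegation tokens, loosely modeled on Hadoop's design. After the client authenticates once, the service issues a token in two parts:

- an identifier naming the owner, a renewer, and an expiry;
- a password that is an HMAC of that identifier under a secret master key.

Any task holding the token can later be verified by recomputing the HMAC, without a trip to the KDC. The function names, JSON encoding, and SHA-256 are choices made for this sketch. Real Hadoop tokens carry more fields (such as issue date, maximum lifetime, and a sequence number), can be renewed and cancelled, and rotate their master keys.

```python
import hashlib
import hmac
import json
import secrets
import time

# Secret known only to the token-issuing service (in Hadoop, e.g. the NameNode).
MASTER_KEY = secrets.token_bytes(32)

def issue_token(owner, renewer, lifetime_s=24 * 3600, now=None):
    """Issue a token to a client that has already authenticated (e.g. via Kerberos)."""
    now = time.time() if now is None else now
    identifier = json.dumps(
        {"owner": owner, "renewer": renewer, "expires": now + lifetime_s},
        sort_keys=True,
    ).encode()
    password = hmac.new(MASTER_KEY, identifier, hashlib.sha256).digest()
    return identifier, password

def verify_token(identifier, password, now=None):
    """Check a token presented by a task: recompute the HMAC locally, with no
    round trip to the KDC. Returns the owner to act on behalf of, or None."""
    now = time.time() if now is None else now
    expected = hmac.new(MASTER_KEY, identifier, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, password):
        return None  # forged or tampered token
    fields = json.loads(identifier)
    if now >= fields["expires"]:
        return None  # expired token
    return fields["owner"]

ident, pw = issue_token("alice", renewer="yarn")
print(verify_token(ident, pw))         # alice
print(verify_token(ident, bytes(32)))  # None: wrong password
```

Because verification only needs the master key, thousands of tasks can present the same token without generating any extra KDC traffic.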

Why is it that Kerberos doesn’t scale well in Hadoop clusters?

Phil Prudich: Generally, it comes down to how the clusters execute jobs. To access a Kerberos-protected service, you have to go to the KDC (Key Distribution Center) and get a ticket, which grants you access to that service. In a Hadoop cluster you can have thousands of nodes that get a job all at once, and they would all turn around and inundate the KDC with ticket requests. That becomes hard to scale; it effectively becomes a denial-of-service (DoS) attack on your KDC. So Hadoop delegation was developed as a less chatty implementation.
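
To put rough numbers on that burst, here is a back-of-the-envelope Python sketch. The job size (2,000 nodes running 10 tasks each) is hypothetical, and the model is deliberately crude. Without tokens, it assumes each task makes one ticket request of its own. With delegation tokens, it counts the client's up-front Kerberos exchange as a single request.

```python
def kdc_requests(nodes: int, tasks_per_node: int, use_delegation_tokens: bool) -> int:
    """Rough count of ticket requests hitting the KDC when a job starts.

    Assumption (for illustration only): without tokens, every task requests
    its own ticket; with tokens, only the submitting client's up-front
    Kerberos exchange reaches the KDC, counted here as one request.
    """
    if use_delegation_tokens:
        return 1
    return nodes * tasks_per_node

# Hypothetical job: 2,000 nodes, each starting 10 tasks at the same moment.
print(kdc_requests(2_000, 10, use_delegation_tokens=False))  # 20000
print(kdc_requests(2_000, 10, use_delegation_tokens=True))   # 1
```

The exact figures matter less than the shape. Without tokens, KDC load grows with the size of the job, and it all arrives at the same instant.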

Can you tell us a bit about the popular Apache projects for big data security?

Phil Prudich: There are several Apache projects that aim to provide some form of central security administration. For example, Apache Knox is a gateway that serves as a single point of entry in front of your entire cluster. Apache Ranger is more deeply integrated into the cluster than Knox is. It provides fine-grained access control, attribute- and role-based authorization, and auditing, as well as some file encryption and key management functionality. Finally, we have Apache Sentry, which was developed at about the same time as Ranger, and there’s a fair amount of overlap between the two. We go into more depth on each of these technologies in the webinar, so I recommend listening to it for more details.
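
To illustrate Knox's single point of entry, here is a Python sketch that builds, but does not send, a WebHDFS directory listing routed through a Knox gateway. The host name, the topology name "default", the credentials, and the knox_webhdfs_request name are placeholders. Port 8443 and the "gateway" path prefix are common Knox defaults but may differ in your deployment. The sketch also assumes the topology accepts HTTP Basic authentication, which is often backed by LDAP.

```python
import base64
import urllib.parse
import urllib.request

def knox_webhdfs_request(gateway_host, topology, hdfs_path, user, password,
                         port=8443, gateway_path="gateway", op="LISTSTATUS"):
    """Build (but do not send) a WebHDFS request addressed to a Knox gateway.

    The client only needs the gateway's address; Knox authenticates the
    caller and forwards the call to the cluster service behind it.
    """
    url = (f"https://{gateway_host}:{port}/{gateway_path}/{topology}"
           f"/webhdfs/v1{urllib.parse.quote(hdfs_path)}?"
           + urllib.parse.urlencode({"op": op}))
    # HTTP Basic credentials, checked by the gateway rather than by each node.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})

req = knox_webhdfs_request("knox.example.com", "default", "/tmp", "alice", "secret")
print(req.full_url)  # https://knox.example.com:8443/gateway/default/webhdfs/v1/tmp?op=LISTSTATUS
```

Only the gateway host has to be reachable through the firewall, rather than every node in the cluster.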

Phil, thanks so much for your time. For more comprehensive detail on Enterprise Data Security including the Cloud and Big Data topics discussed above, plus OpenSSL, native encryption and more, watch the full webinar.