NucliaDB: Your AI Knowledge Engine

Production-grade knowledge infrastructure handles ingestion, enrichment, indexing and retrieval, so teams can deploy reliable, high-precision AI experiences without building and maintaining complex data pipelines.

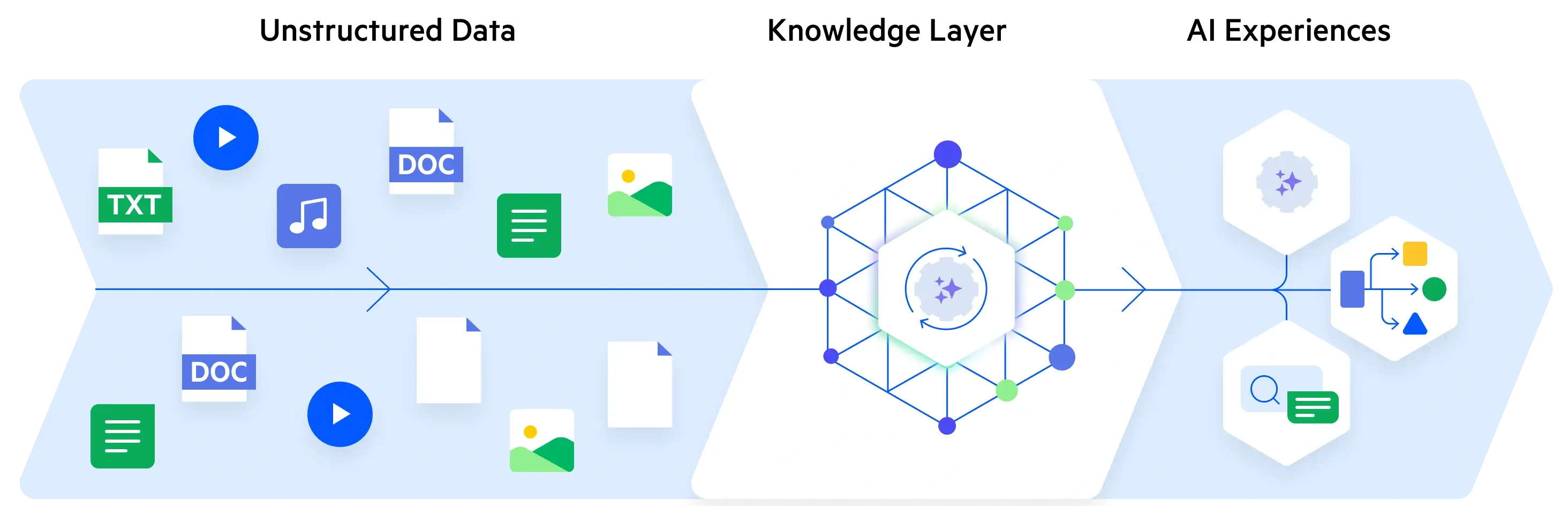

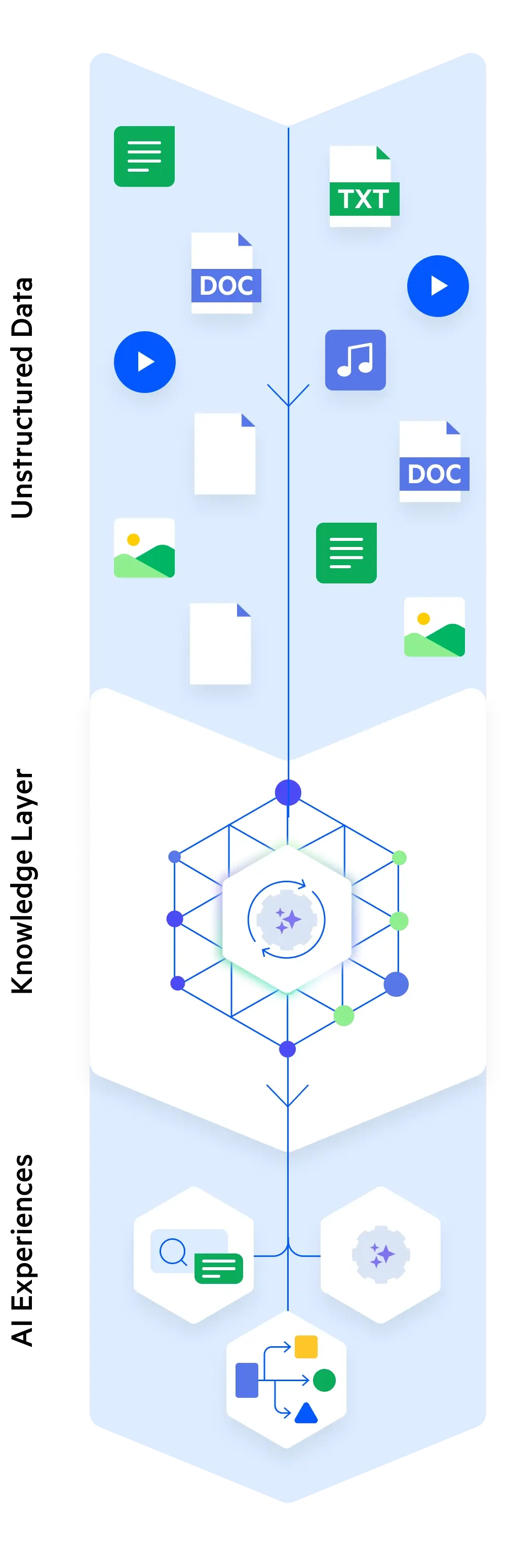

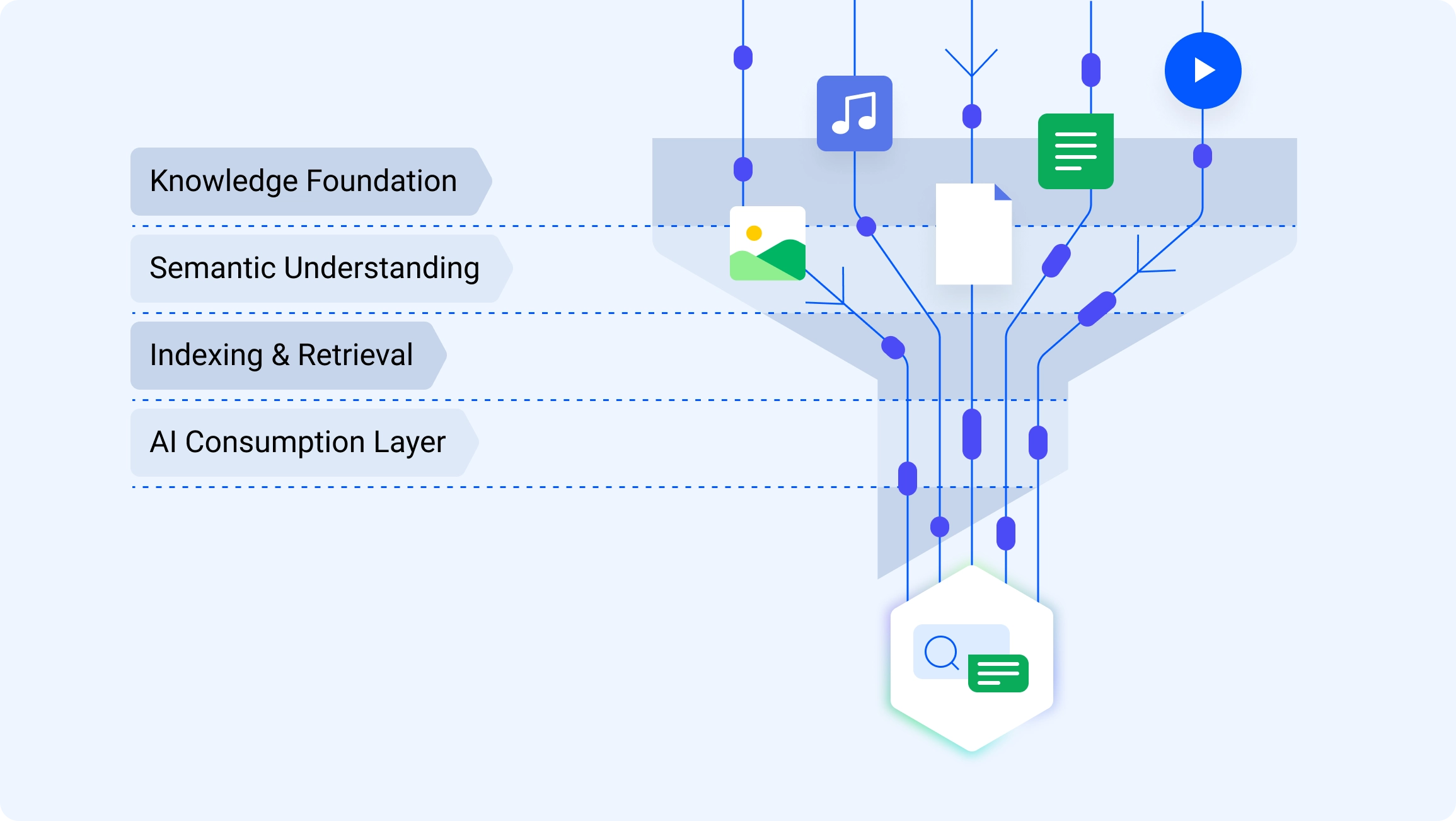

Turn Raw Enterprise Content Into AI-Ready Knowledge

Built on a production-grade knowledge infrastructure, the Progress® Agentic RAG solution removes the hardest parts of deploying retrieval-augmented generation (RAG) at scale. Instead of stitching together ingestion pipelines, schedulers, embedding jobs and indexing logic, teams can connect their enterprise content and have it automatically ingested, enriched, indexed and kept up to date.

The Progress Agentic RAG solution:

- Automatically transforms raw documents, videos and audio into AI-ready knowledge

- Eliminates manual preprocessing and data normalization steps

- Enables faster, more accurate retrieval through multi-layer indexing

- Allows you to choose embedding models that align with your accuracy and cost goals

- Empowers teams to focus on AI behavior and outcomes instead of data engineering

Key Benefits

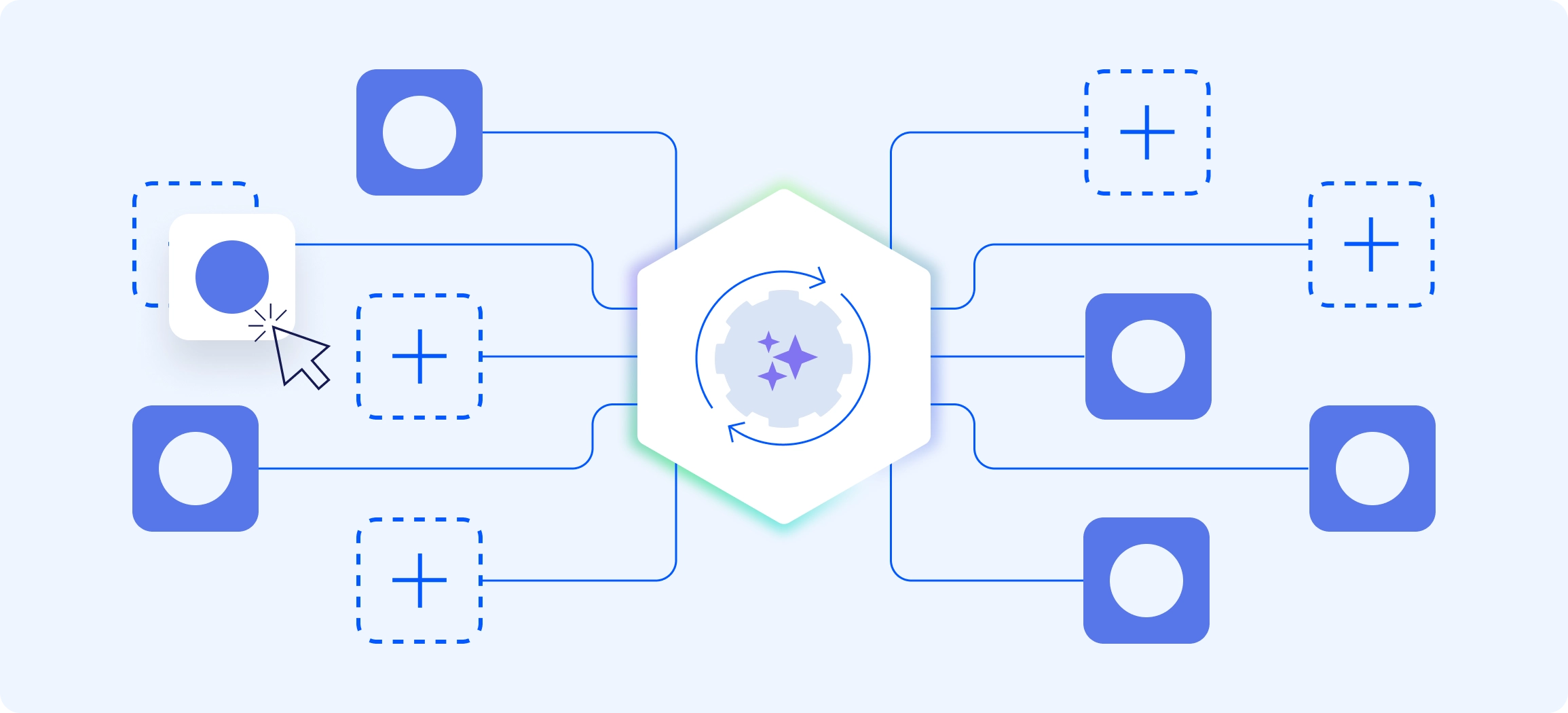

Maintains Architectural Flexibility as Models and Embeddings Evolve

Delivers AI-Ready Knowledge Automatically, Not Manually

Powers Multiple AI Applications from a Single Knowledge Layer

Technical Specifications

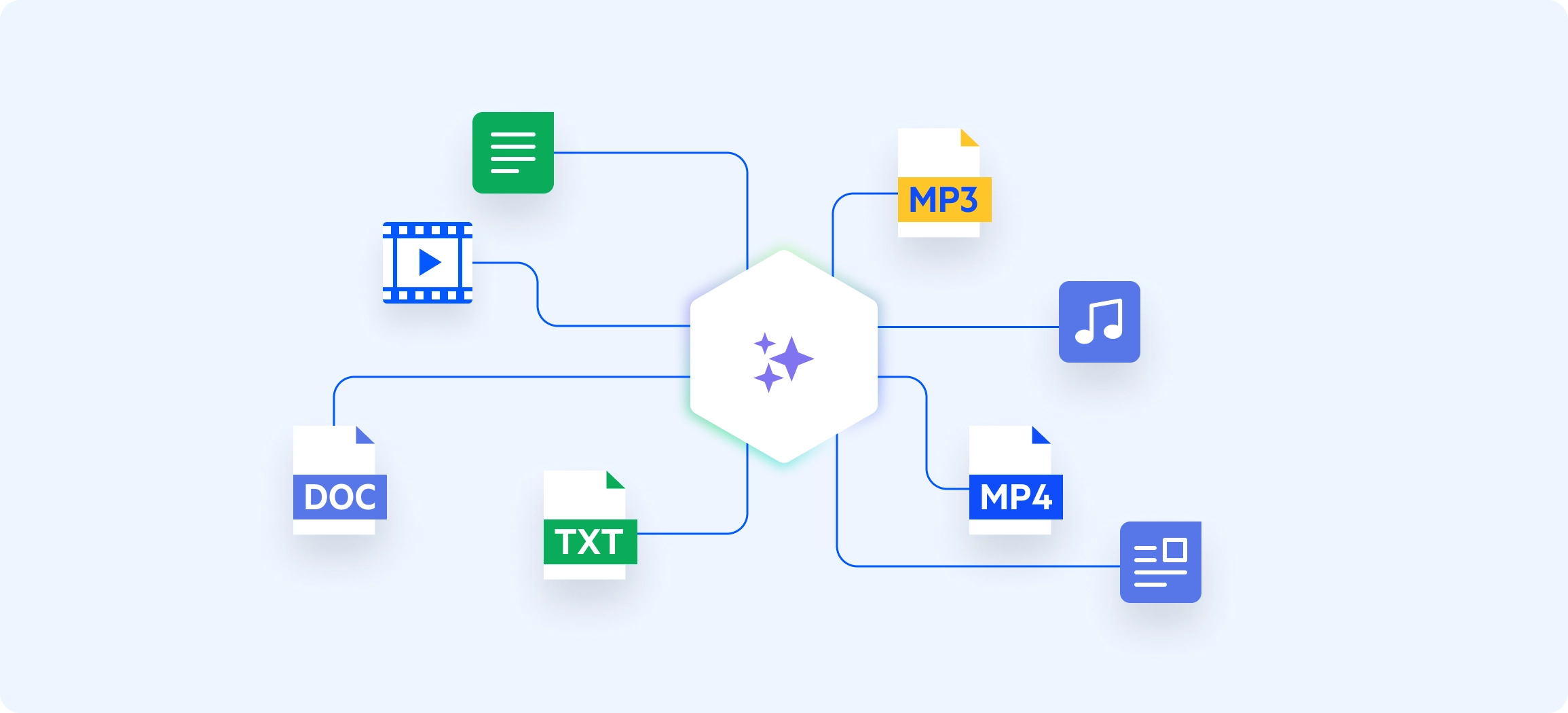

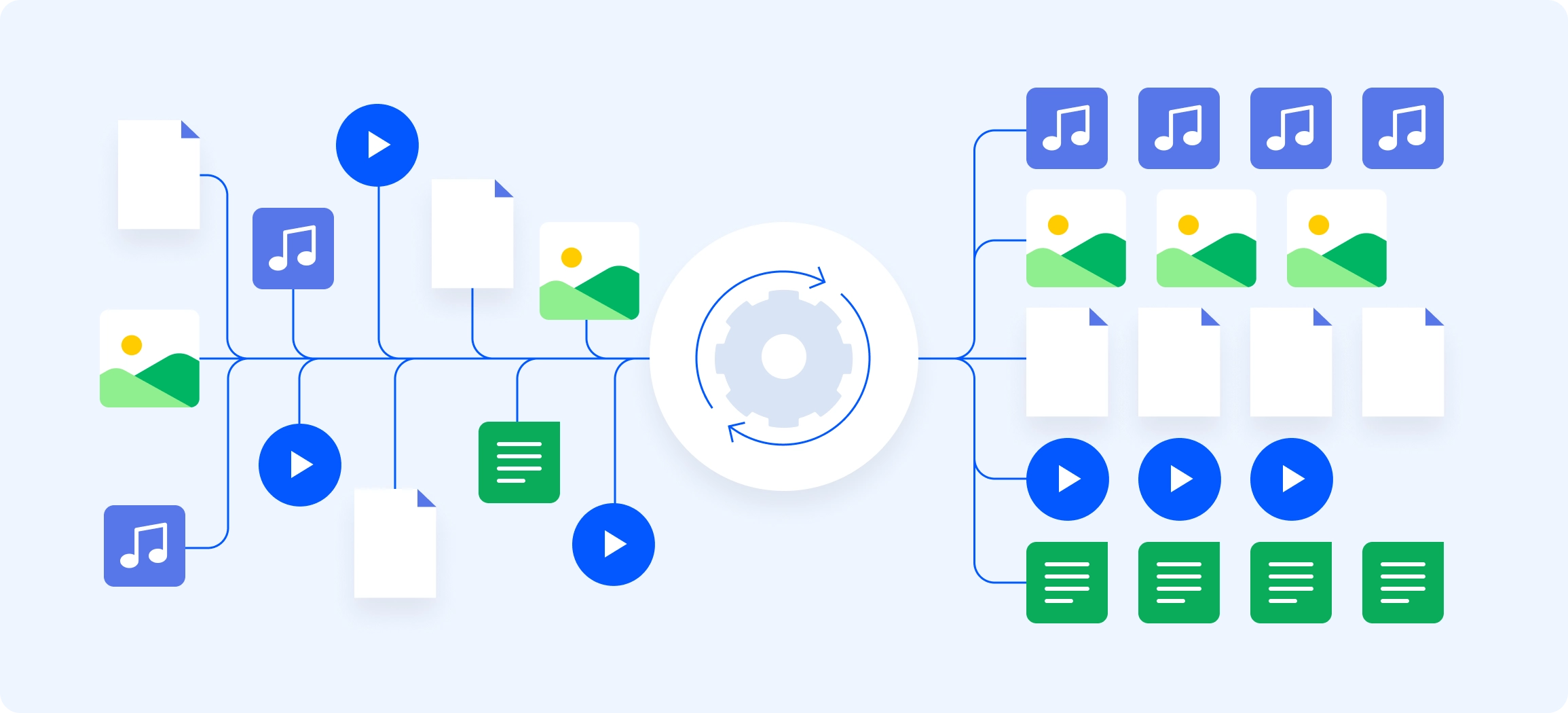

Built on a multi-modal knowledge foundation, the Progress Agentic RAG solution can ingest and index text, documents, audio and video into a unified, searchable knowledge layer. This enables consistent retrieval across formats, so teams can ground AI experiences in the full breadth of enterprise content—not just what’s easiest to parse.

By automatically ingesting, enriching and indexing enterprise content and preparing it for AI use, the solution eliminates custom ingestion pipelines, removes the need for manual preprocessing and reduces ongoing operational effort.
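To make the ingest, enrich and prepare flow concrete, here is a minimal sketch in Python. It is not Progress or NucliaDB code: the `Chunk` schema, the blank-line paragraph splitter and the keyword-based `enrich` step are hypothetical stand-ins for what a production pipeline would do (format parsing, transcription, entity extraction and so on).

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    """One paragraph of a source document, ready for indexing (hypothetical schema)."""
    doc_id: str
    position: int
    text: str
    labels: set[str] = field(default_factory=set)

# Hypothetical enrichment rule: tag chunks that mention known terms.
KNOWN_TERMS = {"invoice": "finance", "contract": "legal", "outage": "operations"}

def split_paragraphs(text: str) -> list[str]:
    """Split on blank lines and drop empty fragments."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]

def enrich(chunk: Chunk) -> Chunk:
    """Attach a label for every known term found in the chunk (case-insensitive)."""
    lowered = chunk.text.lower()
    chunk.labels |= {label for term, label in KNOWN_TERMS.items() if term in lowered}
    return chunk

def ingest(doc_id: str, raw_text: str) -> list[Chunk]:
    """Normalize line endings, split into paragraphs and enrich each one."""
    normalized = raw_text.replace("\r\n", "\n")
    return [enrich(Chunk(doc_id, i, p)) for i, p in enumerate(split_paragraphs(normalized))]

chunks = ingest("doc-1", "The invoice is overdue.\r\n\r\nNo outage was reported.")
for c in chunks:
    print(c.position, sorted(c.labels), c.text)
# 0 ['finance'] The invoice is overdue.
# 1 ['operations'] No outage was reported.
```

The point of the sketch is the shape of the flow: callers hand over raw text and receive enriched, indexable units, with no manual preprocessing in between.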

Instead of indexing data generically, the solution automatically structures content for AI workloads at ingestion. Multi-layer indexing prepares enterprise knowledge for immediate use in high-precision retrieval, search and agent reasoning, without additional configuration or tuning.
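The general idea behind multi-layer indexing can be illustrated with a toy index that keeps two layers side by side: an exact-keyword inverted index and a vector layer, fused at query time. This is a hedged sketch, not the product's implementation. The character-trigram "embedding" stands in for a real embedding model, and reciprocal rank fusion (RRF, with the commonly used constant `k=60`) is a swapped-in fusion technique chosen for illustration; `MultiLayerIndex` and its methods are hypothetical names.

```python
import math
import re
from collections import Counter, defaultdict

def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def trigram_vector(text: str) -> Counter:
    """Stand-in 'embedding': character trigram counts (a real system calls a model)."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class MultiLayerIndex:
    def __init__(self) -> None:
        self.inverted: dict[str, set[str]] = defaultdict(set)  # keyword layer
        self.vectors: dict[str, Counter] = {}                  # vector layer

    def add(self, chunk_id: str, text: str) -> None:
        """Index one chunk into every layer at ingestion time."""
        for tok in tokens(text):
            self.inverted[tok].add(chunk_id)
        self.vectors[chunk_id] = trigram_vector(text)

    def keyword_ranking(self, query: str) -> list[str]:
        hits: Counter = Counter()
        for tok in tokens(query):
            for cid in self.inverted.get(tok, ()):
                hits[cid] += 1
        return [cid for cid, _ in hits.most_common()]

    def vector_ranking(self, query: str) -> list[str]:
        q = trigram_vector(query)
        scored = {cid: cosine(q, v) for cid, v in self.vectors.items()}
        return sorted((cid for cid, s in scored.items() if s > 0), key=lambda cid: -scored[cid])

    def search(self, query: str, k: int = 60) -> list[str]:
        """Reciprocal rank fusion: each layer contributes 1 / (k + rank) per result."""
        fused: dict[str, float] = defaultdict(float)
        for ranking in (self.keyword_ranking(query), self.vector_ranking(query)):
            for rank, cid in enumerate(ranking, start=1):
                fused[cid] += 1.0 / (k + rank)
        return sorted(fused, key=lambda cid: -fused[cid])

index = MultiLayerIndex()
index.add("a", "Quarterly invoice totals for the finance team")
index.add("b", "Indexing pipelines for retrieval")
index.add("c", "Team offsite agenda")
print(index.search("index retrieval"))  # ['b', 'a']
```

Here "b" wins because both layers agree on it, while "a" surfaces only through a weak fuzzy match in the vector layer; "c" matches neither layer and is excluded.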

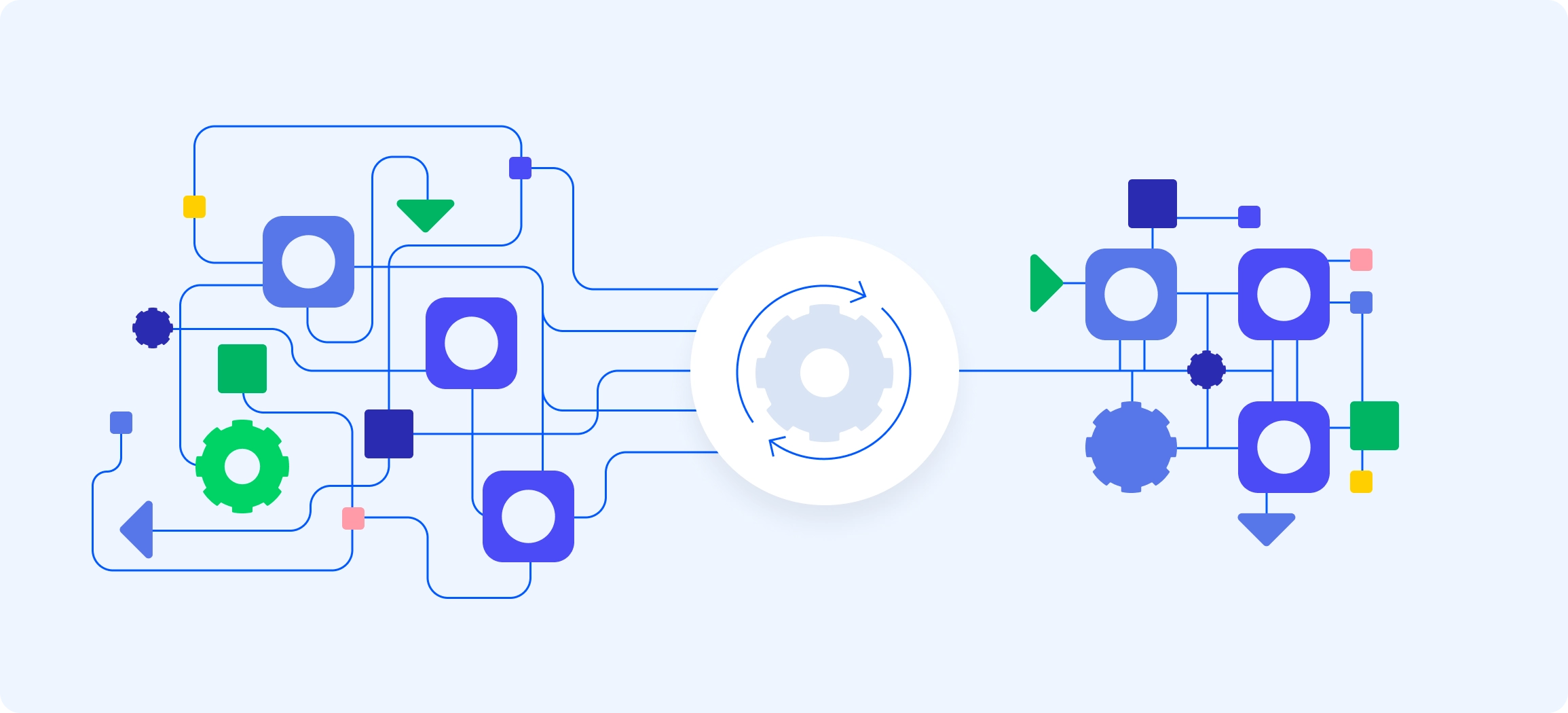

By abstracting ingestion, enrichment and indexing complexity, the solution significantly reduces the engineering effort required to deploy RAG systems. Teams spend less time maintaining data pipelines and more time designing retrieval strategies and AI behavior.

Designed to evolve alongside AI innovation, the solution's modular, flexible knowledge infrastructure supports new retrieval strategies, embedding models and AI capabilities. This architecture reduces the need for costly rework, makes future changes simpler and protects your long-term investment.
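One common way to keep embedding models swappable is to hide them behind a small interface and store vectors per model, so a new model can be added and backfilled without touching callers. The Python sketch below assumes that design; `EmbeddingModel`, `KnowledgeLayer` and `HashedBagOfWords` are hypothetical names, and the hashed bag-of-words "model" is a toy placeholder for a real embedding model, not anything the product ships.

```python
import math
import zlib
from typing import Protocol

class EmbeddingModel(Protocol):
    """Anything that turns text into a fixed-length vector (hypothetical interface)."""
    name: str
    def embed(self, text: str) -> list[float]: ...

class HashedBagOfWords:
    """Toy stand-in for an embedding model: hashes words into `dim` buckets."""
    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.name = f"hashed-bow-{dim}"

    def embed(self, text: str) -> list[float]:
        vec = [0.0] * self.dim
        for word in text.lower().split():
            vec[zlib.crc32(word.encode()) % self.dim] += 1.0
        return vec

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0

class KnowledgeLayer:
    """Keeps source text plus one vector set per registered model."""
    def __init__(self) -> None:
        self.texts: dict[str, str] = {}
        self.models: dict[str, EmbeddingModel] = {}
        self.vectors: dict[str, dict[str, list[float]]] = {}  # model name -> doc id -> vector

    def register_model(self, model: EmbeddingModel) -> None:
        """Add a model and backfill vectors for documents already stored."""
        self.models[model.name] = model
        self.vectors[model.name] = {d: model.embed(t) for d, t in self.texts.items()}

    def add(self, doc_id: str, text: str) -> None:
        """Store a document and embed it with every registered model."""
        self.texts[doc_id] = text
        for name, model in self.models.items():
            self.vectors[name][doc_id] = model.embed(text)

    def search(self, query: str, model_name: str, top_k: int = 3) -> list[str]:
        """Rank documents by cosine similarity under the chosen model."""
        q = self.models[model_name].embed(query)
        scores = {d: cosine(q, v) for d, v in self.vectors[model_name].items()}
        return sorted(scores, key=lambda d: -scores[d])[:top_k]

layer = KnowledgeLayer()
layer.register_model(HashedBagOfWords(8))
layer.add("a", "short note")
layer.add("b", "a much longer onboarding guide for new engineers")
layer.register_model(HashedBagOfWords(64))  # backfills "a" and "b"
print(sorted(layer.vectors["hashed-bow-64"]))  # ['a', 'b']
```

Because the original text is kept alongside the vectors, adopting a different model becomes a backfill rather than a re-ingestion, and queries simply name the model they want to use.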


Ready to Get Started?

Index files and documents from internal and external sources to power your company's LLM use cases with high-quality RAG-as-a-Service.