Build an AI Strategy That Evolves with You by Leveraging RAG

The Progress® Agentic RAG solution offers a flexible, end-to-end pipeline in which each stage can be tuned or swapped without a rebuild. It lets you scale into new AI experiences and optimize for cost and performance while staying future-ready as your strategy evolves.

A Modular Foundation That Grows with Your AI Strategy

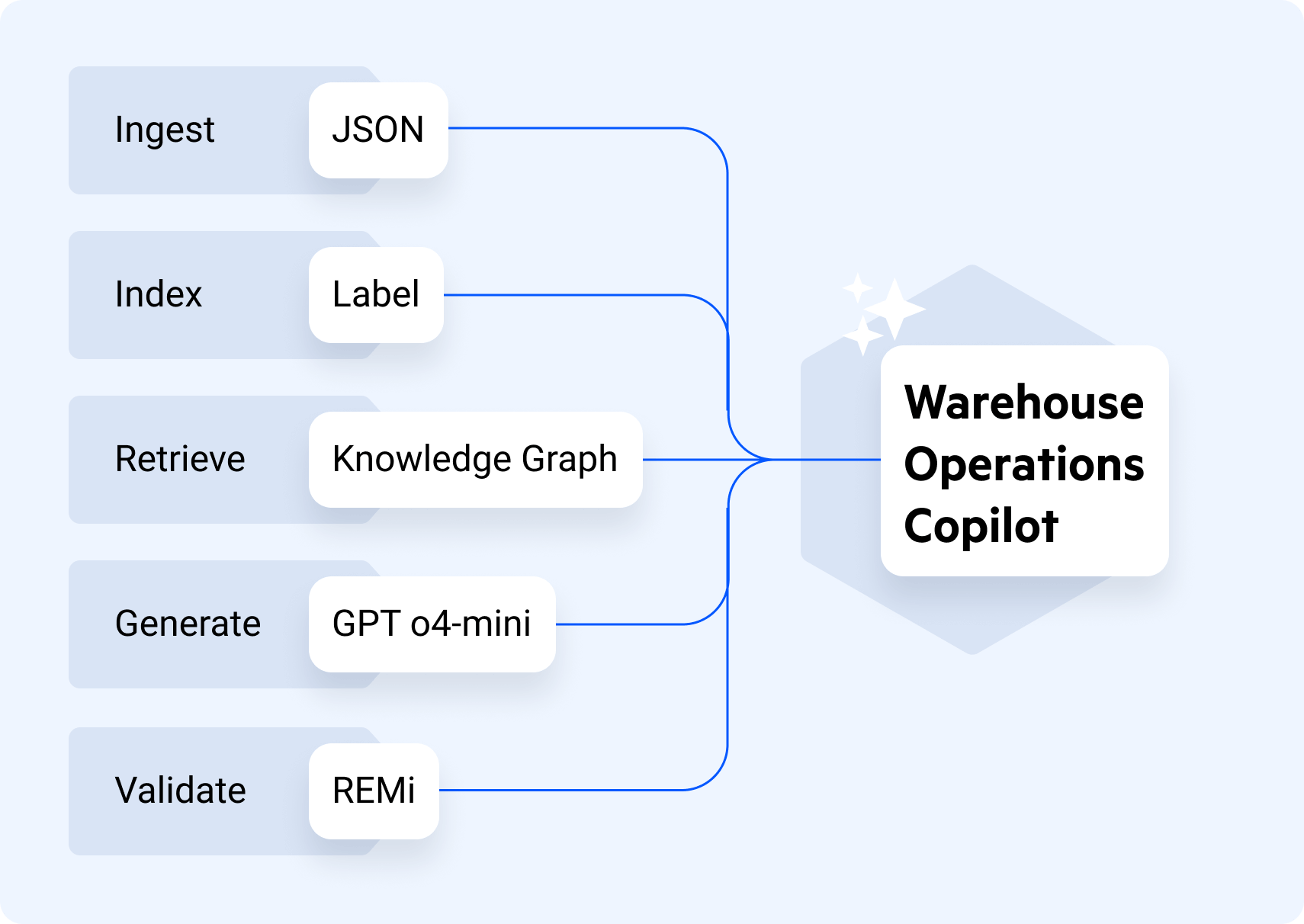

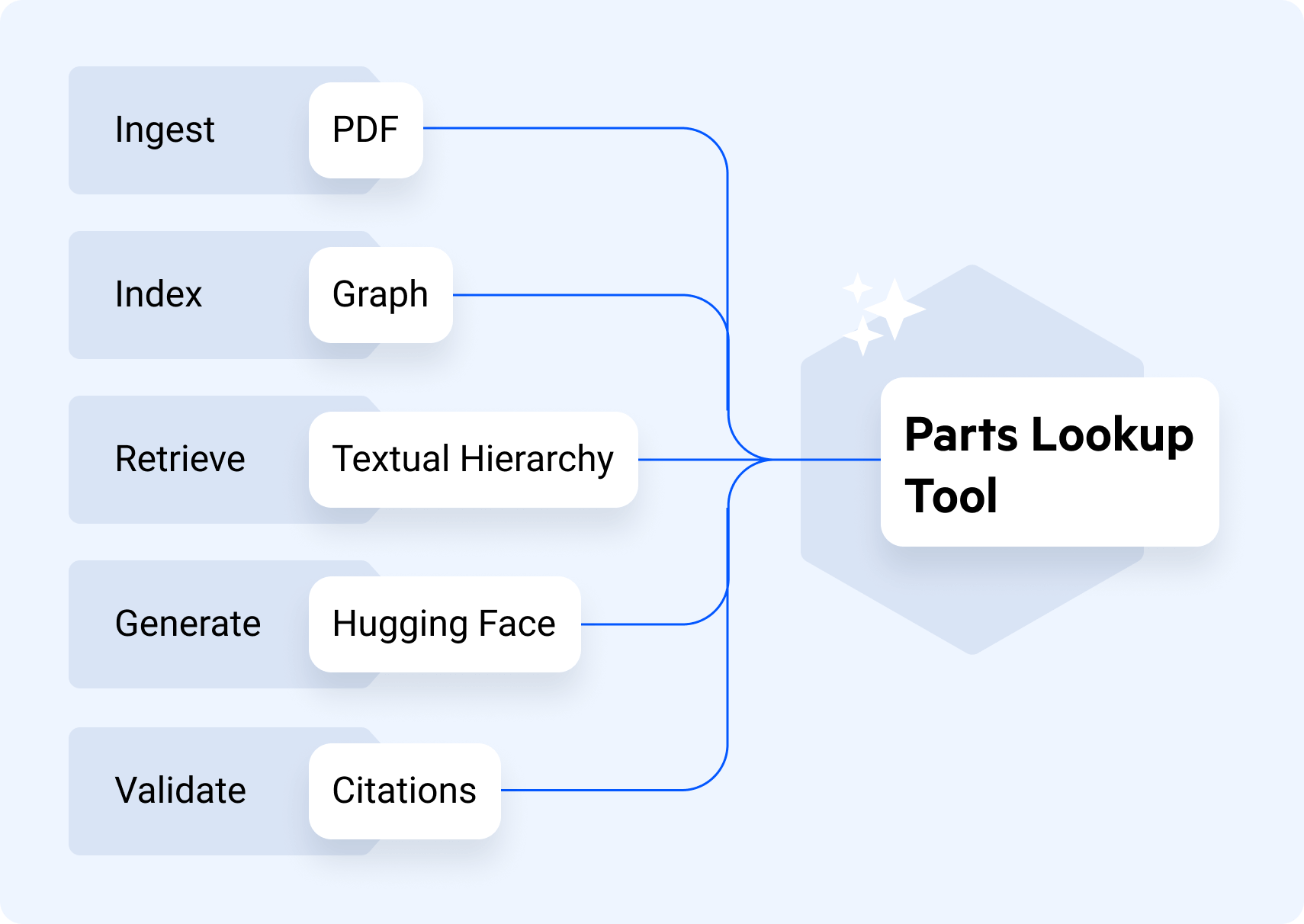

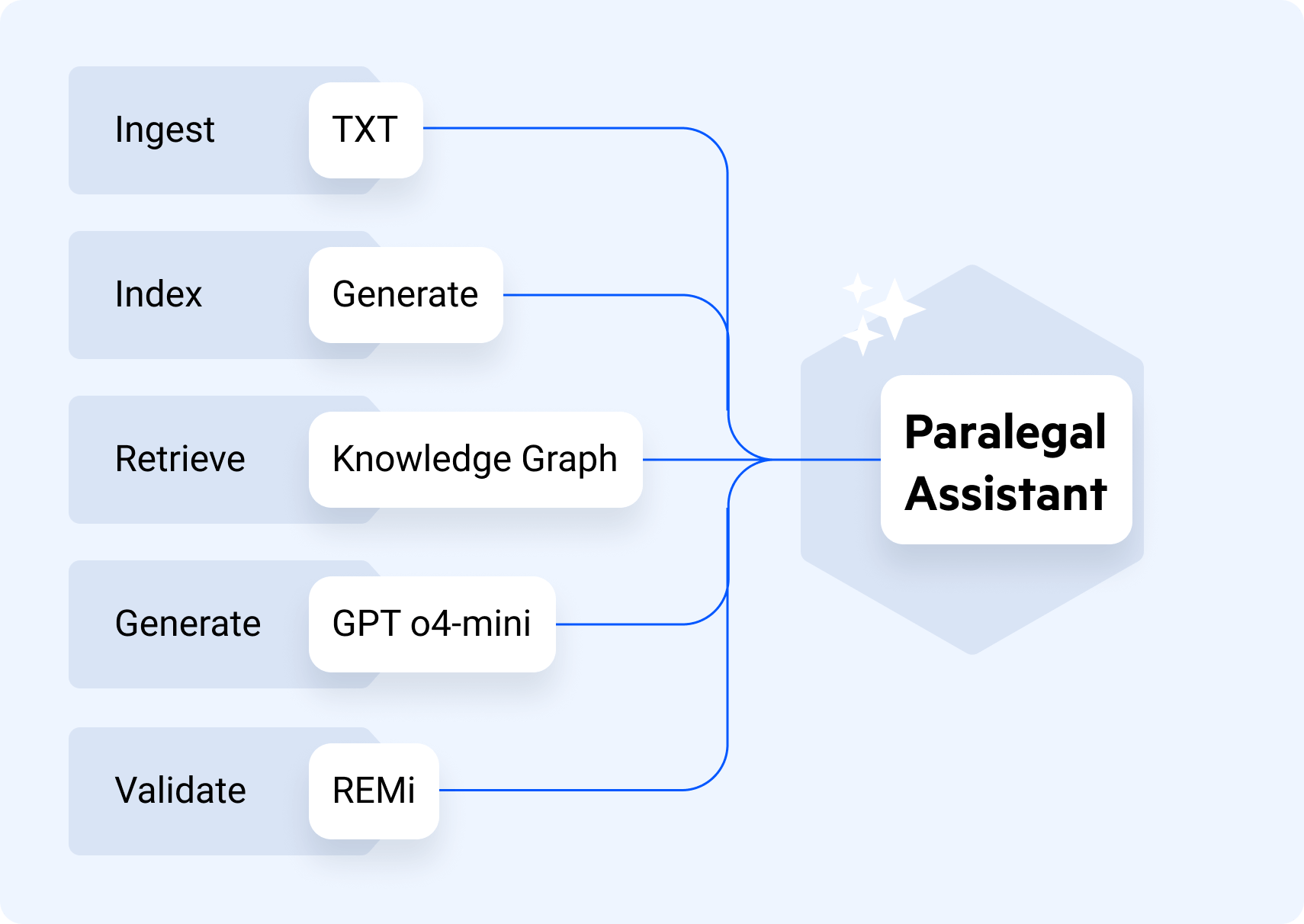

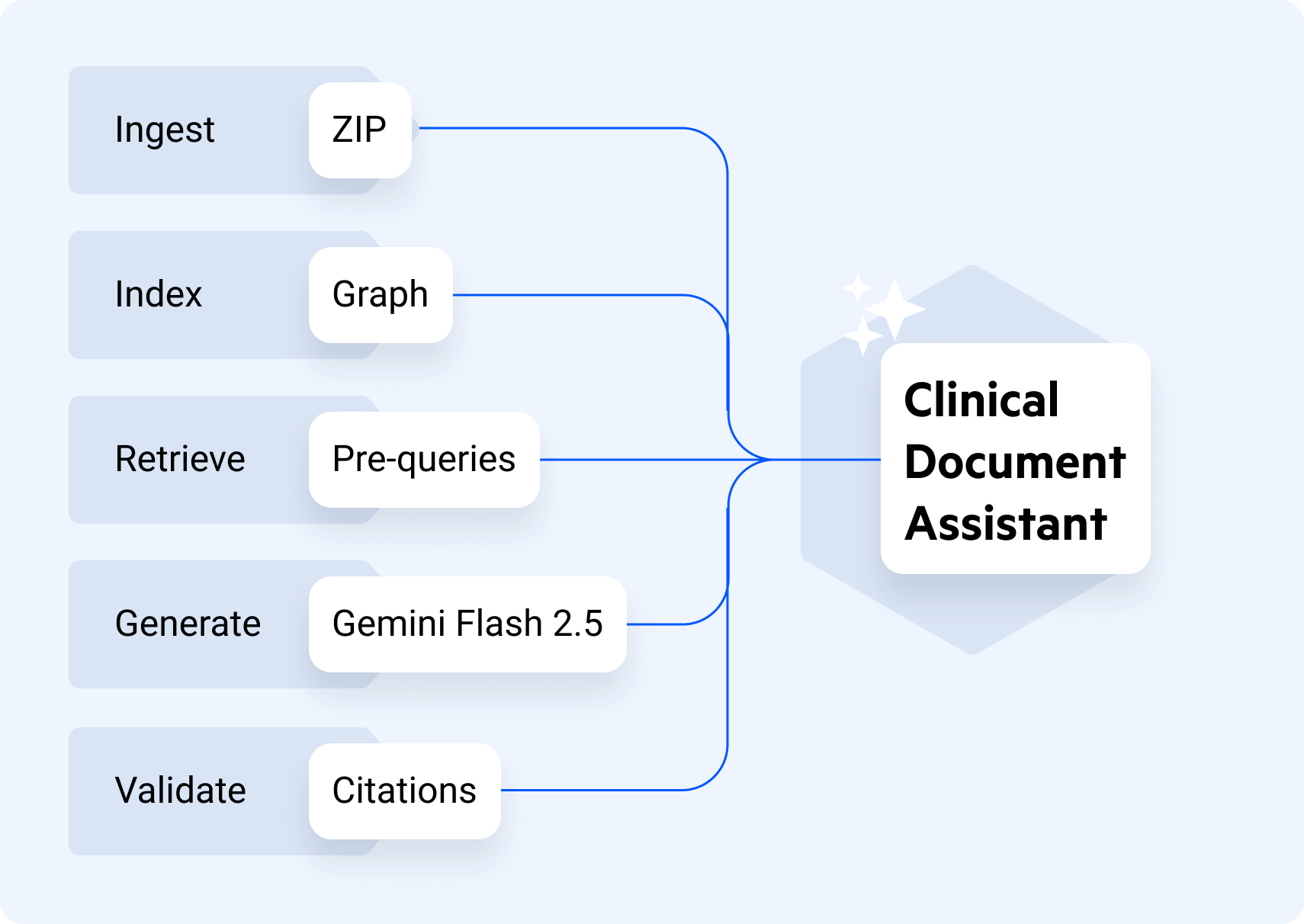

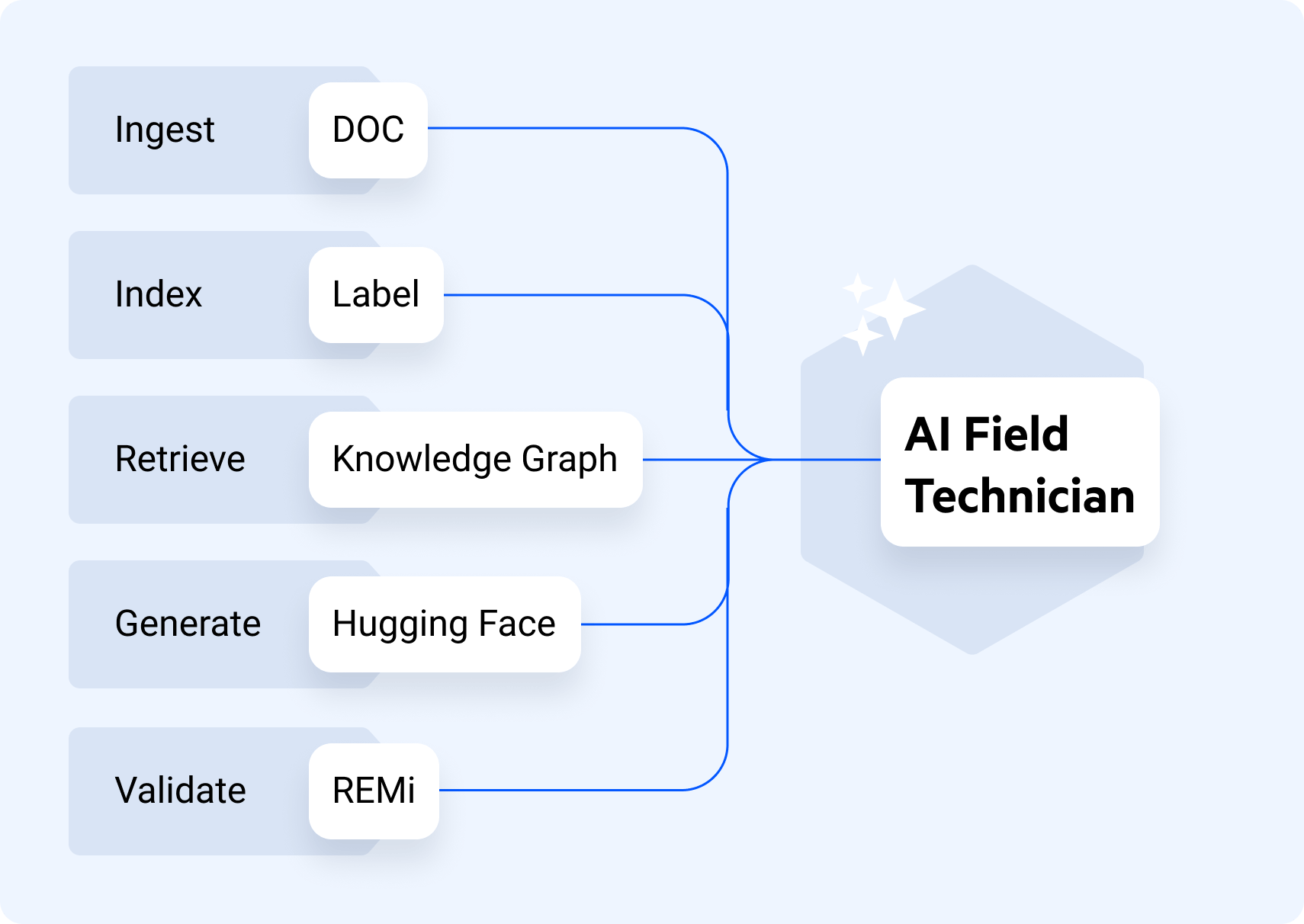

The agentic Retrieval-Augmented Generation (RAG) technology embedded in the solution gives you the flexibility to evolve your generative AI (GenAI) pipeline over time, adapting models, workflows and quality controls without re-architecting or starting from scratch, through:

- Discrete modules for ingestion, retrieval, LLM selection and validation

- LLM-agnostic design that supports commercial, open-source and domain-specific models

- One core pipeline foundation that can scale for new AI use cases

- Validation layers that help maintain accuracy, governance and trust at scale

- Adaptability as models and technologies change, so long-term investments are protected
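To make the idea of discrete, swappable modules more concrete, here is a minimal Python sketch. It is not the Progress Agentic RAG API: `RagPipeline`, `keyword_retriever`, `first_passage_generator` and `answer_in_context` are hypothetical names, and each stage is reduced to a plain function so that any one of them can be replaced without touching the others.

```python
import string
from dataclasses import dataclass, replace
from typing import Callable, List

# Hypothetical stage signatures: each stage is a plain callable,
# so any one of them can be swapped without rebuilding the others.
Retriever = Callable[[str], List[str]]
Generator = Callable[[str, List[str]], str]
Validator = Callable[[str, List[str]], bool]

# Toy corpus standing in for an indexed knowledge base.
CORPUS = [
    "Invoices are due within 30 days.",
    "Refunds are processed within 5 business days.",
]


def _keywords(text: str) -> set:
    # Lowercase, strip surrounding punctuation and drop very short words.
    words = (w.strip(string.punctuation) for w in text.lower().split())
    return {w for w in words if len(w) > 3}


def keyword_retriever(question: str) -> List[str]:
    """Toy retriever: return passages that share a keyword with the question."""
    q = _keywords(question)
    return [p for p in CORPUS if q & _keywords(p)]


def first_passage_generator(question: str, passages: List[str]) -> str:
    """Stand-in for an LLM call: echo the top passage."""
    return passages[0] if passages else ""


def answer_in_context(answer: str, passages: List[str]) -> bool:
    """Toy validator: accept only non-empty answers found verbatim in the context."""
    return bool(answer) and any(answer in p for p in passages)


@dataclass(frozen=True)
class RagPipeline:
    retrieve: Retriever
    generate: Generator
    validate: Validator

    def answer(self, question: str) -> str:
        passages = self.retrieve(question)
        draft = self.generate(question, passages)
        # Only answers that pass the validation stage are returned.
        if not self.validate(draft, passages):
            return "No grounded answer found."
        return draft


pipeline = RagPipeline(keyword_retriever, first_passage_generator, answer_in_context)
print(pipeline.answer("When are invoices due?"))  # prints: Invoices are due within 30 days.
```

Because each stage is an independent field, `dataclasses.replace(pipeline, generate=...)` swaps only the generator and keeps the same retriever and validator; if the new generator produces text that is not in the retrieved context, the validation stage rejects it.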

Key Benefits

Accelerate Time to Value Across AI Use Cases

Reduce Risk and Cost with a Future-Ready Architecture

Build Multiple AI Solutions with One Unified Pipeline

Technical Specifications

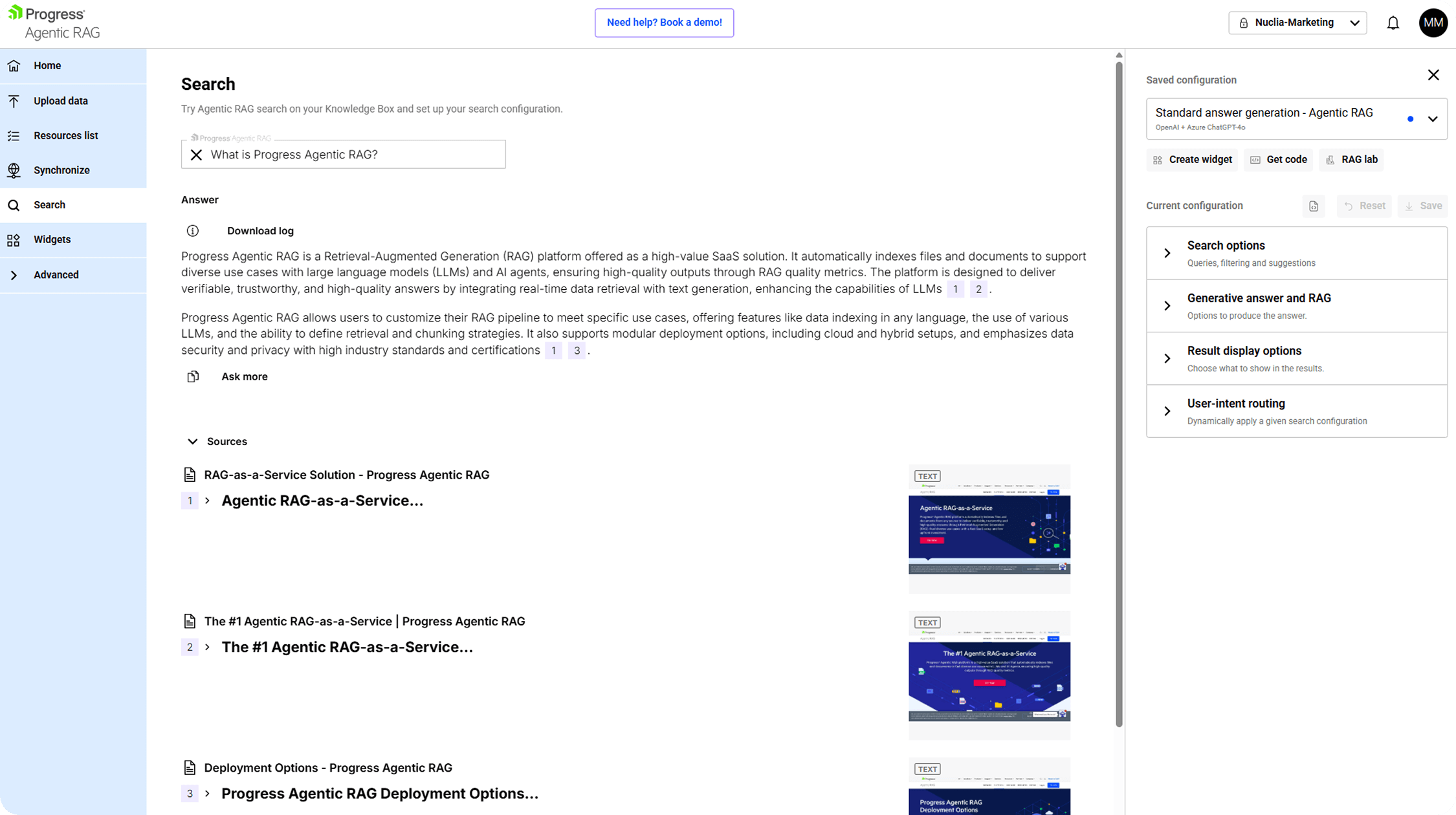

The Progress Agentic RAG solution supports more than 30 retrieval strategies, including semantic search, exact match, neighboring paragraph and knowledge-graph hops, allowing customers to tune retrieval for every use case. Each retrieval module can be adjusted independently, giving fine-grained control over relevance, precision and cost.

The solution also lets you swap in different LLMs, such as models from OpenAI and Anthropic or fine-tuned internal models, without reindexing content or rewriting pipelines. This protects your AI strategy: you can adopt new models as they emerge while maintaining performance, privacy and budgetary controls.
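One common way to keep a pipeline LLM-agnostic is to put every model behind the same function signature and select it by name, so retrieved passages and the index never depend on which model answers. The sketch below assumes that pattern; `vendor_a`, `internal_model` and the `MODELS` registry are hypothetical placeholders, not real vendor SDK calls or the product's configuration.

```python
from typing import Callable, Dict, List

# Hypothetical backends. Real ones would call a vendor SDK or a self-hosted
# model; here they just echo the last prompt line so the example runs offline.
def vendor_a(prompt: str) -> str:
    return "[vendor-a] " + prompt.splitlines()[-1]


def internal_model(prompt: str) -> str:
    return "[internal] " + prompt.splitlines()[-1]


# Registry keyed by a model name, e.g. one read from configuration.
MODELS: Dict[str, Callable[[str], str]] = {
    "vendor-a": vendor_a,
    "internal-finetune": internal_model,
}


def build_prompt(question: str, passages: List[str]) -> str:
    """Assemble a model-independent prompt from retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\nQuestion: {question}"


def ask(question: str, passages: List[str], model_name: str) -> str:
    # The passages come from the existing index; only the generator changes.
    generate = MODELS[model_name]
    return generate(build_prompt(question, passages))


passages = ["Invoices are due within 30 days."]
print(ask("When are invoices due?", passages, "vendor-a"))
print(ask("When are invoices due?", passages, "internal-finetune"))
```

Switching models here means changing one string; the prompt construction and the retrieved passages stay identical, which is why no reindexing is needed in this design.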

The no/low-code environment within the solution lets teams configure ingestion pipelines, retrieval settings and LLM selection. Technical and business users alike can iterate quickly and launch new assistants or search workflows in days, reducing pressure on engineering teams.

The solution’s pipeline begins with a sophisticated ingestion layer that includes Optical Character Recognition (OCR), speech-to-text, table extraction, entity recognition, semantic segmentation and more. This intelligent data processing creates cleaner, richer, more structured content for retrieval and reasoning.
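Segmentation determines which units of text retrieval can later return. The sketch below shows a deliberately simple version: it splits extracted text on blank lines and packs whole paragraphs into chunks up to a size limit. This is structural chunking used as a stand-in; the solution's semantic segmentation, OCR, speech-to-text, table extraction and entity recognition are not modeled, and `segment` and `max_chars` are hypothetical names.

```python
import re
from typing import List


def segment(text: str, max_chars: int = 200) -> List[str]:
    """Pack whole paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own chunk rather
    than being split mid-sentence.
    """
    # Paragraphs are separated by one or more blank lines.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: List[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


doc = "Alpha one.\n\nBeta two.\n\nGamma three."
print(segment(doc, max_chars=25))  # prints: ['Alpha one.\n\nBeta two.', 'Gamma three.']
```

Keeping paragraphs intact means each chunk stays readable on its own, which also makes neighboring-paragraph retrieval straightforward because chunk order follows document order.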

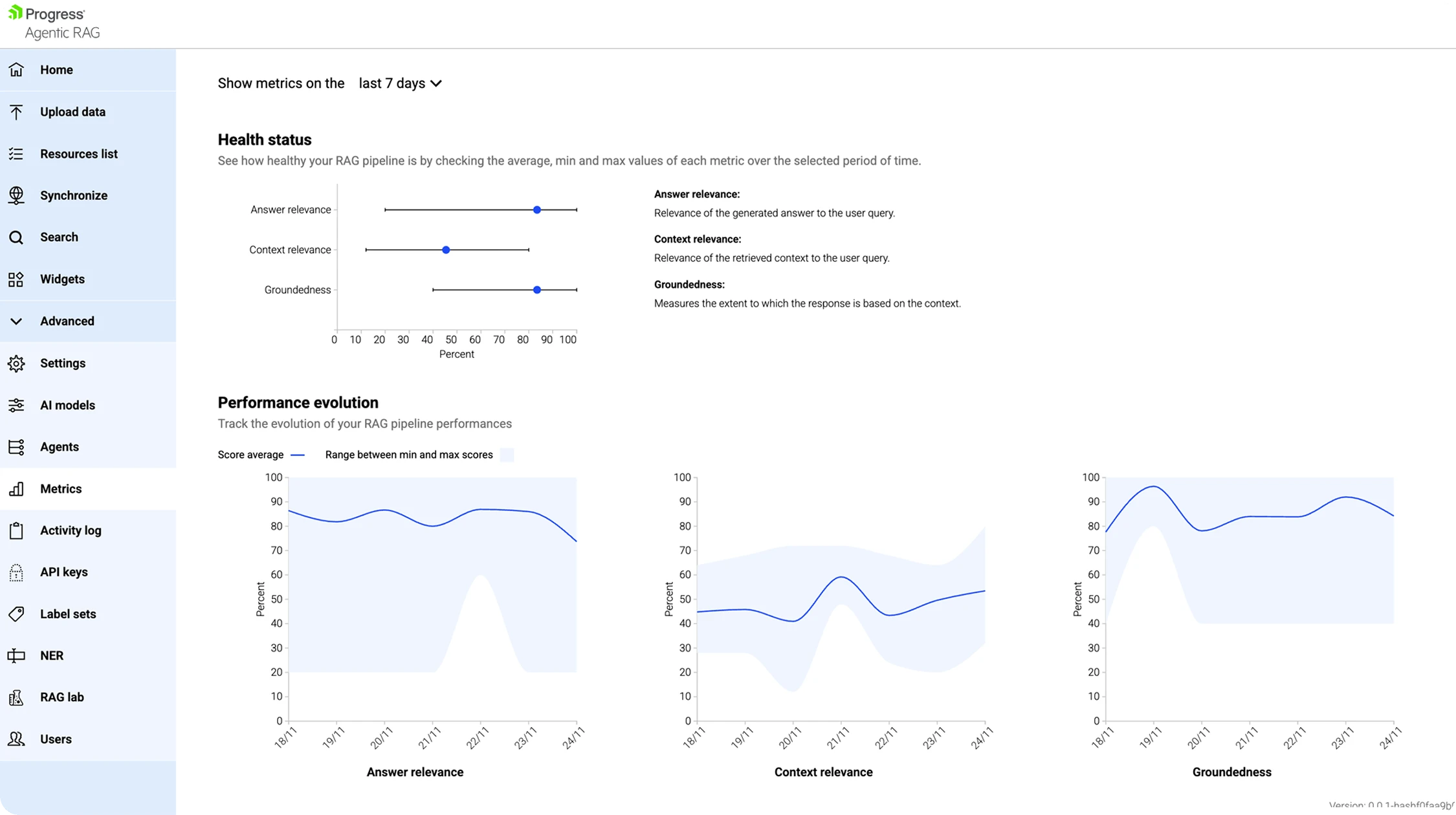

Quality and trust are built into the pipeline through agentic validation modules that confirm groundedness, check context and assess accuracy using built-in REMi metrics. As a result, every answer is transparent, traceable and aligned with enterprise quality standards.
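To illustrate what a groundedness check looks for, here is a crude lexical heuristic: it flags answer sentences whose words are mostly absent from the retrieved context. This is explicitly not the REMi metrics, whose definitions are not given here; `ungrounded_sentences` and its `threshold` are hypothetical, and production validators typically rely on model-based judgments rather than word overlap.

```python
import re
from typing import List


def _words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ungrounded_sentences(answer: str, context: List[str], threshold: float = 0.6) -> List[str]:
    """Return answer sentences whose word coverage by the context is below threshold."""
    # Vocabulary supported by any retrieved passage.
    support = set().union(*(_words(c) for c in context))
    flagged = []
    # Naive sentence split on terminal punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _words(sentence)
        if not words:
            continue
        coverage = len(words & support) / len(words)
        if coverage < threshold:
            flagged.append(sentence)
    return flagged


context = ["Invoices are due within 30 days."]
answer = "Invoices are due within 30 days. Late payments incur a 5% fee."
print(ungrounded_sentences(answer, context))  # prints: ['Late payments incur a 5% fee.']
```

Flagging at sentence level, rather than scoring the whole answer, makes the result traceable: each flagged sentence points to a specific claim that the retrieved context does not support.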

Ready to Get Started?

Index files and documents from internal and external sources, and fuel your company's LLM use cases with high-quality RAG-as-a-Service.