GenAI on Your Terms

Gain choice, control and cost efficiency with an all-in-one RAG pipeline. Choose the optimal Large Language Model (LLM) for each workflow. Reduce token costs, improve accuracy and scale safely with built-in governance, quality metrics and model-level flexibility.

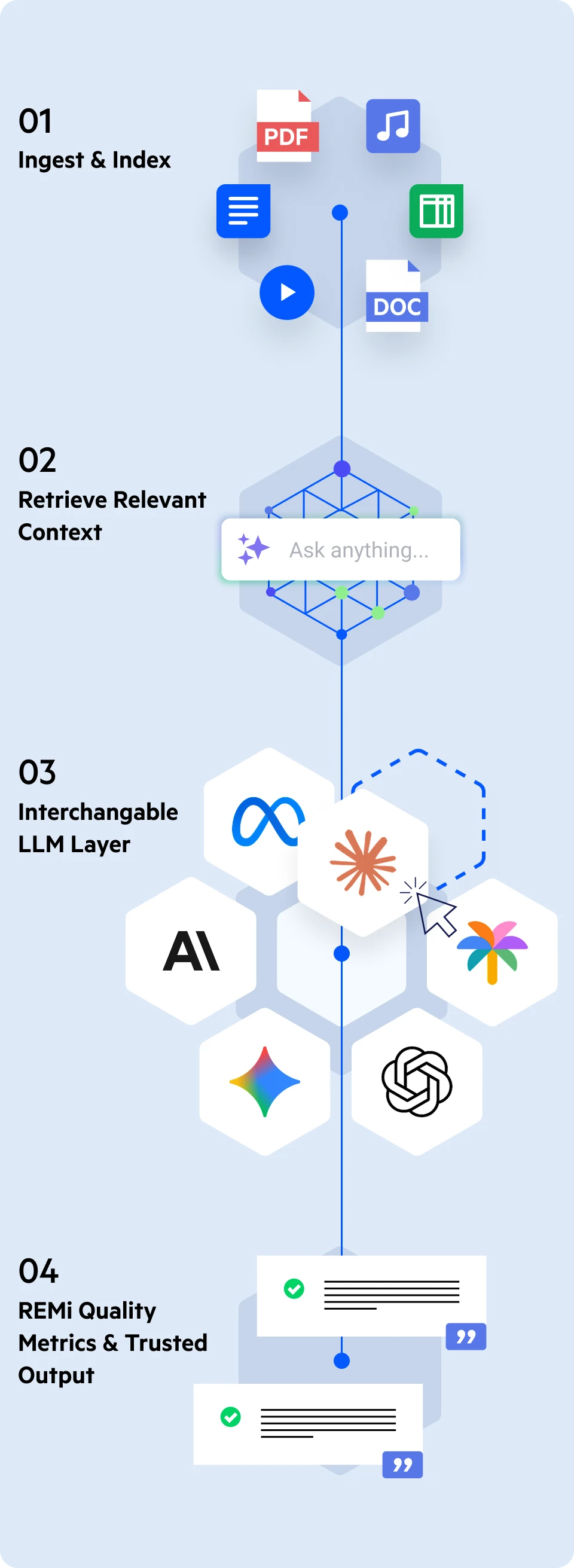

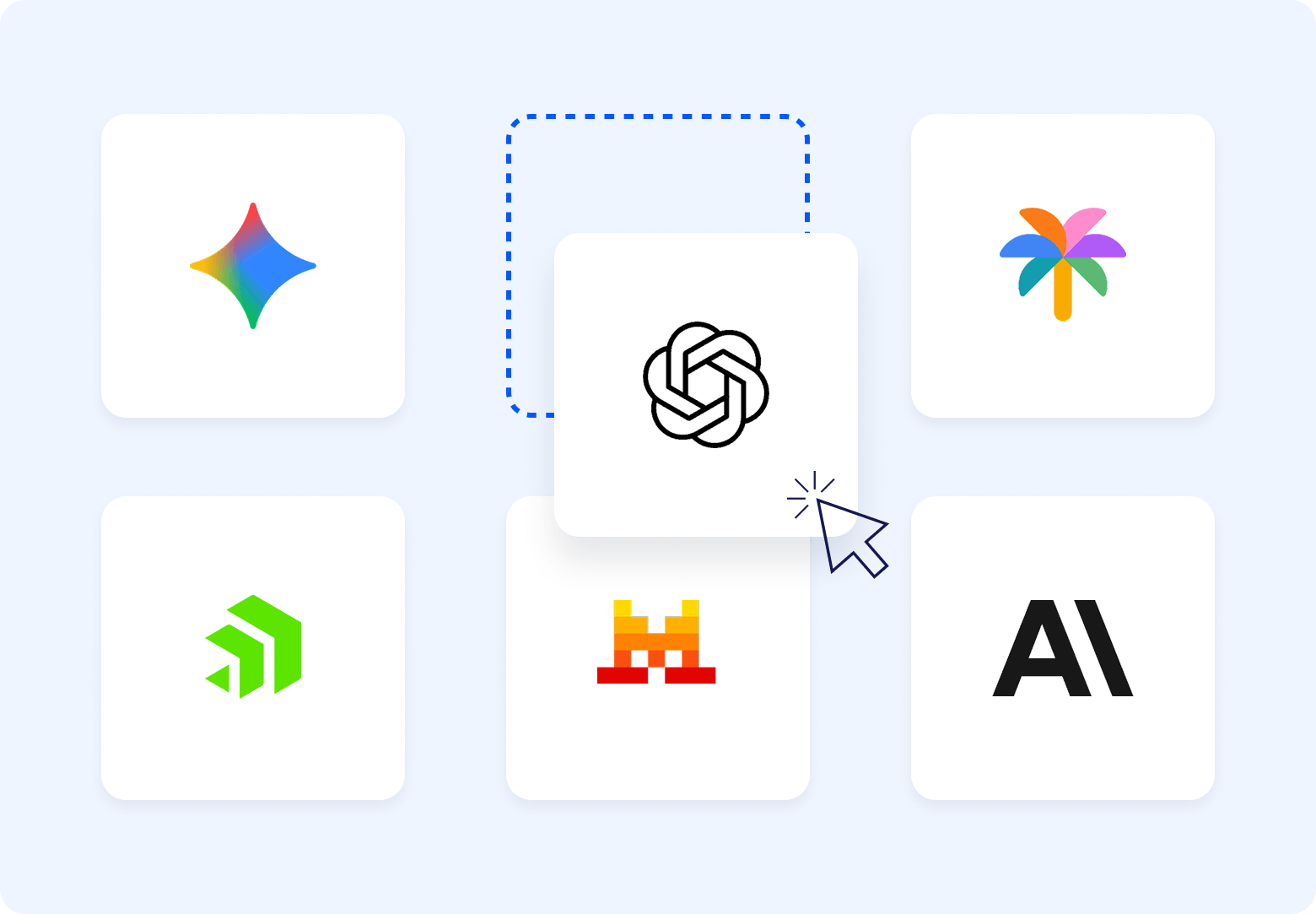

LLM-Agnostic by Design

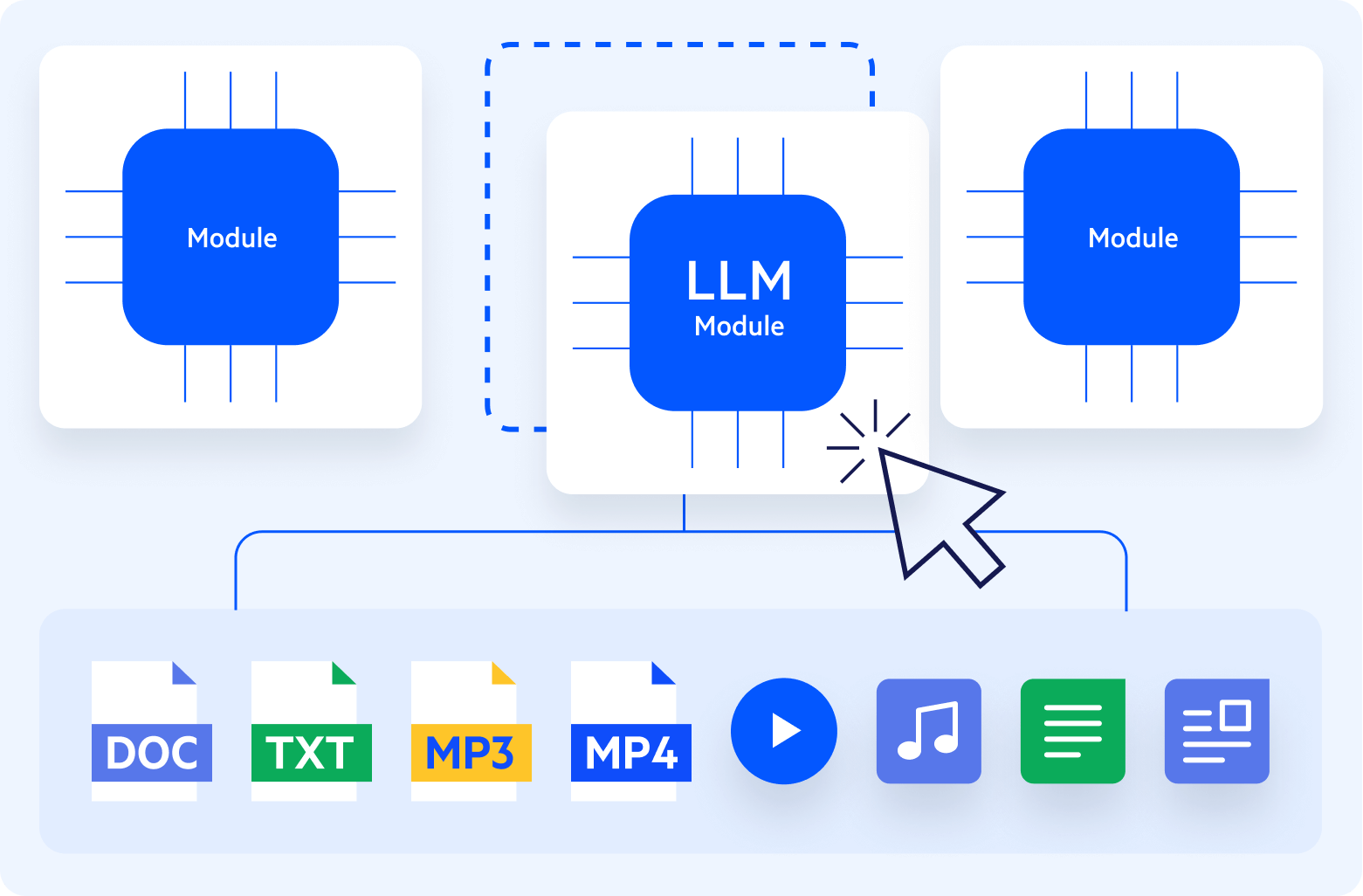

The Progress® Agentic RAG solution lets you choose the best language model for each workflow without rebuilding your pipeline. Because the retrieval, chunking and validation layers are decoupled from the model, you can switch between models on demand. Whether you’re optimizing for price, accuracy, privacy or performance, you get full flexibility with no reindexing or engineering rework, giving you a future-ready AI foundation.
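To illustrate the decoupling described above, here is a minimal Python sketch. It is not the product's API: the `LanguageModel` protocol, the `EchoModel` stand-in and the `Pipeline` class are hypothetical names, and keyword overlap is used as a placeholder for real retrieval. The point is only that the index is built once and the model behind it can be swapped without touching it.

```python
from dataclasses import dataclass
from typing import Protocol


class LanguageModel(Protocol):
    """Hypothetical interface: anything with a generate() method can be plugged in."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for a real LLM client; it only tags the prompt with its name."""

    name: str

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class Pipeline:
    index: dict[str, str]  # built once, independent of the model
    model: LanguageModel   # swappable at any time

    def retrieve(self, query: str) -> list[str]:
        # Placeholder retrieval: keep passages sharing at least one word with the query.
        words = set(query.lower().split())
        return [text for text in self.index.values() if words & set(text.lower().split())]

    def answer(self, query: str) -> str:
        context = " ".join(self.retrieve(query))
        return self.model.generate(f"Context: {context} Question: {query}")


pipeline = Pipeline(
    index={"a": "pricing starts monthly", "b": "support is global"},
    model=EchoModel("model-a"),
)
first = pipeline.answer("pricing")
pipeline.model = EchoModel("model-b")  # swap the model; the index is untouched
second = pipeline.answer("pricing")
```

In this sketch both answers are built from the same retrieved context; only the component that generates the final text changes.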

Delivers Trusted, Future-Ready AI on Your Terms

A secure, verifiable AI backbone that adapts to your models, your workflows and your compliance needs today and in the future.

LLM-Agnostic Architecture

- Use a wide variety of LLMs without reindexing or rebuilding workflows

- Swap models at any time based on cost, accuracy, performance or governance requirements

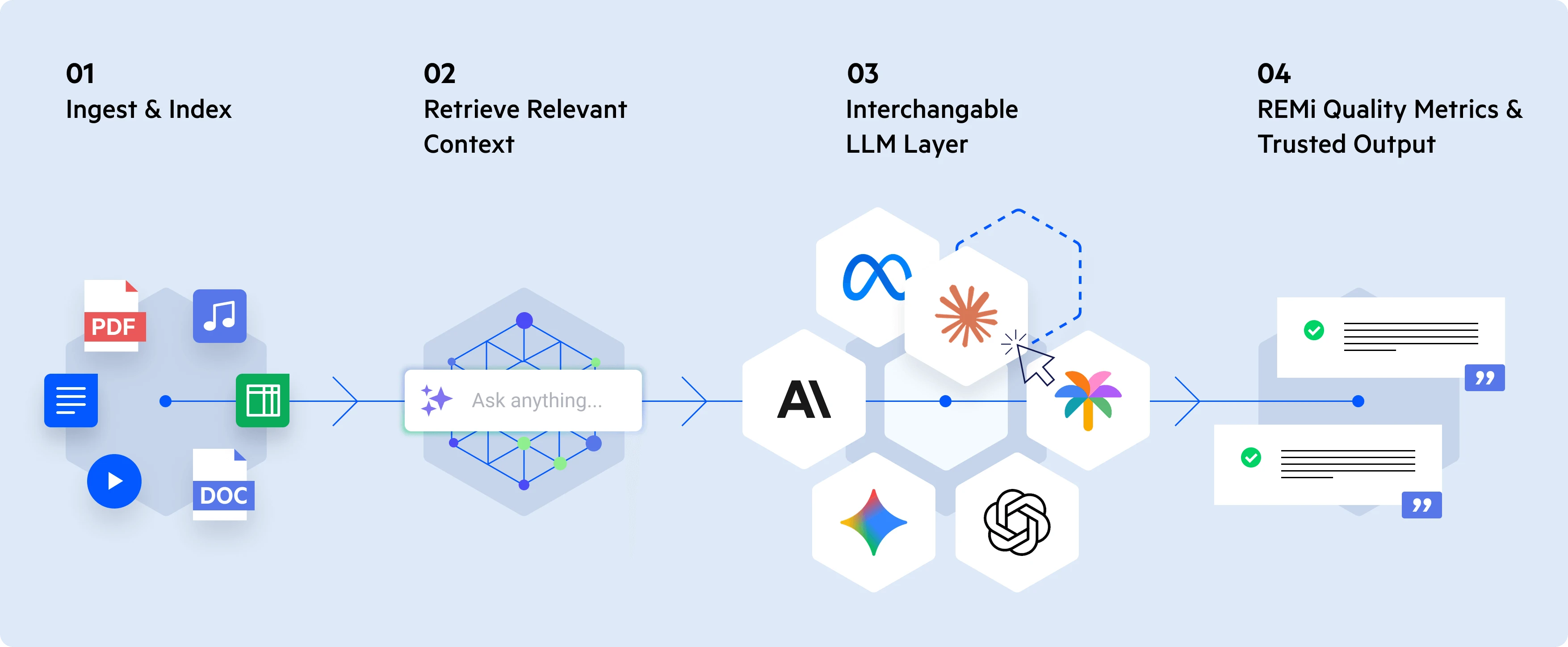

Modular, End-to-End Retrieval-Augmented Generation (RAG) Pipeline

- Deploy AI experiences quickly and adapt them as needs evolve with a fully integrated, no/low-code pipeline that handles ingestion, indexing, retrieval and generation

- Independently configure content ingestion, semantic chunking, retrieval logic, model invocation and quality controls


Advanced Retrieval Strategies

With 30+ retrieval strategies, the Progress Agentic RAG solution is designed to return the most relevant and trustworthy information for any query. From semantic search to neighboring paragraph retrieval, these strategies help optimize the context fed to the LLM for accuracy.
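As a rough illustration of what neighboring paragraph retrieval means, the Python sketch below finds the best-matching paragraph and returns it together with the paragraphs around it. The function name, the `window` parameter and the keyword-overlap scoring are assumptions made for this example; a production system would typically rank with embeddings rather than shared words.

```python
def retrieve_with_neighbors(paragraphs: list[str], query: str, window: int = 1) -> list[str]:
    """Return the best-matching paragraph plus `window` paragraphs on each side."""
    if not paragraphs:
        return []

    query_terms = set(query.lower().split())

    def score(paragraph: str) -> int:
        # Assumed scoring: number of query words that also appear in the paragraph.
        return len(query_terms & set(paragraph.lower().split()))

    best = max(range(len(paragraphs)), key=lambda i: score(paragraphs[i]))
    if score(paragraphs[best]) == 0:
        return []  # nothing matched, so no context is returned

    start = max(0, best - window)
    end = min(len(paragraphs), best + window + 1)
    return paragraphs[start:end]
```

Returning the neighbors along with the hit gives the model the sentences that introduce and follow the matched passage, which is often where the qualifying details sit.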

Semantic Chunking & Smart Segmentation

Instead of breaking documents into arbitrary, fixed-length slices, the solution uses semantic chunking and smart segmentation to preserve meaning, structure and context across formats like PDFs, videos, audio files and docs. The system automatically identifies natural boundaries like paragraphs, sentences, topics and timestamps to create precise, contextually coherent chunks.
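The difference from fixed-length slicing can be sketched in a few lines of Python. This example is a simplification and not the product's implementation: it only respects paragraph and sentence boundaries in plain text, and the function name and `max_chars` limit are hypothetical. Topic detection and timestamp handling for audio or video are out of scope here.

```python
import re


def chunk_by_boundaries(text: str, max_chars: int = 200) -> list[str]:
    """Split text into chunks that never cross a paragraph and never cut a sentence."""
    chunks: list[str] = []
    # Paragraphs are assumed to be separated by blank lines.
    for paragraph in re.split(r"\n\s*\n", text.strip()):
        # Sentences are assumed to end with ., ! or ? followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", paragraph.strip())
        current = ""
        for sentence in sentences:
            if current and len(current) + 1 + len(sentence) > max_chars:
                chunks.append(current)  # close the chunk at a sentence boundary
                current = sentence
            else:
                current = f"{current} {sentence}".strip()
        if current:
            chunks.append(current)
    return chunks
```

A sentence longer than `max_chars` is kept whole in this sketch rather than being cut, which trades a strict size limit for intact meaning.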

AI Quality & Trust

The Progress Agentic RAG solution delivers end-to-end transparency for every AI-generated answer through source citations, retrieval logs and governance-ready audit trails. Each output includes a clear citation pointing back to the exact sentence, paragraph or timestamp used, enabling users to verify accuracy instantly.
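One way to picture a verifiable citation is as a small record that points at a specific location in a source, which can then be checked automatically. The Python sketch below is illustrative only: the `Citation` and `Answer` classes, their fields and the `unverified` helper are assumed names, not the product's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    source_id: str  # which document the claim comes from
    paragraph: int  # zero-based paragraph index within that document
    quote: str      # exact text the answer relies on


@dataclass(frozen=True)
class Answer:
    text: str
    citations: tuple[Citation, ...]


def unverified(answer: Answer, sources: dict[str, list[str]]) -> list[Citation]:
    """Return citations whose quote cannot be found at the cited location."""
    missing = []
    for citation in answer.citations:
        paragraphs = sources.get(citation.source_id, [])
        in_range = 0 <= citation.paragraph < len(paragraphs)
        if not in_range or citation.quote not in paragraphs[citation.paragraph]:
            missing.append(citation)
    return missing
```

An empty result means every citation resolves to text that actually exists in the cited source, which is the kind of check an audit trail can record alongside each answer.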


Ready to Get Started?

Index files and documents from internal and external sources to power your company's use cases.