REMi: Better Retrieval. Better Answers. Provable Results.

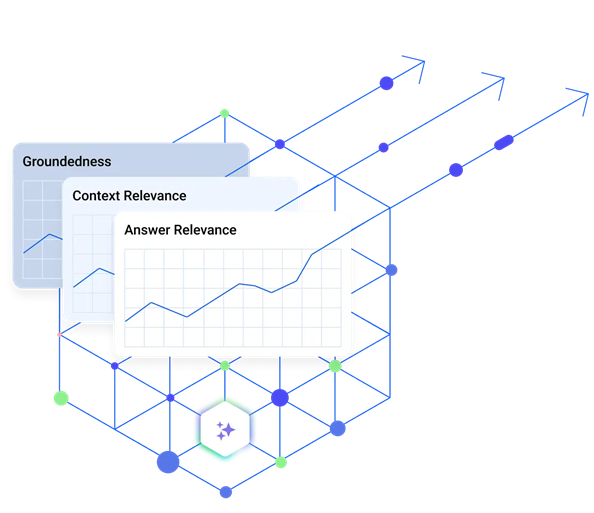

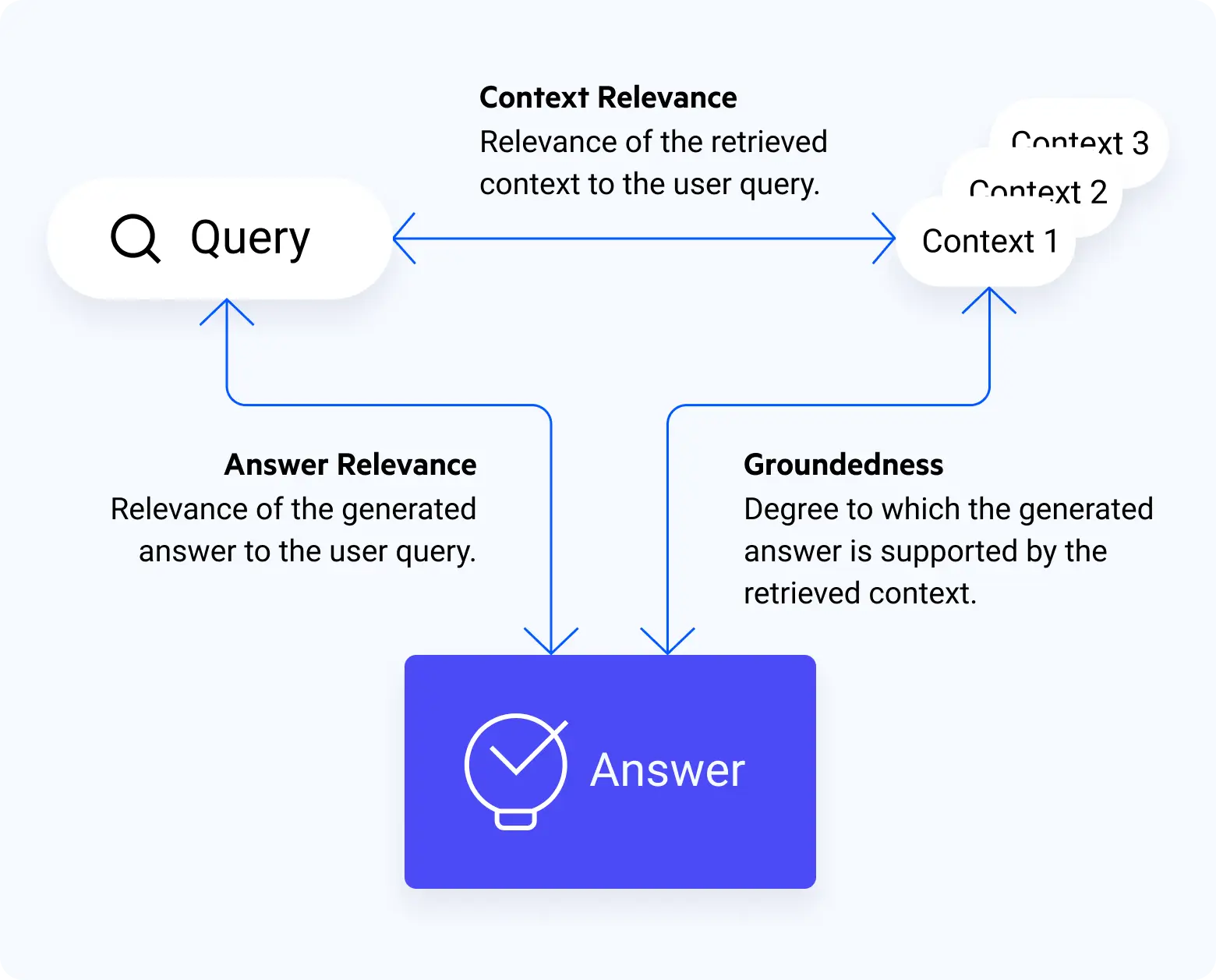

The RAG Evaluation Metrics (REMi) model brings objective evaluation to every step of the RAG pipeline so you can measure context quality, answer quality and groundedness of responses.

What Is REMi?

REMi gives business leaders clear, objective confidence in AI responses by measuring how relevant, grounded and contextually accurate each answer is against the organization's own knowledge. Built specifically for retrieval-augmented generation (RAG), it continuously monitors answer quality, highlights knowledge gaps and provides audit-ready analysis, so AI can be trusted and scaled across the organization.

Key Capabilities

Trust You Can Measure

REMi replaces guesswork with clear signals that show when AI answers are substantiated and dependable.

Reliable AI That Gets Better, Not Riskier

- Builds confidence by verifying that answers are relevant, source-aligned and supported by the right context

- Continuously tracks performance over time so improvements are visible and provable

Objective Confidence in Every Answer

- Replaces subjective judgment with clear signals that show when answers are relevant and reliable

- Enables teams to scale AI usage knowing answer quality is continuously validated

Key Benefits

Answer Audit Every Time

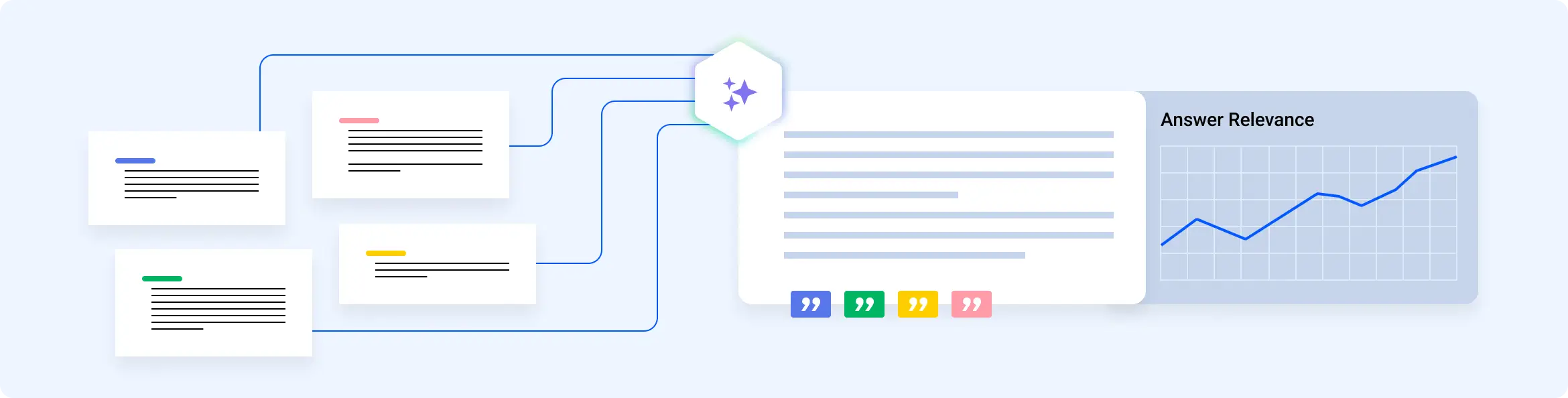

The Progress® Agentic RAG solution enables reviewable, traceable AI responses by showing how each answer was generated, what context supported it and how well it was grounded, so teams can audit outcomes, support accountability and stand behind AI-driven decisions.

AI That Says “No”

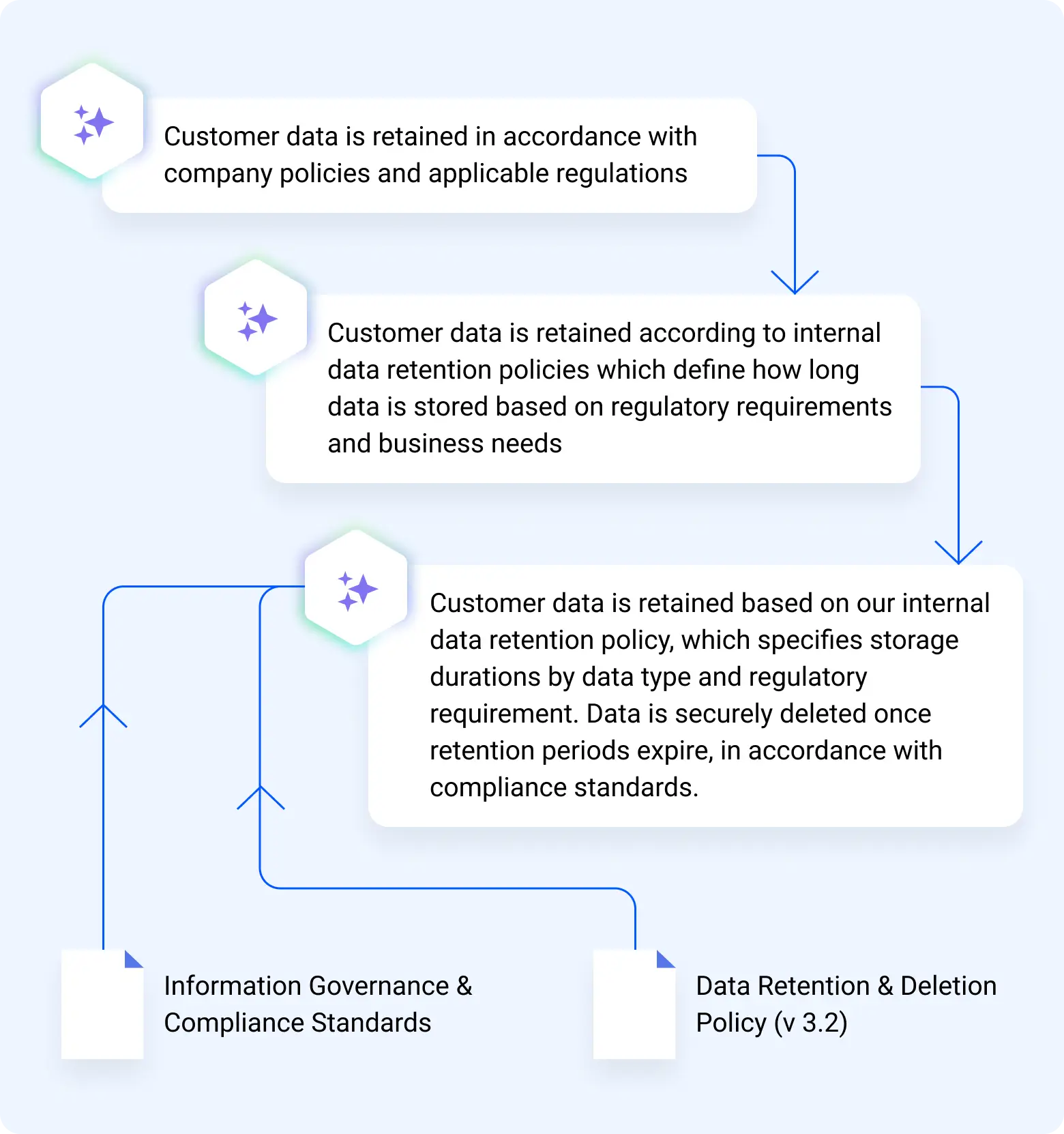

REMi surfaces questions that lack sufficient context and lets users flag low-quality answers, so the system guesses less when knowledge is missing. By recognizing uncertainty and incorporating feedback, AI responses become more trustworthy and safer to use over time.

Evidence-Based RAG Optimization

REMi turns evaluation insights into action by showing what to refine across the RAG pipeline, from retrieval strategies and embeddings to model and prompt selection. With a modular architecture and no/low-code tooling, teams can easily test and tune changes using Prompt and RAG Labs, improving answer quality through measured iteration instead of trial and error.

Ready to Get Started?

Index files and documents from internal and external sources to fuel your company's use cases.