Progress Agentic RAG Features

With agent features like a specialized RAG evaluation model, Named Entity Recognition, an AI Search Assistant and customizable prompts, you can tailor our agentic RAG pipeline to generate accurate, explainable and enterprise-ready answers in a few hours.

AI Search and Generative Answers

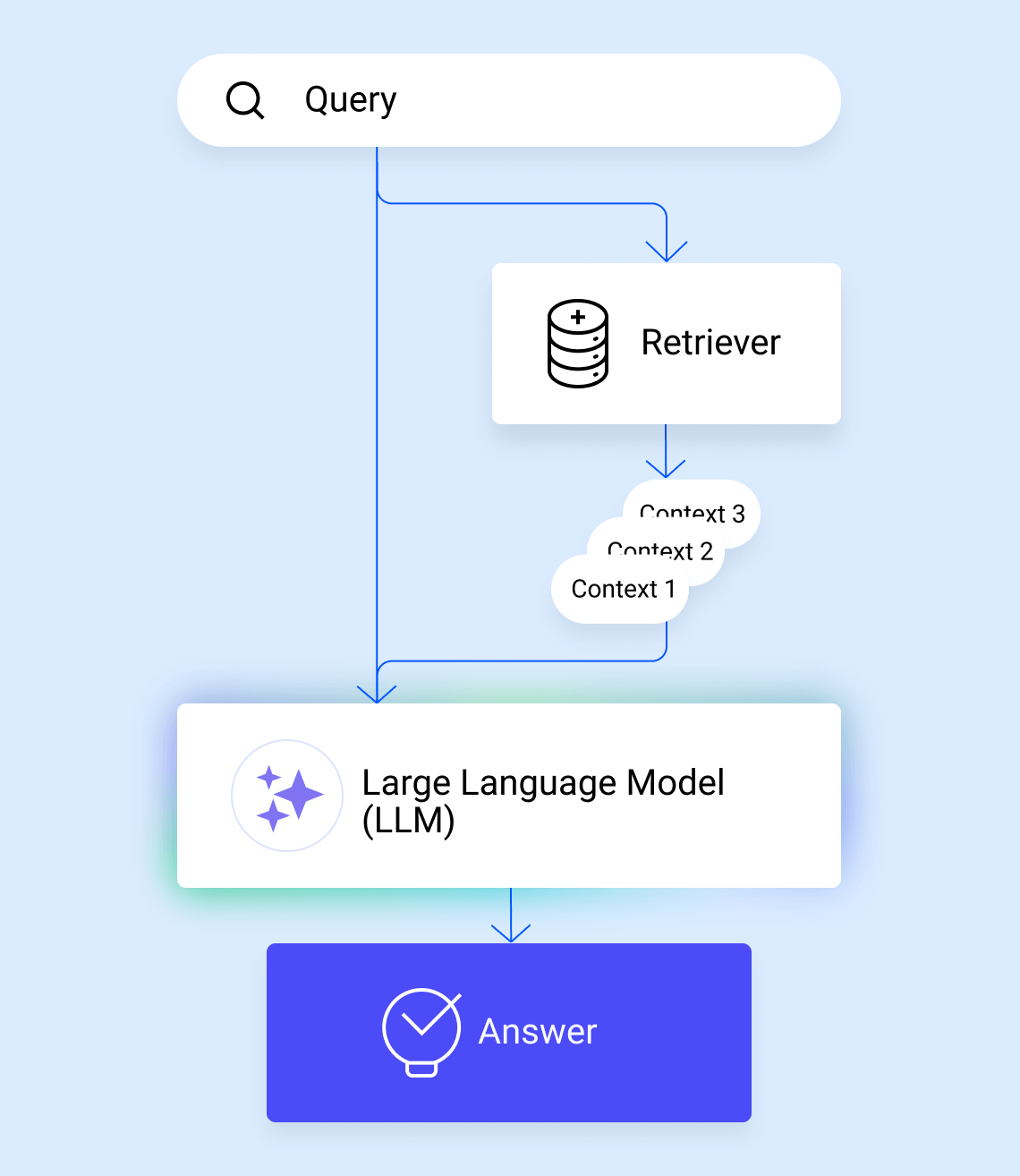

Progress® Agentic RAG AI Search enhances traditional search functionality by integrating semantic analysis and large language model capabilities. When a user submits a query, the Progress Agentic RAG platform semantically interprets it to identify both keyword relevance and contextual meaning. It then retrieves the most pertinent indexed data and sends that context to an LLM to generate a precise, human-like response. The system provides transparency by displaying the exact data sources used in the answer, and if no relevant data is found, it refrains from generating a response. This approach maintains accuracy and avoids the hallucinations so common in generative outputs.
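The flow described above (interpret the query, retrieve context, generate an answer with its sources, and refuse when nothing relevant is found) can be sketched in a few lines of Python. This is a minimal illustration only, not the platform's implementation: `Passage`, `retrieve`, `fake_llm`, `answer` and the `min_overlap` threshold are hypothetical names, token overlap stands in for real semantic analysis, and the "LLM" is a stub that echoes the top passage.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical document identifier
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for semantic analysis."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, index: list[Passage], min_overlap: int = 2) -> list[Passage]:
    """Return passages sharing at least `min_overlap` tokens with the query, best first."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p.text)), p) for p in index]
    hits = [(score, p) for score, p in scored if score >= min_overlap]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in hits]


def fake_llm(query: str, context: list[Passage]) -> str:
    """Placeholder for a real LLM call (assumption): echoes the top passage."""
    return context[0].text


def answer(query: str, index: list[Passage]) -> dict:
    """Answer from retrieved context and cite sources; refuse if nothing is relevant."""
    context = retrieve(query, index)
    if not context:
        return {"answer": None, "sources": []}
    return {"answer": fake_llm(query, context), "sources": [p.source for p in context]}


index = [
    Passage("hr.pdf", "Employees accrue 20 vacation days per year."),
    Passage("it.md", "Reset your password from the login page."),
]
grounded = answer("How many vacation days do employees get?", index)
refused = answer("What is the cafeteria menu?", index)
```

Here `grounded` carries both the answer text and the list of sources it came from, while `refused` has no answer because no passage cleared the relevance threshold.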

Explore Generative AI

Named Entity Recognition

Named Entity Recognition technology is a natural language processing method that classifies entities (terms) into predefined categories, such as names, dates, locations, organizations, etc. With Progress Agentic RAG out-of-the-box capabilities, you can define your own Named Entities from 16 types and automatically index them to generate your own Knowledge Graph from your data.
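To make the idea of classifying terms into predefined categories concrete, here is a toy, rule-based sketch. It is not how the platform performs NER: the `GAZETTEER` entries, the ISO-date pattern, and the `extract_entities` and `to_graph_edges` helpers are all assumptions made for illustration, and production systems typically rely on trained models rather than lookups.

```python
import re

# Hypothetical user-defined entities: surface form -> category.
GAZETTEER = {
    "Progress": "ORGANIZATION",
    "Madrid": "LOCATION",
    "Ada Lovelace": "PERSON",
}
# Dates in ISO form only (YYYY-MM-DD), to keep the example small.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_entities(text: str) -> list[tuple[str, str]]:
    """Return (entity, category) pairs in order of appearance."""
    found = []
    for name, category in GAZETTEER.items():
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((match.start(), name, category))
    for match in DATE_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    found.sort()  # order by position in the text
    return [(name, category) for _, name, category in found]


def to_graph_edges(doc_id: str, text: str) -> list[tuple[str, str, str]]:
    """Turn extracted entities into (document, relation, entity) edges."""
    return [(doc_id, "mentions", name) for name, _ in extract_entities(text)]


sample = "Ada Lovelace visited Progress in Madrid on 2024-05-01."
entities = extract_entities(sample)
edges = to_graph_edges("doc-1", sample)
```

The edges are the simplest possible starting point for a knowledge graph: each one links a document to an entity it mentions.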

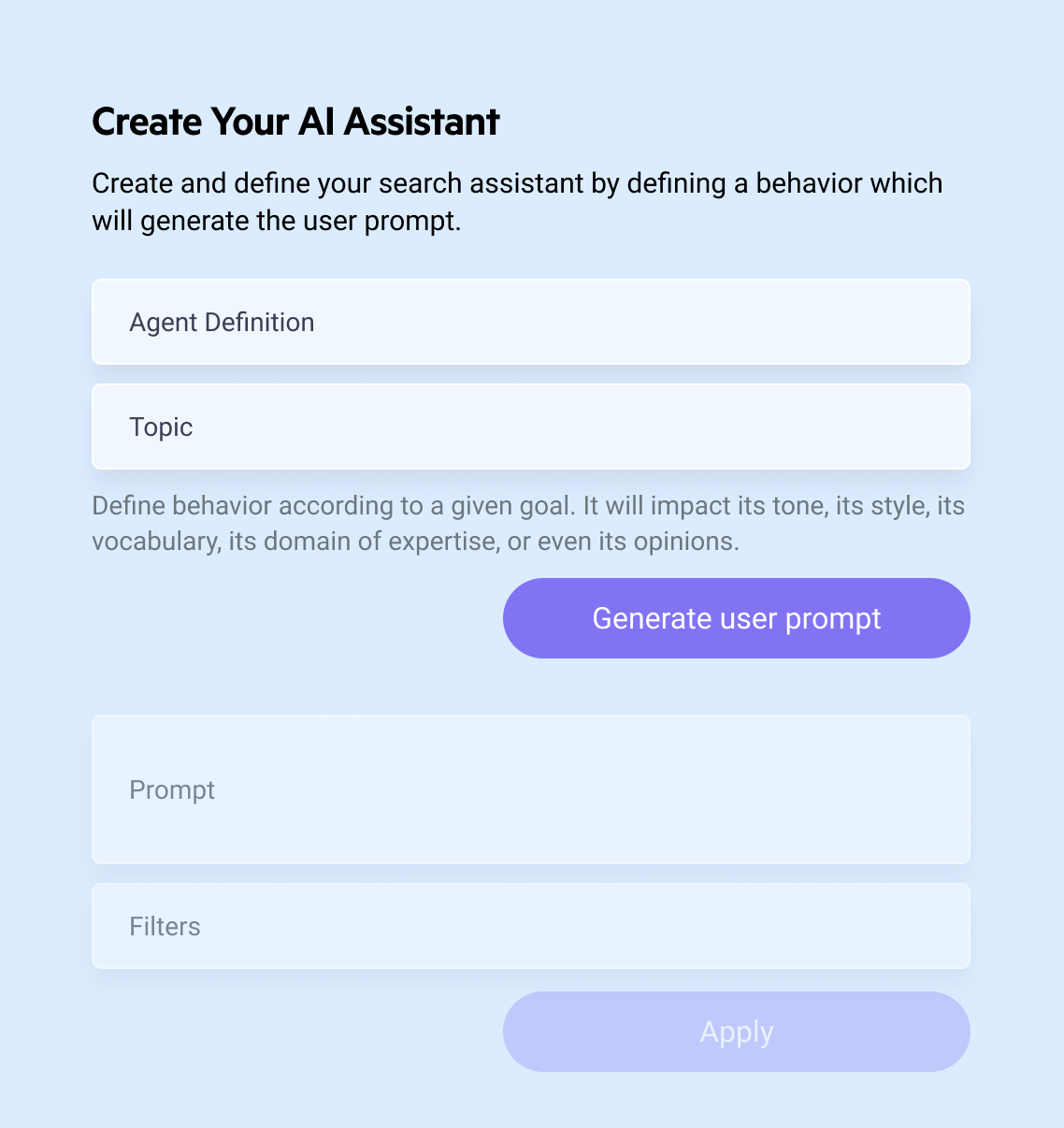

AI Search Assistant

Progress Agentic RAG AI Search Assistant is a simple tool that can be customized and set up according to specific use cases. The agent (in simpler terms, chatbot) interacts with your customers or employees and can behave like an employee support tool, a customer service agent or even a trained troubleshooting agent.

This boosts productivity, clarifies communication and delivers an overall better experience.

RAG Evaluation Model: REMi

REMi is our proprietary RAG evaluation model that assesses the quality and performance of Retrieval Augmented Generation (RAG) pipelines. It evaluates how effectively a RAG system retrieves relevant information and generates accurate responses based on that information. Unlike traditional LLM (Large Language Model) evaluations, REMi is fine-tuned specifically for RAG scenarios, providing focused metrics such as answer relevance, context relevance, and groundedness.
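The three metrics named above each compare a different pair of texts. The sketch below illustrates only that pairing, under a common reading of the terms: answer relevance compares the answer with the question, context relevance compares the retrieved context with the question, and groundedness compares the answer with the context. REMi is a fine-tuned model and its scoring is not described here; the token-overlap score and the `overlap` and `evaluate` helpers are assumptions used purely for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(a: str, b: str) -> float:
    """Fraction of tokens in `a` that also appear in `b` (0.0 when `a` has no tokens)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta) if ta else 0.0


def evaluate(question: str, context: str, answer: str) -> dict[str, float]:
    """Crude lexical proxies for the three RAG metrics (illustrative only)."""
    return {
        "answer_relevance": overlap(question, answer),    # does the answer address the question?
        "context_relevance": overlap(question, context),  # is the retrieved context on topic?
        "groundedness": overlap(answer, context),         # is the answer supported by the context?
    }


context = "The refund window is 30 days"
good = evaluate("refund window days", context, "The refund window is 30 days")
bad = evaluate("refund window days", context, "Shipping takes a week")
```

In this toy setup the second answer scores zero on groundedness because none of its words appear in the retrieved context, which is the kind of signal a RAG evaluation is meant to surface.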

And More Features...

RAG Lab

The first-ever RAG environment that allows you to discover the optimal RAG output for your specific use case. Progress Agentic RAG Lab simplifies the process of deploying RAG by giving you full control to test different search and retrieval strategies, embeddings, prompts, LLMs and many other configurations before you deploy them, saving you time and money while reducing dependencies and errors.

Data Anonymization

Progress Agentic RAG data anonymization is an out-of-the-box feature that automatically detects and removes sensitive personal information from your data. With it, you can comply with regulations like GDPR, while promoting data privacy and security for your organization.
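As a rough illustration of what detecting and removing sensitive information can look like, the sketch below redacts email addresses and phone numbers with regular expressions. It is an assumption-laden toy, not the platform's detector: the `EMAIL` and `PHONE` patterns and the `anonymize` helper are hypothetical, cover only two kinds of personal data, and would miss many real-world formats.

```python
import re

# Simplified patterns (assumptions); real detectors cover far more cases.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


redacted = anonymize("Contact jane.doe@example.com or +1 (555) 123-4567.")
untouched = anonymize("No personal data here.")
```

Replacing matches with a tag such as `[EMAIL]`, rather than deleting them outright, keeps the sentence readable and records what kind of information was removed.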

AI Summarization

The Progress Agentic RAG AI Summarization feature automatically generates AI summaries from all types of data. From videos to large PDFs, our platform can create detailed overviews, regardless of file length. For deeper and more industry-centric summaries, you can customize sections, length and overall summary appearance.

AI Classifications

Classifications, or labels, are the way to categorize information using label sets, which simplifies processes used to retrieve information from NucliaDB. With Progress Agentic RAG, you can train your own AI Classification models and apply them to full resources (such as documents) or specific paragraphs from your data.

Generated Q&A

Organizations hold a huge amount of idle, siloed information that is not being used effectively. Progress Agentic RAG features enable organizations to turn their existing information into Q&As that automatically answer questions from their data repositories. Because the platform understands the real meaning of the user’s query, its answers provide the right information even if the question is formulated differently.

Prompt Lab for LLM Validation

The Agentic RAG Prompt Lab enables you to test how the different LLMs behave and answer questions with your own prompts, using your own data. Save hours of experimentation, align the LLM with your company needs and increase your product value.

Multiple LLMs in Just a Click

Integrate with multiple LLM providers, such as Google Gemini, OpenAI, Mistral, Anthropic, Llama and DeepSeek. Change from one to another in a single click.

User Prompts

Define the prompt and the behavior of the answers provided by the language model from Progress Agentic RAG’s Dashboard. Use our default prompt examples or create your own and see them applied immediately.

AI Agents

Agentic RAG-as-a-Service improves workflows by automating repetitive actions, elevating search, and activating knowledge. Progress Agentic RAG features are designed to deliver reliable and scalable functionalities to AI Agents.

Explore AI Agents

Continuous Training and Model Adapters

Fine-tune your own LLM or create an “Adapter” for the most popular LLM players to create your own version of ChatGPT, Anthropic, Gemini, and more. Because the Progress Agentic RAG process runs continuously, it translates into better answers, understanding and training of customers’ specific knowledge domains.

Multilingual Capabilities

Progress Agentic RAG offers robust multilingual capabilities that allow users to perform searches and generate answers across multiple languages. This feature is particularly beneficial for organizations with a global audience, as it breaks down language barriers and enhances accessibility to information. Combined with the ability to train private LLMs using only your data, you can depend on high-quality, relevant and accurate information retrieval and answer generation, tailored to the specific needs of your organization.

Synthetic Question Generation

Progress Agentic RAG Synthetic Question Generation automatically generates questions and answers from content. Given a specific document, the platform automatically generates every possible question and answer that can be asked and answered from the provided material. This guides users to ask the right questions. Some popular search engines call this functionality “Did you mean?” or “Users also ask for …” when answering user queries.

Integration With Other Progress Products

OpenEdge

Go beyond the limits of structured data by connecting OpenEdge applications to related unstructured content, unlocking opportunities and value from data you already own.

Explore OpenEdge

Ready to Get Started?

Index files and documents from internal and external sources to fuel your company’s use cases with LLMs while assuring RAG quality.