AI in Compliance-Driven Industries: A Regional Perspective (USA, EU, Asia)

Industries like finance, healthcare and law are increasingly using artificial intelligence, but the rules and opportunities vary by region. Because these fields are strictly regulated, companies must follow privacy, safety and ethical standards when using AI.

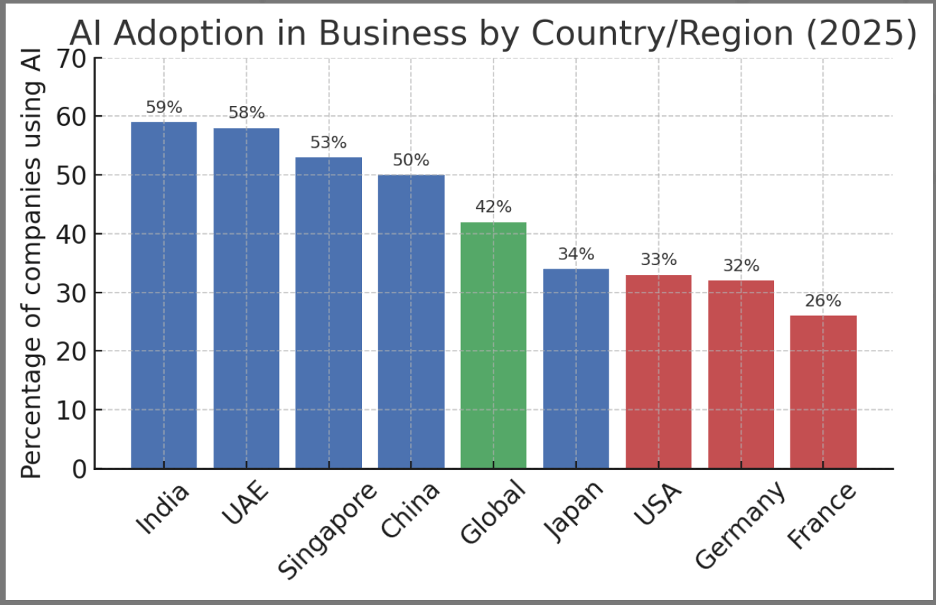

Around the world, businesses are adopting AI at different rates. Countries like India, the UAE, Singapore and China see over half of companies using AI, while in the U.S. and large European countries, only about one in three are doing so. (Source)

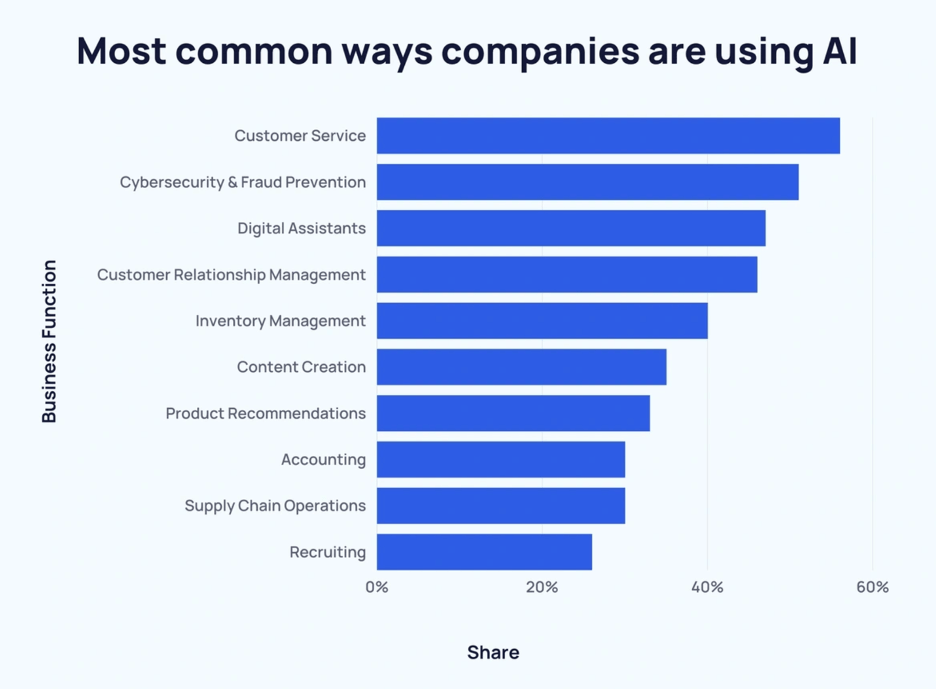

As AI continues to develop, businesses are discovering more ways to use it in their daily operations. Right now, the most popular use is in customer service, with 56% of business owners using AI to handle customer support tasks.

AI in the U.S.: Innovation Amid Complex Regulations

The United States is making major advances in AI across industries like finance, healthcare and law. However, instead of a single national law, the U.S. regulates AI through a mix of industry-specific rules and state laws. This patchwork system creates both opportunities and challenges for businesses adopting AI.

Finance: AI for Risk Management and Compliance

Banks and financial firms in the U.S. have been quick to use AI for tasks like detecting fraud, monitoring suspicious activity, assessing risk and improving customer service. AI helps these institutions meet legal obligations under laws like the Bank Secrecy Act and Know-Your-Customer (KYC) rules by scanning transactions and spotting irregularities.
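
The transaction scanning described above can be sketched in a few lines. This is a minimal illustration, not how any particular bank or vendor implements monitoring: the `Transaction` type, the `flag_transactions` function and both thresholds are hypothetical, and production systems typically combine many more signals with trained models. The sketch flags a transaction either because it reaches a fixed threshold or because its amount is a statistical outlier against the account's history, and it only queues items for human review.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Transaction:
    tx_id: str
    account: str
    amount: float


# Hypothetical thresholds for illustration only; they are assumptions,
# not values taken from any regulation or from the article.
REPORTING_THRESHOLD = 10_000.0
Z_SCORE_LIMIT = 3.0


def flag_transactions(
    history: list[float], incoming: list[Transaction]
) -> list[tuple[str, str]]:
    """Return (tx_id, reason) pairs for transactions that need human review."""
    if not history:
        raise ValueError("history must contain at least one past amount")

    mu = mean(history)
    sigma = pstdev(history)  # population standard deviation of past amounts

    flagged = []
    for tx in incoming:
        if tx.amount >= REPORTING_THRESHOLD:
            flagged.append((tx.tx_id, "at or above reporting threshold"))
        elif sigma > 0 and abs(tx.amount - mu) / sigma > Z_SCORE_LIMIT:
            # Amount is far from what this account usually does.
            flagged.append((tx.tx_id, "unusual amount for this account"))
    return flagged
```

Returning a reason alongside each flag is a deliberate choice: it gives the compliance analyst something to document, which fits the expectation of human oversight and record-keeping.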

Generative AI is now being tested for summarizing reports and forecasting risks. These tools can boost efficiency but also raise concerns about transparency, fairness and privacy. In response, U.S. regulators like FINRA and FSOC have flagged AI as a growing risk, urging firms to make sure AI systems don’t discriminate or violate consumer protection laws. Firms are expected to maintain human oversight and proper documentation.

Healthcare: AI in Diagnosis and Patient Care

U.S. healthcare providers are using AI for everything from analyzing medical images to predicting patient issues and supporting surgery. On the admin side, AI helps with tasks like drafting notes and answering patient questions, reducing the burden on doctors.

But because AI tools handle sensitive patient data and can directly affect health outcomes, they face strict rules. The FDA regulates some AI tools as medical devices, while HIPAA protects the privacy of patient data. However, many existing rules weren’t built for AI that keeps learning after deployment. Agencies are now releasing new guidelines, and some states (like California) require that patients be told when AI is used in their care and be given the option to speak to a human instead.

Legal Services: Efficiency and Ethical Risks

AI is rapidly being adopted in the legal field to help review documents, draft contracts, research case law and prepare legal memos. Generative AI tools like large language models can save lawyers time but they also come with risks.

Some AI tools have generated false legal information—leading to real-world consequences, such as lawyers facing penalties for using fake case citations. Courts have warned that attorneys must verify anything AI produces. Additionally, privacy is key: law firms must be careful not to share confidential data with public AI tools. Many are setting internal rules and using private AI systems to stay compliant.
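
One way a firm might support the verification step is to extract every citation from an AI-generated draft and list the ones nobody has confirmed yet. The sketch below is a rough illustration under stated assumptions: the regular expression covers only a couple of simplified U.S. reporter formats, `unverified_citations` is a hypothetical helper, and the `verified` set stands in for whatever trusted source a firm actually checks against. It does not replace reading the cited cases.

```python
import re

# Simplified pattern for citations such as "347 U.S. 483" or "123 F.3d 456".
# Real citation formats are far more varied; this pattern is an assumption
# made for illustration.
CITATION_PATTERN = re.compile(
    r"\b\d{1,4}\s+(?:U\.S\.|F\.(?:2d|3d|4th))\s+\d{1,4}\b"
)


def unverified_citations(draft: str, verified: set[str]) -> list[str]:
    """Return citations found in the draft that are not in the verified set."""
    found = CITATION_PATTERN.findall(draft)
    # Normalize whitespace so "347  U.S. 483" and "347 U.S. 483" compare equal.
    normalized = [" ".join(c.split()) for c in found]
    return [c for c in normalized if c not in verified]
```

For example, if a draft cites "347 U.S. 483" and an invented "999 F.4th 111", and only the first appears in the verified set, the function returns the second so a lawyer can check it before filing.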

While there’s no national law regulating AI in legal practice yet, state rules are starting to appear. Colorado, for instance, has labeled legal AI as “high-risk” and will require transparency and audits when its new law takes effect.

Regulatory Outlook: State-Led, Agency-Guided

Unlike the EU, the U.S. doesn't have a single national AI law. Instead, it relies on agency rules and existing laws around discrimination, privacy and safety. States are stepping in—over a dozen passed AI-related laws by late 2024.

Examples include:

- Colorado: Requiring risk checks for AI used in high-risk areas like finance or law.

- California: Demanding clear labels for AI-generated content and requiring healthcare AI disclosures.

Federal agencies are also active. The FDA regulates AI medical devices, the FTC warns against deceptive AI use and FINRA watches over financial AI systems. The White House has released guidelines and executive orders promoting responsible AI but these aren’t laws.

A key resource is the NIST AI Risk Management Framework, which many companies follow voluntarily to assess and manage AI risks.

Looking Ahead: Balancing Innovation and Trust

In the near future, AI laws in the U.S. will likely remain fragmented. While there’s bipartisan interest in Congress, federal legislation is still in the works. For now, businesses are encouraged to stay ahead by building strong AI governance programs, testing for bias and promoting transparency.

AI holds enormous promise across U.S. industries—from faster medical breakthroughs to smarter legal research and safer financial systems. But to fully unlock this potential, organizations must build trust by creating AI systems that are fair, secure and compliant with evolving laws.

European Union: Leading the Way in AI Regulation

The EU is setting the global standard for how artificial intelligence should be used safely and ethically. Through the AI Act, adopted in 2024, the EU is putting strong rules in place to make AI systems fair, transparent and trustworthy, especially in heavily regulated sectors like finance, healthcare and law.

AI in European Finance: Innovation Under Strict Oversight

Banks and financial firms in Europe are using AI to fight fraud, assess credit risk, automate trading and manage portfolios. AI also helps with regulatory tasks like reporting and compliance, especially under rules like MiFID II and GDPR. For example, AI can scan massive amounts of transactions to detect suspicious activity and support “Know Your Customer” checks.

But the EU demands that these AI systems follow strict rules:

- They must not discriminate in lending or insurance decisions.

- Personal data must be handled carefully under GDPR, often requiring consent and offering people the right to know how decisions are made.

- The AI Act labels many financial AI tools, such as those used for credit scoring, as high-risk, meaning they will need testing and documentation and must be listed in an EU database before they can be used.
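
A simple way to start testing the non-discrimination requirement in the list above is to compare approval rates across groups. The sketch below computes a disparate impact ratio (lowest group approval rate divided by the highest). The function names are hypothetical, and the 0.8 cut-off is borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts purely as an illustrative assumption. Neither GDPR nor the AI Act prescribes this number, and a single metric is not a full fairness assessment.

```python
from collections import defaultdict

# Illustrative cut-off only (an assumption, not an EU requirement).
ILLUSTRATIVE_CUTOFF = 0.8


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each group label to its share of approved decisions."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group approval rate divided by the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so the rates are equal
    return min(rates.values()) / highest


def needs_review(decisions: list[tuple[str, bool]]) -> bool:
    """True when the ratio falls below the illustrative cut-off."""
    return disparate_impact_ratio(decisions) < ILLUSTRATIVE_CUTOFF
```

If group A is approved 8 times out of 10 and group B 4 times out of 10, the ratio is 0.5 and the model would be sent for closer review.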

European financial authorities, like the European Central Bank (ECB) and EBA, expect banks to have strong oversight and risk controls for AI. While these requirements can increase compliance costs, EU officials argue they will help build trust and allow AI to scale safely in finance.

AI in European Healthcare: High Potential, High Regulation

Hospitals and medical companies in the EU are turning to AI for:

- Analyzing medical images (like X-rays and MRIs).

- Helping doctors diagnose conditions.

- Supporting drug development and personalized treatments.

- Managing hospital workflows and triaging patients through chatbots.

Many of these tools are already regulated under the Medical Devices Regulation (MDR) or In Vitro Diagnostic Regulation (IVDR). If AI is used in diagnosis or treatment, it must be safe, tested and approved (via CE marking). The AI Act adds more requirements, classifying most healthcare AI as high-risk. This means:

- Data used to train AI must be accurate and fair.

- AI must include human oversight and be explainable.

- Developers need to prove the system works safely throughout its lifecycle.

Health data is especially protected under GDPR, which requires strong privacy safeguards and patient consent. Patients also have the right to know if AI influenced their care decisions.

With over 140 existing rules already touching on AI in healthcare, compliance is complex. But the EU sees strong oversight as key to unlocking AI’s full potential in medicine while keeping patient safety and rights front and center.

AI in European Legal Services: Careful Use with Ethical Boundaries

Law firms in the EU are beginning to use AI to:

- Review documents and contracts.

- Conduct legal research.

- Automate repetitive legal tasks.

Some public legal systems, like in Estonia and Spain, have even experimented with AI tools in court settings. But European leaders are cautious. For instance, AI that tries to predict a judge’s decision or an individual’s future behavior is likely to be banned or tightly restricted under the AI Act due to ethical concerns.

Key compliance and ethical rules include:

- Respecting GDPR and client confidentiality laws.

- Avoiding reliance on AI that can't be verified.

- Ensuring lawyers remain responsible for the quality of legal advice—even if AI helped produce it.

Most European firms are setting up internal controls like AI ethics committees and strict review processes for AI-generated work. The focus is on using AI to support—not replace—human legal professionals, with a strong emphasis on transparency and accountability.

The EU AI Act: A Risk-Based Legal Framework

The EU AI Act is the first major law globally to regulate AI across all sectors. It groups AI systems into categories based on risk:

- Unacceptable risk: Banned (e.g. social scoring, manipulative systems).

- High-risk: Allowed but highly regulated (e.g. healthcare diagnostics, financial scoring).

- Limited risk: Must follow transparency rules (e.g. AI chatbots).

- Minimal risk: No additional obligations under the Act (e.g. AI in video games).

High-risk systems will need to:

- Use reliable and unbiased data.

- Have technical documentation and logs.

- Be registered in a public database.

- Include human oversight and transparency.
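
For product teams, the risk tiers and high-risk obligations described above can be tracked as a small lookup structure. The sketch below is a simplification for illustration: the use-case names come from the examples in this article, while `EXAMPLE_TIERS`, `HIGH_RISK_CHECKLIST` and `open_obligations` are hypothetical names, and real classification under the Act depends on legal analysis rather than a dictionary lookup.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Example use cases from the article; the mapping is a simplification.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "healthcare diagnostics": RiskTier.HIGH,
    "financial scoring": RiskTier.HIGH,
    "ai chatbot": RiskTier.LIMITED,
    "video game ai": RiskTier.MINIMAL,
}

# Mirrors the high-risk requirements listed in the article.
HIGH_RISK_CHECKLIST = (
    "reliable and unbiased data",
    "technical documentation and logs",
    "registration in public database",
    "human oversight and transparency",
)


def open_obligations(use_case: str, completed: set[str]) -> list[str]:
    """Return checklist items still outstanding for a known example use case."""
    tier = EXAMPLE_TIERS[use_case.lower()]  # KeyError for unknown use cases
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError(f"'{use_case}' falls in the banned tier")
    if tier is RiskTier.HIGH:
        return [item for item in HIGH_RISK_CHECKLIST if item not in completed]
    if tier is RiskTier.LIMITED:
        notice = "transparency notice"
        return [] if notice in completed else [notice]
    return []  # minimal risk: nothing to track in this sketch
```

Calling `open_obligations("financial scoring", {"technical documentation and logs"})` returns the three remaining checklist items, which gives a team a running list of what is still outstanding.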

General-purpose AI tools like GPT-4 are also addressed. They must disclose when content is AI-generated and take steps to reduce potential risks.

Most of the Act’s obligations apply from 2026, with some provisions phased in earlier or later, giving businesses time to prepare. However, any company, inside or outside Europe, must follow the rules if it wants to offer AI services in the EU.

Beyond the AI Act: A Network of Supporting Laws and Agencies

The EU is aligning the AI Act with other existing laws:

- GDPR protects data privacy.

- Medical Devices Regulation (MDR) covers health tech.

- Financial laws like PSD2 and MiFID II apply to AI in banking.

- A proposed AI Liability Directive would make it easier to seek damages if AI causes harm.

To support responsible innovation, the EU is also offering:

- Regulatory sandboxes where companies can test AI tools under supervision.

- Funding for AI research and responsible development.

Despite fears that strict rules could slow innovation, many European businesses see compliance as a strength—helping them offer “trustworthy AI” products that customers and regulators can rely on.

Looking Forward: A Trust-First Approach to AI

Europe’s strategy is to lead with safety, ethics and public trust. By setting high standards now, the EU hopes to unlock AI’s potential across finance, healthcare and law—without sacrificing human rights or public confidence.

The "Brussels Effect" means the EU’s rules may influence global companies to raise their standards too. As other countries watch closely, the EU is showing what responsible AI governance could look like on a global scale.

Asia: Balancing Rapid AI Growth with Emerging Regulation

Asia is experiencing a surge in AI adoption, especially in finance, healthcare and legal/government services, but the region is highly diverse. While some countries (like Singapore, Japan and South Korea) lead with sophisticated AI strategies, others are still shaping their regulatory frameworks. The common thread: encourage innovation but build guardrails to avoid harm.

AI in Asian Finance: Innovation with Growing Scrutiny

Use Cases:

- AI powers fraud detection, AML compliance, robo-advisory, algorithmic trading and credit scoring (often using alternative data).

- Fintech in India, Indonesia and Vietnam uses AI to expand access to credit and mobile payments.

- Tech giants in China revolutionized digital finance with AI, prompting tighter regulation after concerns over bias and algorithmic abuse.

Regulatory Trends:

- Singapore and Hong Kong use soft law approaches like AI ethics principles (e.g., Singapore’s FEAT principles – Fairness, Ethics, Accountability, Transparency).

- China has introduced stricter rules, including oversight for AI in financial decision-making and algorithms that influence public opinion.

- Regulators in Japan and India are evaluating risks tied to AI in lending, insurance and market trading.

Compliance Outlook:

- Growing focus on data privacy, fairness and AI model accountability.

- More AI-specific financial regulations are expected across the region as governments catch up to industry innovation.

AI in Asian Healthcare: High Impact, Evolving Regulation

Use Cases:

- AI is used for diagnostics (medical imaging, triage tools), hospital management, robotic surgery and drug discovery.

- Telehealth AI helps extend care to remote or rural areas, especially in India and Southeast Asia.

- Countries like China, Japan and South Korea lead with smart hospital initiatives and approved AI software for clinical use.

Regulatory Trends:

- Japan updated its medical laws and passed a Next-Generation Medical Infrastructure Act.

- South Korea enacted a Digital Medical Devices Act and a Basic AI Act, both adopting a risk-based framework similar to the EU’s.

- China regulates medical AI through device approvals (NMPA) and personal data protections (PIPL).

- Singapore released AI healthcare guidelines for safe deployment.

Compliance Outlook:

- Major themes: promoting AI accuracy, doctor oversight, data protection and local validation.

- Countries are launching regulatory sandboxes to test healthcare AI before full rollout.

- Regional variation is high but most nations are moving toward more structured AI oversight.

AI in Legal and Government Services: Cautious but Growing Adoption

Use Cases:

- Singapore, Hong Kong and Japan use AI for document review, contract analysis and legal translation.

- India is exploring AI to reduce court backlogs and aid legal research.

- China uses “Smart Court” systems and AI research assistants, though judges remain responsible for final rulings.

Regulatory and Ethical Considerations:

- Most countries rely on existing confidentiality and data protection laws, though AI-specific legal rules are emerging (e.g., China's judicial AI guidelines).

- Singapore encourages voluntary frameworks and internal governance.

- In Asia, there’s cultural and legal caution about replacing human judgment in justice systems.

Compliance Outlook:

- AI use must preserve transparency, fairness and human control.

- Public sector AI (e.g., for tax compliance, legal translation) is expanding but with a need for bias mitigation and rights protection.

- Asia may innovate in certifying trusted AI tools via public-private partnerships.

Regulatory Landscape: A Patchwork Moving Toward Convergence

- Asia has no single AI law across the region but national laws are evolving fast.

- Countries are referencing EU-style risk-based approaches, OECD principles and global best practices.

- AI sandboxes, sector-specific guidelines and proposed national AI acts are tools governments are using to keep pace.

Emerging Themes Across Asia:

- Pro-innovation stance in most countries, tempered by growing regulatory maturity.

- Increasing emphasis on ethical AI, explainability and cross-border standards (e.g., through ASEAN, APEC, GPAI).

- AI is seen not just as a business tool but as a driver of social development (e.g. financial inclusion, rural healthcare access).

Looking Ahead: Asia’s AI Future

- Finance: AI will enable smarter banking, better fraud prevention and financial inclusion—but tighter rules are coming.

- Healthcare: AI will reshape diagnostics and hospital operations—but only if trust and safety keep pace.

- Legal/Government: Gradual AI adoption will support legal research and public services—under human oversight.

With strong regional diversity, Asia’s path forward will not be uniform but the general direction is clear: embrace AI’s benefits, manage the risks and develop governance that helps align AI with public interest and regional values.

Conclusion: Building a Responsible AI Future in Regulated Sectors

As AI continues to redefine the financial, healthcare and legal landscapes, one thing is clear: regulation is no longer a question of “if” but “how.” The United States, European Union and Asia each represent distinct yet converging paths toward AI governance. The U.S. leans innovation-first, with an emerging regulatory patchwork focused on addressing key risks. The EU, in contrast, has taken a bold, rule-based approach with its AI Act, setting a global benchmark for AI oversight. Asia presents a dynamic middle ground, ranging from China’s top-down controls to Singapore’s sandbox-enabled innovation, and is forming a diverse but increasingly cohesive regulatory ecosystem.

Despite these differences, a global consensus is forming around core principles: fairness, transparency, accountability and safety.

Across all regions, regulators and industry leaders are recognizing that embedding these values into the AI development lifecycle, from design through deployment, is essential. Financial institutions are establishing AI ethics and compliance teams, healthcare providers are validating AI systems against clinical safety standards and legal professionals are setting internal protocols to promote responsible AI use.

For developers and product teams, this means proactively designing AI systems that are explainable, privacy-compliant and bias-aware, especially for high-risk applications like lending, diagnosis and legal decision support. It also means staying agile: tracking evolving rules, engaging with regulators and building governance frameworks that align with both local and international expectations.

Looking ahead, AI’s potential in regulated industries remains enormous. In finance, smarter AI could help detect and prevent fraud in real time across global networks. In healthcare, it could accelerate diagnosis and personalize treatment at scale. In law and government, AI could streamline case management and improve access to justice, all while maintaining human oversight and legal integrity.

To unlock these benefits without eroding trust, a collaborative, adaptive approach to regulation is essential. Policymakers must remain open to iteration as technology evolves. Industry must step up with transparency and responsibility. And globally, regulators can continue learning from each other: sharing best practices, aligning standards where possible and innovating in governance as quickly as AI innovates in technology.

In sum, we are moving toward an AI future that is not just powerful but principled, one that upholds the public interest while enabling innovation. If regulators, companies and civil society continue to work together across borders, AI can become not only a transformative force but a trusted one, especially in the sectors where trust matters most.

Megha Jain

Lead User Researcher

I am a seasoned researcher with a background in design and a strong ability to identify gaps in the digital product ecosystem. My work as a Lead User Researcher focuses on creating solutions that serve both users and organizations, improving ease of use while building scalable and profitable product models. With experience across the full product lifecycle, from zero to one through large-scale growth, I bring both strategic perspective and hands-on research expertise. I’ve also built research practices and teams from the ground up, embedding user-centered design within organizational culture.