Where can ChatGPT help R&D? My answer: in the paper trail.

In R&D, the most valuable asset is what the organization already knows. Every lab notebook, report, presentation and dataset represents a fragment of the institutional memory: what’s been tried, what’s worked, what’s failed and why. Unlike most business records, this knowledge has enduring value. It can inform new discoveries, support IP prosecution or help avoid repeating past mistakes.

I learned this lesson firsthand during my very first project at DuPont. It was an ambitious collaboration with MIT at the cutting edge of materials science. And yet, what got me jump-started was an 80-year-old company report detailing how a remarkably relevant study had been done some eight decades earlier.

Unlocking that value with AI isn’t just a matter of clever prompting; it’s a matter of context engineering. This emerging discipline focuses on designing the full information ecosystem around a model (what it sees, when, and in what form) so that models like ChatGPT can perform real, domain-specific tasks reliably. In R&D, that means making decades of technical knowledge available in a form that AI can interpret and use.

But historical R&D knowledge is notoriously diverse. Some of it lives in spreadsheets or databases; much more is locked away in reports, slide decks or even paper notebooks. Terminology also evolves: what was once a material safety data sheet (MSDS) is now simply a safety data sheet (SDS). Meanwhile, crucial context is often undocumented, assumed or lost when team members move on.

R&D also operates under a unique tension: sensitive information must be protected with strict access controls, yet relevant knowledge must be shared across disciplines, regions and generations of work.

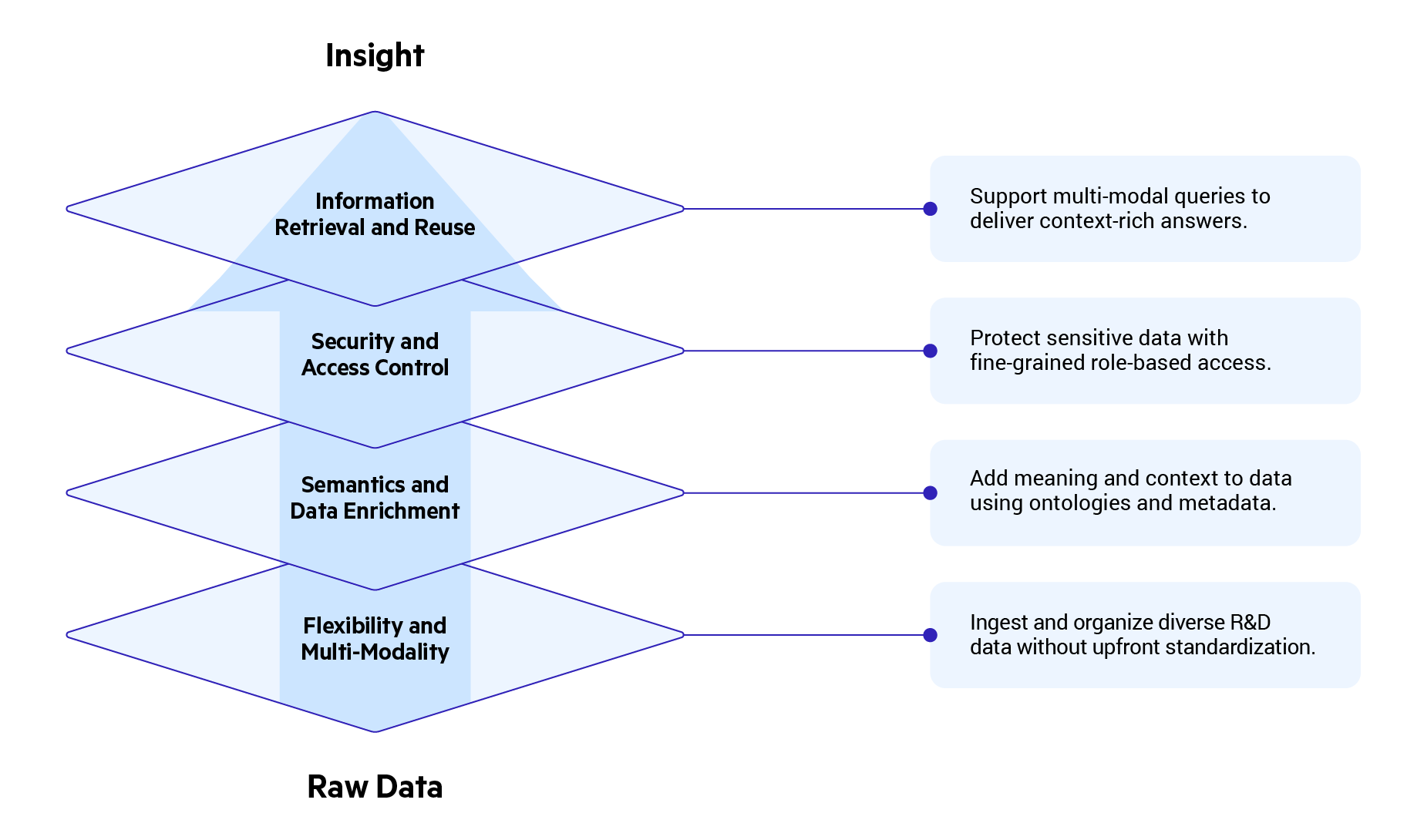

So, for AI to deliver meaningful, trustworthy answers, the paper trail has to be engineered into context (indexed, linked and surfaced exactly as the model needs it) while the organization’s IP remains secure. That is the essence of AI context engineering for R&D: curating and packaging the right slice of institutional memory for every query. Achieving this requires more than plugging in a model; it demands an underlying platform built on four core capabilities:

Flexibility and Multimodality

Effective AI context engineering begins with the ability to capture and organize R&D data, which is inherently diverse—ranging from structured datasheets to unstructured documents, scanned notebooks, images, videos, analytical spectra and more. It’s not only multiformat, but also dynamic, domain-specific and constantly evolving as materials, methods and technologies advance.

To build retrieval-ready context for AI, all of this information must be captured, regardless of its format or origin. Rather than forcing standardization upfront, it is often more effective to use systems that can ingest data in its native format and support progressive harmonization over time. This approach preserves flexibility, eases integration across silos and allows ongoing adaptation without disrupting existing R&D workflows.
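To make "ingest natively, harmonize progressively" concrete, here is a minimal sketch assuming a simple in-memory store. The class names, field names and sample records are all hypothetical and do not represent the MarkLogic API. Each record keeps its original payload untouched, and harmonized fields are layered on in later passes, an idea sometimes called the envelope pattern.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Record:
    """A stored item: the untouched original plus metadata added over time."""
    uri: str
    source_format: str          # hypothetical labels, e.g. "ocr-text", "json"
    original: Any               # kept exactly as ingested, never rewritten
    harmonized: dict = field(default_factory=dict)  # filled in progressively


class NativeStore:
    """Hypothetical store: no schema at load time, harmonization added later."""

    def __init__(self) -> None:
        self.records: dict[str, Record] = {}

    def ingest(self, uri: str, source_format: str, original: Any) -> None:
        # No schema is imposed at load time; anything can be stored as-is.
        self.records[uri] = Record(uri, source_format, original)

    def harmonize(self, uri: str, **fields: Any) -> None:
        # Later passes add normalized fields without touching the original.
        self.records[uri].harmonized.update(fields)

    def find(self, **criteria: Any) -> list[str]:
        # Queries run on harmonized fields only; records not yet harmonized
        # for a field simply do not match it yet.
        return sorted(
            r.uri for r in self.records.values()
            if all(r.harmonized.get(k) == v for k, v in criteria.items())
        )


# Hypothetical sources: an OCR'd old report and a modern LIMS export in Fahrenheit.
store = NativeStore()
store.ingest("/reports/old-017.txt", "ocr-text", "Polymer prepared at 180 C from ...")
store.ingest("/lims/run-88123.json", "json", {"temp_F": 356, "material": "PA-66"})

# First harmonization pass: agree on one temperature unit.
store.harmonize("/reports/old-017.txt", temperature_c=180)
store.harmonize("/lims/run-88123.json",
                temperature_c=round((356 - 32) * 5 / 9), material="PA-66")
```

The design choice is that harmonization never blocks ingestion: a new source can be loaded on day one and becomes queryable field by field as mappings are agreed on.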

The Progress MarkLogic platform exemplifies this methodology with a multi-model architecture that supports structured, unstructured and binary data side by side—enabling R&D teams to bring diverse information together in a single, AI-ready environment.

Semantic Layer and Progressive Data Enrichment

To make diverse R&D data meaningful and actionable, without overhauling existing systems, organizations can apply a semantic layer. Sitting on top of raw content, the semantic layer helps systems interpret not just the format of information, but its meaning. It links concepts, standardizes terminology and provides contextual relationships that enable AI to understand how disparate pieces of data connect.

For example, it can clarify that “EtOAc” and “ethyl acetate” refer to the same substance, or that a catalyst mentioned in one study is functionally related to a reaction pathway described in another. These connections help to restore the crucial context that is often lost or taken for granted.
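The terminology side of a semantic layer can be sketched as a small thesaurus with preferred and alternative labels, in the spirit of SKOS `prefLabel`/`altLabel`. This is a plain-Python illustration under that assumption, not the Semaphore API; the concept table is hypothetical and would normally live in a managed ontology.

```python
# Hypothetical mini-thesaurus: preferred label -> alternative labels.
CONCEPTS = {
    "ethyl acetate": {"EtOAc", "ethyl ethanoate"},
    "safety data sheet": {"SDS", "MSDS", "material safety data sheet"},
}

# Invert into a case-insensitive lookup from any label to its preferred label.
LABEL_TO_CONCEPT: dict[str, str] = {}
for preferred, alternates in CONCEPTS.items():
    for label in {preferred, *alternates}:
        LABEL_TO_CONCEPT[label.casefold()] = preferred


def normalize(term: str) -> str:
    """Map a term to its preferred label; unknown terms pass through unchanged."""
    return LABEL_TO_CONCEPT.get(term.casefold(), term)


def expand(term: str) -> set[str]:
    """Return every known label for the concept behind `term` (query expansion)."""
    preferred = normalize(term)
    return {preferred, *CONCEPTS.get(preferred, set())}


print(normalize("EtOAc"))  # prints: ethyl acetate
print(normalize("MSDS"))   # prints: safety data sheet
```

Normalizing at indexing time and expanding at query time are complementary: either way, a search for "EtOAc" can reach a 1970s report that only ever says "ethyl acetate".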

The Progress Semaphore platform supports this approach by providing a framework for managing domain ontologies, aligning terminology and enriching content with semantic metadata. It helps transform disconnected data into a machine-readable knowledge graph for AI contextualization.
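The catalyst-to-pathway link from the example above can be pictured as a tiny knowledge graph. In this sketch, plain Python triples stand in for an RDF store, and every identifier is hypothetical; it is not how Semaphore represents or queries its graph. Walking the graph a few hops connects a study that only mentions a catalyst to a later study that describes the pathway it catalyzes.

```python
from collections import defaultdict

# Hypothetical (subject, predicate, object) triples; names are illustrative only.
TRIPLES = [
    ("doc:study-A", "mentions", "chem:catalyst-X"),
    ("chem:catalyst-X", "catalyzes", "rxn:pathway-P"),
    ("doc:study-B", "describes", "rxn:pathway-P"),
    ("doc:study-C", "mentions", "chem:solvent-Y"),
]

# Index outgoing and incoming edges so the graph can be walked in both directions.
out_edges: dict[str, list[tuple[str, str]]] = defaultdict(list)
in_edges: dict[str, list[tuple[str, str]]] = defaultdict(list)
for s, p, o in TRIPLES:
    out_edges[s].append((p, o))
    in_edges[o].append((p, s))


def related_documents(doc: str, max_hops: int = 3) -> set[str]:
    """Breadth-first walk (ignoring edge direction) to find other docs within max_hops."""
    frontier, seen = {doc}, {doc}
    for _ in range(max_hops):
        next_frontier = set()
        for node in frontier:
            for _, neighbor in out_edges[node] + in_edges[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    next_frontier.add(neighbor)
        frontier = next_frontier
    return {n for n in seen if n.startswith("doc:") and n != doc}


print(related_documents("doc:study-A"))  # prints: {'doc:study-B'}
```

Neither study shares a keyword with the other; the connection exists only through the explicit links, which is the kind of context a semantic layer can restore.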

Robust Security and Granular Access Control

R&D knowledge is not only valuable but also highly sensitive, with implications for intellectual property protection, regulatory compliance and competitive advantage. A data platform must enforce strict access boundaries aligned with the need-to-know principle.

Effective access management also requires fine-grained control at the level of documents, sections, fields or metadata. That granularity is what strikes the balance: protecting sensitive IP and preventing accidental exposure while still enabling broad access for data sharing and reuse.
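A minimal sketch of the two layers described above, assuming a hypothetical role-based policy: a document-level need-to-know check, then field-level redaction before anything reaches the model's context. The role names, URIs and policy tables are invented for illustration and are not MarkLogic's security API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


# Hypothetical policy: roles allowed to read a document, and roles required per field.
DOC_READ_ROLES = {"/reports/catalyst-screen.json": {"catalysis-team", "ip-counsel"}}
FIELD_ROLES = {"formulation": {"ip-counsel"}, "supplier_cost": {"procurement"}}


def view(user: User, uri: str, doc: dict) -> Optional[dict]:
    """Return the document as this user may see it, or None if it is off-limits."""
    allowed = DOC_READ_ROLES.get(uri, set())
    if not user.roles & allowed:
        return None  # document level: need-to-know not met, nothing is returned
    visible = {}
    for key, value in doc.items():
        needed = FIELD_ROLES.get(key)
        # field level: keep the value only if no extra role is required
        # or the user holds at least one of the required roles
        visible[key] = value if needed is None or user.roles & needed else "[REDACTED]"
    return visible


# Hypothetical document and users.
uri = "/reports/catalyst-screen.json"
doc = {"title": "Catalyst screening", "formulation": "confidential", "supplier_cost": 12.5}
scientist = User("alice", frozenset({"catalysis-team"}))
counsel = User("bob", frozenset({"ip-counsel"}))
outsider = User("eve", frozenset({"marketing"}))
```

Applying these checks before retrieval results are packaged into a prompt means the model never sees what the user is not entitled to see.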

The MarkLogic platform is built with these needs in mind. Its security model supports role-based access, redaction and compartment-level protections, and has been battle-tested in highly regulated, mission-critical environments. This allows organizations to deploy AI solutions confidently while driving secure, responsible innovation.

Information Retrieval and Reuse

With AI, the goal isn’t just to find data; it’s to deliver an answer grounded in prior work. To support real scientific inquiry, retrieval must go far beyond keyword matching. It needs to understand the user’s intent, incorporate relevant scientific background and determine what kind of context will best support a meaningful response.

Achieving this demands flexible, multi-modal querying capabilities. Sometimes a full-text search is appropriate; at other times the task calls for SQL queries, semantic reasoning or vector similarity search. In many cases, a combination of these approaches is needed to gather and assemble the right context.
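Combining retrieval modes can be sketched with reciprocal rank fusion (RRF), one common way to merge ranked lists; it is a swapped-in illustration, not a claim about how any particular platform fuses results. Here term overlap stands in for a real full-text scorer such as BM25, and toy 3-dimensional vectors stand in for embeddings from an actual model. The corpus, vectors and constant `k=60` (a commonly used default) are assumptions.

```python
import math

# Hypothetical corpus: text plus a toy precomputed embedding per document.
DOCS = {
    "r1": ("hydrogenation with palladium catalyst at low pressure", [0.9, 0.1, 0.0]),
    "r2": ("palladium recovery from spent catalyst", [0.2, 0.9, 0.1]),
    "r3": ("selective reduction of nitro groups over Pd/C", [0.8, 0.2, 0.1]),
}


def keyword_rank(query: str) -> list[str]:
    """Rank docs by count of shared query terms (a crude stand-in for BM25)."""
    terms = set(query.lower().split())
    scores = {d: len(terms & set(text.lower().split())) for d, (text, _) in DOCS.items()}
    return [d for d in sorted(scores, key=lambda d: (-scores[d], d)) if scores[d] > 0]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_rank(query_vec: list[float]) -> list[str]:
    """Rank all docs by cosine similarity to the query embedding."""
    return sorted(DOCS, key=lambda d: (-cosine(query_vec, DOCS[d][1]), d))


def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: each list contributes 1 / (k + position) per doc."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for pos, d in enumerate(ranking, start=1):
            scores[d] = scores.get(d, 0.0) + 1.0 / (k + pos)
    return sorted(scores, key=lambda d: (-scores[d], d))


# The query vector is a hypothetical embedding of the same question.
fused = rrf([keyword_rank("palladium catalyst hydrogenation"), vector_rank([0.9, 0.1, 0.0])])
print(fused)  # prints: ['r1', 'r2', 'r3']
```

Note that `r3` says "Pd/C" and never matches the keyword "palladium"; it reaches the candidate list only through the vector ranking, which is exactly why a combination of approaches is often needed.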

This is where the MarkLogic platform excels, offering state-of-the-art full-text search, relational (SQL) queries, semantic graph queries and vector similarity search within the same platform. With the Optic API, an AI application can combine these query types, enabling complex, mixed-mode retrieval strategies from a single interface. This flexibility is foundational to building retrieval pipelines in real-world R&D environments.
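The last step of such a pipeline, packaging results into context, can be sketched in plain Python. This does not use the Optic API; it only illustrates the shape of a mixed-mode step under stated assumptions. `ranked_ids` stands for the output of any full-text, vector or hybrid ranking, `where` is a structured (SQL-like) predicate, and the records, sites, years and character budget are all hypothetical.

```python
from typing import Callable

# Hypothetical records: structured fields plus a text snippet.
RECORDS = {
    "r1": {"year": 1946, "site": "site-A", "text": "Hydrogenation over a Pd catalyst."},
    "r2": {"year": 2015, "site": "site-B", "text": "Pd recovery from spent catalyst."},
    "r3": {"year": 1988, "site": "site-A", "text": "Selective nitro reduction over Pd/C."},
}


def assemble_context(
    ranked_ids: list[str],
    where: Callable[[dict], bool],
    budget_chars: int = 200,
) -> str:
    """Filter ranked hits with a structured predicate, then pack cited snippets into a budget."""
    parts: list[str] = []
    used = 0
    for rid in ranked_ids:
        rec = RECORDS[rid]
        if not where(rec):
            continue  # structured filter applied on top of the relevance ranking
        line = f"[{rid}, {rec['year']}] {rec['text']}"  # keep a citation with every snippet
        if used + len(line) + 1 > budget_chars:
            break  # stop once the next snippet would exceed the context budget
        parts.append(line)
        used += len(line) + 1
    return "\n".join(parts)


context = assemble_context(["r1", "r2", "r3"], lambda r: r["site"] == "site-A")
print(context)
```

Keeping the source ID and year next to each snippet lets the model cite prior work, and lets a scientist trace every answer back to the original record.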

Final Thoughts

R&D organizations are sitting on decades of invaluable knowledge, but turning that paper trail into a productive AI partner requires context engineering—the deliberate design of systems that transform diverse, sensitive and complex scientific information into structured, meaningful context that AI can understand and use. As AI moves from experimentation to production, the future of R&D belongs to those who don’t just ask better questions, but who build the systems that deliver better context.

Fan Li

R&D AI & Digital Consultant | Chemistry & Materials

With over 15 years of experience, Fan has designed, built, and supported enterprise-grade R&D AI and digital solutions with robust security for Fortune 500 chemical and biotech companies. He speaks the language of both lab scientists and IT professionals, bridging research and technology through broad expertise. Passionate about empowering R&D teams, Fan leverages the latest IT advancements—especially generative AI—to enhance efficiency, improve knowledge access, and drive innovation.

His work spans a wide range of R&D challenges, from developing custom data analysis tools for exploratory research to building enterprise-scale AI systems that unify siloed scientific information and automate discovery processes. Whether setting strategic direction, building hands-on solutions, or training research staff, he brings a practical, results-driven approach tailored to science-driven organizations.