There are many buzzwords and phrases used across industries for marketing, sales and general business-oriented communication. Some need to be retired; however, there are a few that stand the test of time, particularly those that convey value.

For example, “sales to finance” (a.k.a. S2F) is used in media and entertainment to capture a large chunk of a digital distribution process, spanning contract sales through financial billing and invoicing. In manufacturing, “art to part” covers a cycle that starts with back-of-the-napkin sketches of ideas captured in a lunchroom (or bar), through design, prototyping, sourcing, manufacturing and assembly.

But the one I like the most these days is “concept to consumer.” A book with that phrase in the title tops the list in a Google search, and it dives deeply into the world of product development. This post is not a review of that book. It’s mentioned to recognize that others coined and use this phrase in a somewhat related context. Rather, this post traces the journey (through use cases) from concept to consumer in the context of rapidly changing environments (business and technology) and vast amounts of data that is collected along the way, and how a data hub can help in that journey.

Product Lifecycle Data Challenges

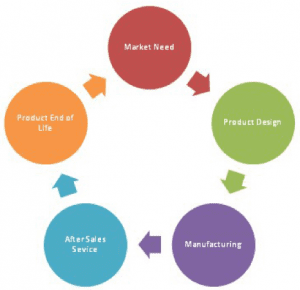

Concepts that successfully emerge from back-of-the-napkin sketches generate mountains of information at every stage of their product lifecycle (PLC). That information is often needed at some, if not all, of the other stages of the PLC, as illustrated below.

Once an idea moves beyond the napkin, it typically forms the basis of marketing and product requirements documents (MRDs and PRDs, respectively). Subsequently, MRDs and PRDs can reference other documents that pertain to engineering, sales, finance and other enterprise functions. The repositories that contain these documents are valuable systems of record (SoRs), but they often become data silos, slowing down 360 initiatives centered around products, suppliers, customers and other key business entities.

The various file formats alone (.docx, .ppt, .xlsx, .dxf, .jpg, .dwg, .3ds, etc.) present integration challenges for applications that merely search for information. As we’ll see in the use cases, connecting documents among this data in order to answer even simple queries like, “How many suppliers are there for a given part?” is a bigger challenge. This is because each enterprise function (engineering, sales, finance, etc.) and supply chain partner invariably creates a new (and different) entity identifier—along with new and different metadata pertaining to the part, subassembly or final assembly in question—in order to do their specific tasks.

Along with label identifiers, the data synchronization problem is exacerbated by different data types (integer, string, various date and currency formats, etc.) and different structures for the same information (e.g., is an address contained in one field or multiple fields to capture street, city, state and zip). In addition to reconciling label and structural information, there are semantic inconsistencies that often need to be fixed (e.g., does the “# of items” field represent the actual number of parts in an inventory database or the number of boxes containing a given number of parts?).

Added to this are business processes that require 360 views of one or more entities (customer, part, assembly, supplier, etc.) that are tracked in these various SoRs. A great example is a theme park that was required to maintain 8,000 anchor points on a periodic basis within the park. Anchor points literally are hooks, or fastening points, where trained workers can brace themselves in order to maintain rides and attractions. Maintenance procedures require access to information contained in design documents (e.g., AutoCAD 2D drawings and 3D models), asset tracking documents (e.g., Maximo), learning management system (LMS) documents to track worker training and certification, and a variety of documents for process management contained in Excel files or SharePoint repositories.

This represents complex data and application integration challenges, which, in this case, impact consumer safety along with enterprise costs and operational efficiency. Let’s see how this proliferation of data manifests itself as challenges in other common use cases.

Manufacturer of Automobile Safety Equipment – In a company that manufactures auto safety components, an engineer might label a part the primary initiator during the design phase. The primary initiator is an explosive pill-like component which, under controlled conditions, inflates an airbag. The airbag is inflated via its parent assembly the design engineer labels— you may have guessed, a primary inflator. Along the way, the engineer, or the software in which he works, likely assigns an identifier to both the initiator and inflator. It is not uncommon, and in fact is typically the case, that as parts such as these move through the various software systems used by each enterprise function along the PLC, they acquire additional identifiers and metadata for function-specific management. Canonical identifiers and metadata may emerge, through master data management (MDM) initiatives. However, unless solid data governance polices are active throughout the enterprise, finding standard labels for the thousands, if not millions, of parts used in today’s global supply chains is too much to hope for.

At some point in the life of these components, an airbag failure occurs. In order to prevent and/or limit injury or loss of life, a traceability expert will need to determine the root cause of the failure, so that immediate remediation steps can be set in motion throughout the supply chain. It becomes crucial that the canonical name—and all related names for primary initiator and primary inflator, including replacement parts, which may come from partners—is known.

Manufacturer’s Factory – When IoT outfitted test machines feed streams of information to a digital twin, and the signals indicate a pending problem, all components of that assembly under test must be identified along with all dependent assemblies and processes. The value of the digital twin, driven by integrated data, is to ward off added cost, delays or safety issues that might occur without the twin’s predictive power. If pending problems aren’t caught, not only are opportunities to reduce costs of failure missed, but product liability goes up. This negatively impacts a company’s brand (arguably its most valuable asset) and possibly increases safety risks to consumers (no argument as to whether this must be avoided).

Pharmaceutical Company – When a logistics analyst in a pharmaceutical company needs to connect information from SAP (ERP relational tables), carrier and shipper management information (also in relational tables), and lane data forms from the content management system (CMS), the identifiers from the relational systems are not easily joined with identity information contained in document-oriented lane forms under CMS control. Lack of visibility not only leads to distribution inefficiency but can also impact the pharm company’s compliance requirements.

Consumer Products Company – When a support person from a consumer products company, like high-end mountain bicycles, receives an urgent ticket, information from CRM, sales and marketing systems must be merged with structured and unstructured information from support’s ticketing system to provide a customer 360 view. Such a view would include not only basic customer profile information, but the customer’s purchase and support history along with product information that can include design specs, maintenance procedures and even the original back-of-the-napkin sketch for the product from the archive. Such information not only drives positive customer experiences, but also forms the basis of product improvement, new product development and potential upsells. Although support calls deal with problems, they are customer engagement points that can be turned into opportunities. Opportunities range from customer loyalty through excellent service (“Wow! That company fixes my problems fast!”), to revenue gains from immediate upsells if the situation presents itself appropriately. Lack of a 360 customer view not only decreases the probability of successful outcomes during support calls, but increases the probability of negative outcomes ranging from an unhappy customer, to a lost customer, to negative impact on the brand.

Media Company – When a marketing professional for a media company analyzes a film’s or video’s performance in the marketplace, detailed knowledge of the content must be combined with market research that includes static buyer profiles and dynamic buyer behavior such as Facebook posts or tweets about the content.

Such advanced techniques are needed because it would be difficult to distinguish the motivations behind a Facebook “like” from an 18-year-old male who lives with his parents in the suburbs for a specific film, from the “like” coming from a 28-year-old female who lives by herself in the big city. Without this insight, recommendation engines fail and new content (feature film and TV show) launches are less successful.

A Solution for Integrating Data

Meeting the requirements for each of these use cases requires an enterprise to become data-driven. A data-driven enterprise treats data as an asset, which drives tactical decision-making through operational reporting dashboards and strategic decision through high-quality analytics, which more and more rely on machine learning.

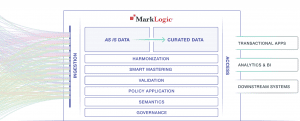

The Operational Data Hub (ODH) has emerged as an architectural pattern to help enterprises become data-driven. With reference to the diagram below, the key characteristics of an ODH are:

- Ingest – the ability to ingest data from a variety of upstream sources as is, i.e., without requiring months of relational database schema development

- Access – immediate access to ingested, indexed data, through search, semantic or SQL queries

- Agile mastering and governance – the ability to master semantically similar data from multiple sources (rapidly and incrementally) into canonical representations for downstream applications and data services, while maintaining data lineage and provenance

- Semantic modeling – the ability to drive data harmonization and semantic searches through relationships established with taxonomies and ontologies

- Semantic enrichment –- the ability to enrich ingested data through entity extraction, geo-encoding and natural language processing (NLP), giving data more meaning and connectivity to other business entities

- Curation – the ability to curate data for downstream analytics, including reporting, AI/machine learning, transactional applications and business processes

- Security – the ability to provide secure, redacted information to authorized users and applications

- Scalability – the ability to adjust pay-as-you-go, cloud-based platform resources on the fly

Given these features, how might the challenges encountered in the use cases above be addressed with an ODH? Let’s revisit a couple of them.

Pharmaceutical CompanyRevisited – Enabling joins between semi-structured lane data documents (where entity identifiers are non-specific) and relational systems with specific identifiers, requires a semantic analysis of the lane documents to extract pertinent information. This information, along with semantic reference data, is used in a fuzzy matching scoring system to automatically match SAP and carrier identifiers (from the relational data models) to their corresponding lane form identifiers (from the document data models). This is an integral part of an agile master data management (MDM) approach that delivers rapid, incremental value to business units without having to wait for enterprise MDM initiatives to be deployed.

Now, queries such as “did the package reach its destination at the right time, with the right quality and with any temperature excursions?” (all critical to pharma companies) can be answered immediately, saving valuable time, increasing product safety, reducing risk of non-compliance and reducing costs.

Media Company Revisited – It’s not a stretch to say all media companies have descriptive metadata tagging initiatives in progress in order to “know their company’s products.” These initiatives use humans or image recognition systems to identify and tag not just talent (actors and actresses) and characters, but also locations, logos, plots and storylines for each time coded slice of their entire collection of film or video. For some notable media companies, this can amount to close to a century of content. Likewise, as much data as possible is being collected by these media companies about their consumers, hopefully with the good intentions of serving them better. This type of “know your product” combined with “know your customer” analytics drives recommendation engines and new product (content) development.

Using semantic triples to capture descriptive metadata, consumer profiles (relatively static) and consumer behavior (relatively dynamic), data analysts can better determine if someone “likes” a specific actor or actress, while someone else “likes” the storyline or theme. A “like” for the same film, from different individuals, requires semantically oriented analytics to hit the mark when making “you might also like” recommendations to the 18-year-old male and 28-year-old female. This custom targeting fulfills direct to consumer, long-tail, customized engagements and successful new product (content) development that all media and entertainment companies are trying to achieve.

Tying It All Together

Initiatives like “sales to finance,” “art to part” and “concept to consumer” are found across every industry. The global nature and complexity of these product (or service) lifecycles cause many of them to stumble, if not fail outright. While a variety of operational factors come into play, the primary challenge that confronts every enterprise is leveraging data as a strategic asset.

That challenge is best met when data synchronization, silo integration, agile mastering, security and governance is handled at the data layer, not programmed into the application layer or into external systems. Application and external data management raise the chances of creating yet other silos, adding to architectural brittleness, complexity and inflexibility. Enterprises lose their agility and run the risk of failure in today’s dynamic business, technical and regulatory environments.

The Operational Data Hub has emerged as a proven pattern to help companies leverage their data as strategic assets and become agile in environments where change is constant.

To learn more about the ODH and how MarkLogic has used it to help customers with data-integration challenges, check out our Data Hub Service—a fully automated cloud service to integrate data from silos—making it fast and easy to deploy a data hub.

Michael Malgeri

Michael Malgeri is a Principal Technologist with MarkLogic. He works with companies to match their business requirements with MarkLogic’s enterprise NoSQL database and semantic features. He helps organizations reduce costs, automate processes, find new opportunities and create applications that bring high value to businesses and their customers. Michael focuses on the media and entertainment industry, where content providers, distributors and related companies are seeking to leverage the power of data in order to capture new opportunities driven by expanding global information consumption.

Michael holds Master’s Degrees in Computer Science, Business and Mechanical Engineering. He's been a Certified Project Management Professional since 2011.