Connect to On-Premises Databases from Google Cloud Composer

Introduction

Google Cloud Composer is a fully managed workflow orchestration service built on Apache Airflow and operated using Python. It enables you to author, schedule and monitor workflows as directed acyclic graphs (DAGs) of tasks.

In this tutorial, we walk through how to connect from Google Cloud Composer to an on-premises Hadoop Hive data source behind a private firewall, using Progress DataDirect Hybrid Data Pipeline.

Setting Up Progress DataDirect Hybrid Data Pipeline

Follow these easy instructions to get started.

- Install Hybrid Data Pipeline in your DMZ or in the cloud by following the below tutorials for:

- To connect to On-Premises databases, you need to install an On-Premises agent on one of your servers behind the firewall that lets the Hybrid Data Pipeline Server communicate with the database.

- To install the Hybrid Data Pipeline’s On-Premise Agent and configure it with the cloud service where you installed Hybrid Data Pipeline Server, please follow the below tutorials. If your Hybrid Data Pipeline Server is in:

- Download and install the Hybrid Data Pipeline JDBC connector.

Once you have everything set up, navigate to http://<server-address>:8080/d2c-ui or https://<server-address>:8443/d2c-ui to view the Hybrid Data Pipeline UI.

- Log in as the user d2cadmin with the password you set while installing the Hybrid Data Pipeline Server.

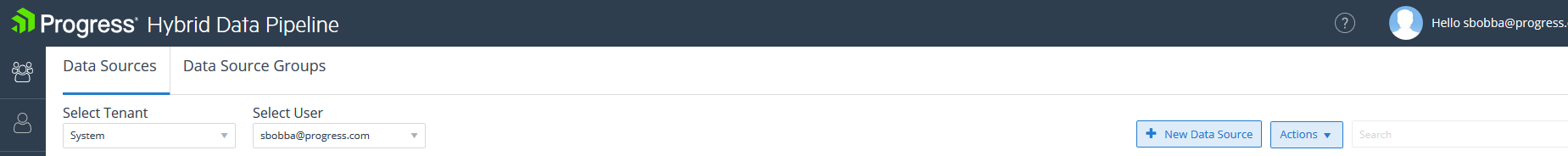

- Once you have logged in, create a new data source by clicking the New Data Source button as shown below.

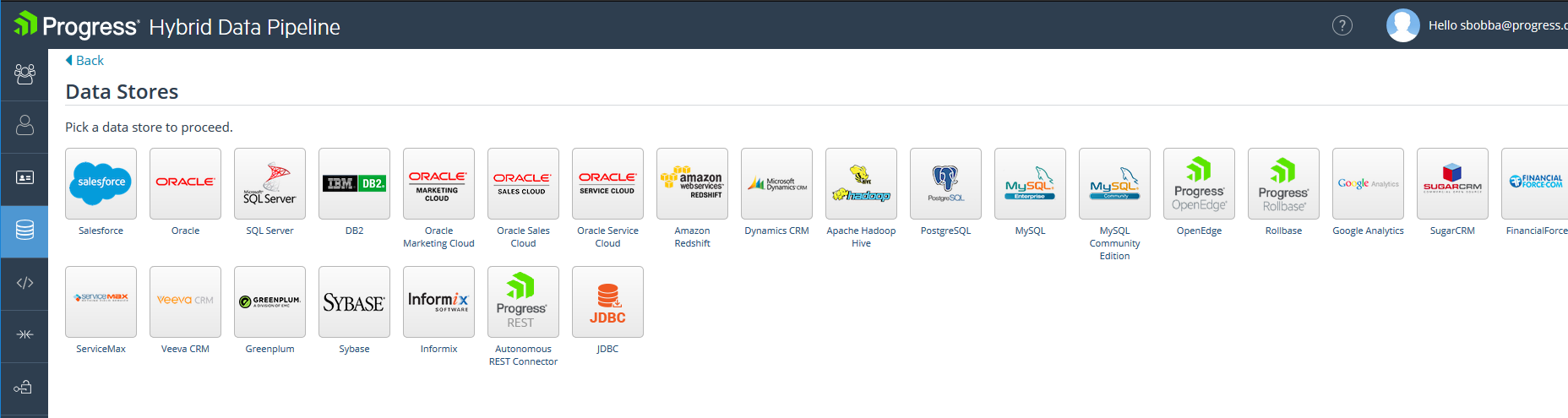

- You should now see a list of all data stores as shown below. Choose Hadoop as your data store.

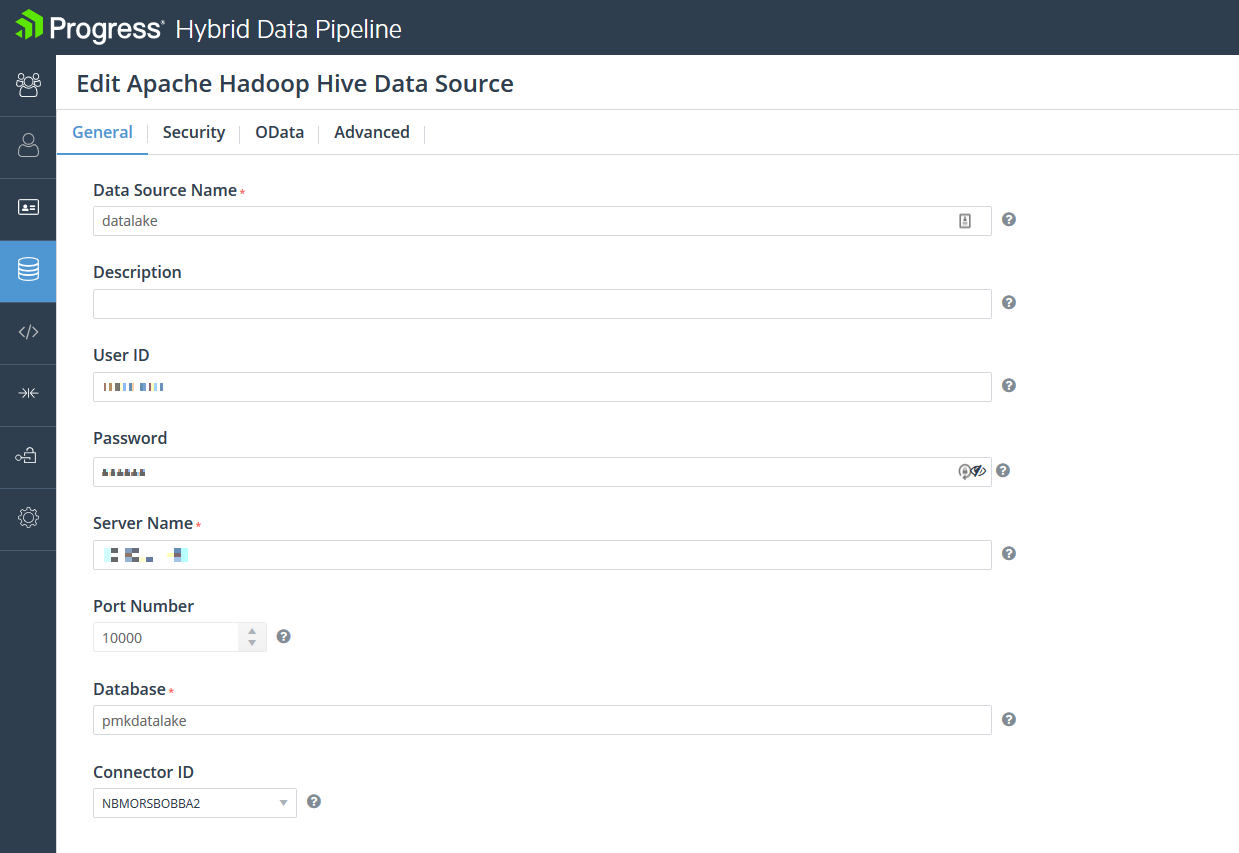

- In the configuration, fill out the connection parameters that you would normally use to connect to your Hadoop Hive data source and set the Connector ID. The Connector ID is the ID of the on-premises connector that you installed for this server. If you have installed and configured the on-premises connector, its Connector ID should appear automatically in the drop-down.

- Click Test Connection to confirm that you can connect to your on-premises Hadoop Hive data source. Then click the UPDATE button to save the configuration.

Configure Google Cloud Composer

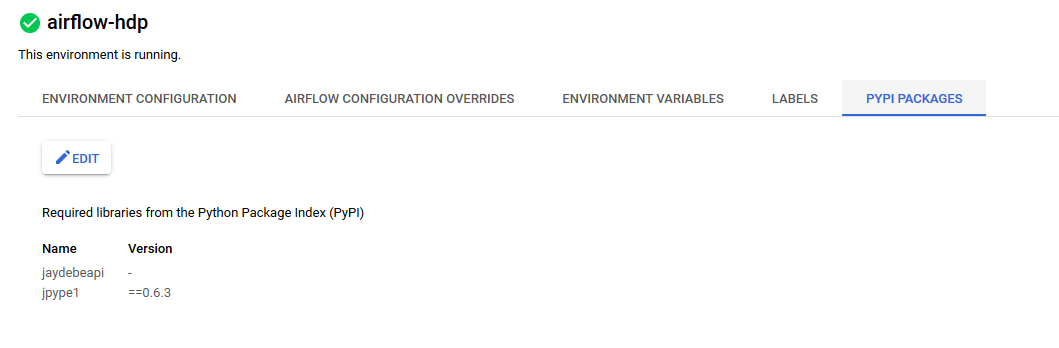

- After you have created your Google Cloud Composer environment, go to the PyPI packages tab and add the packages below to your nodes.

- Jaydebeapi

- Jpype1==0.6.3

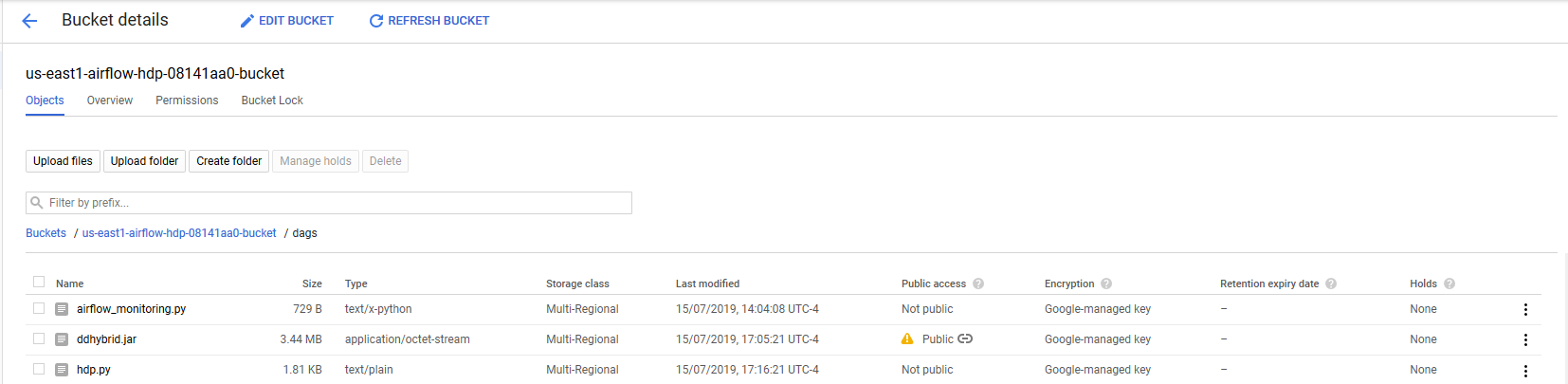
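Once the environment has finished updating, it can be useful to confirm that both packages are importable on the workers. The following is a minimal sketch, not part of the original tutorial: the helper name `check_modules` is hypothetical, and it relies on the import names `jaydebeapi` and `jpype` that these two PyPI packages provide. It could be run from a throwaway PythonOperator task or any Python shell in the environment.

```python
import importlib.util

# Import names for the PyPI packages listed above:
# JayDeBeApi imports as "jaydebeapi" and JPype1 imports as "jpype".
REQUIRED_MODULES = ("jaydebeapi", "jpype")


def check_modules(names=REQUIRED_MODULES):
    """Return a dict mapping each module name to True if it can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name}: {'installed' if found else 'MISSING'}")
```

If either module is reported as missing, recheck the PyPI packages tab before moving on to the connection setup.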

- Next, go to the Environment configuration and from there open your DAGS folder in Google Cloud Storage.

- Upload the DataDirect Hybrid Data Pipeline JDBC connector to this folder.

- Note that all the items copied to DAGS folder will be available at the path /home/airflow/gcs/dags/ on the cluster.

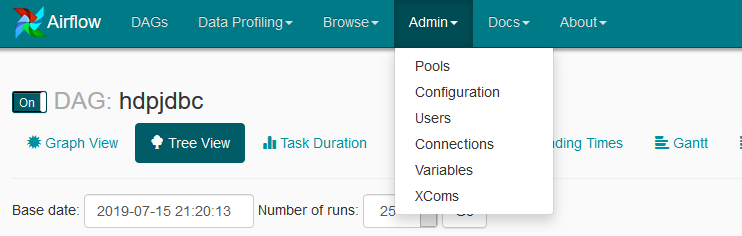

- Go to the Airflow Web UI and, under the Admin menu, create a new connection.

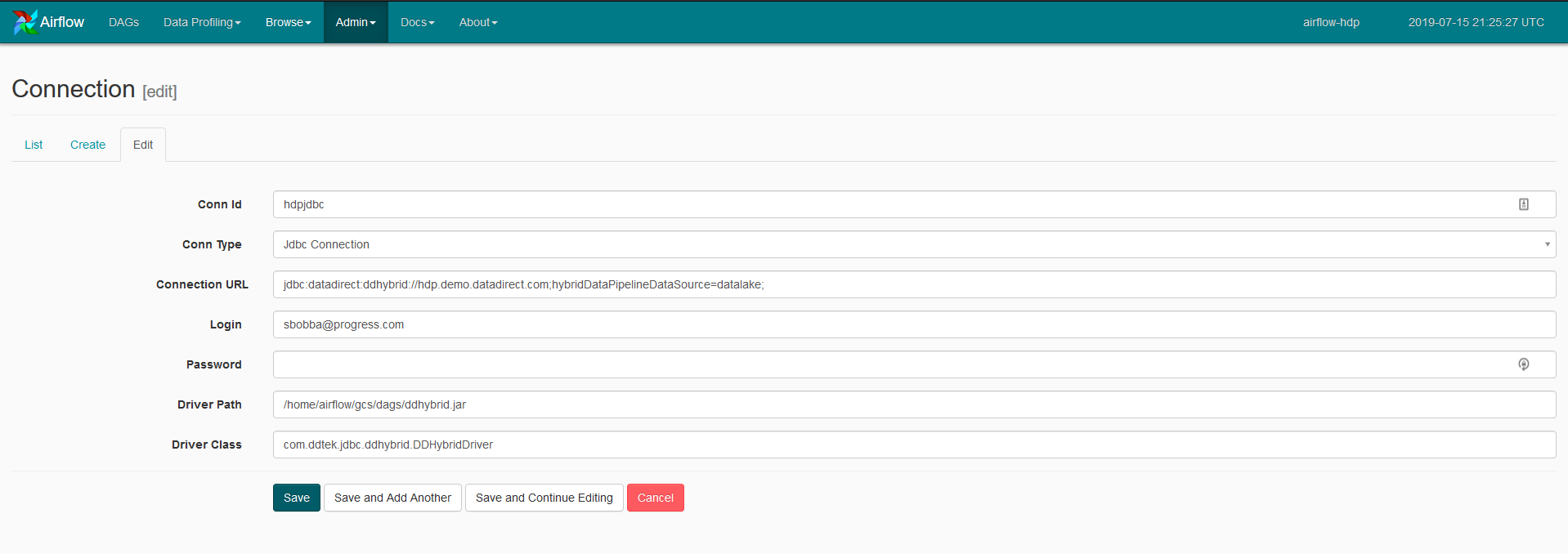

- Provide the following details in your configuration:

- Conn Id: hdpjdbc

- Conn Type: Jdbc Connection

- Connection URL: jdbc:datadirect:ddhybrid://hdp.demo.datadirect.com;hybridDataPipelineDatasource=datalake

- Login: <Your HDP username>

- Password: <Your HDP Password>

- Driver Path: /home/airflow/gcs/dags/ddhybrid.jar

- Driver Class: com.ddtek.jdbc.ddhybrid.DDHybridDriver
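If the Airflow connection fails, it may help to try the same values outside Airflow. The sketch below assumes the JayDeBeApi package added earlier and reuses the driver path, driver class, and URL format from the connection details above. The helper names `build_hdp_jdbc_url` and `try_connection` are hypothetical, and the `customer` table is simply the one queried later in this tutorial.

```python
DRIVER_CLASS = "com.ddtek.jdbc.ddhybrid.DDHybridDriver"
DRIVER_PATH = "/home/airflow/gcs/dags/ddhybrid.jar"


def build_hdp_jdbc_url(host, datasource):
    """Build a Hybrid Data Pipeline JDBC URL in the format used above."""
    return (
        f"jdbc:datadirect:ddhybrid://{host};"
        f"hybridDataPipelineDatasource={datasource}"
    )


def try_connection(url, user, password):
    """Open a JDBC connection with JayDeBeApi and fetch a single row."""
    # Imported lazily so the URL helper can be used without the package.
    import jaydebeapi

    conn = jaydebeapi.connect(DRIVER_CLASS, url, [user, password], DRIVER_PATH)
    try:
        cursor = conn.cursor()
        cursor.execute("SELECT * FROM customer")
        return cursor.fetchone()
    finally:
        conn.close()


if __name__ == "__main__":
    print(build_hdp_jdbc_url("hdp.demo.datadirect.com", "datalake"))
```

Calling `try_connection` with your own Hybrid Data Pipeline username and password exercises the same driver and URL that the Airflow connection will use, which separates driver or network problems from Airflow configuration problems.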

Creating a DAG

- For this tutorial, we will read data from the on-premises Hadoop instance and display the records in the DAG logs.

- Below is the DAG Python program. Save it to a Python file, for example hdp.py. Alternatively, you can access the code on GitHub.

    from datetime import timedelta
    import csv

    import airflow
    from airflow import DAG
    from airflow.operators.python_operator import PythonOperator
    from airflow.hooks.jdbc_hook import JdbcHook

    # Create the JDBC hook using the Conn Id configured in the Airflow UI
    JdbcConn = JdbcHook(jdbc_conn_id='hdpjdbc')


    def getconnection():
        # Look up the stored connection to confirm that it is defined
        JdbcConn.get_connection('hdpjdbc')
        print("connected")


    def writerecords():
        # Fetch all rows from the customer table and write them to a CSV file
        records = JdbcConn.get_records(sql="SELECT * FROM customer")
        with open('records.csv', 'w') as csvFile:
            writer = csv.writer(csvFile)
            writer.writerows(records)


    def displayrecords():
        # Read the CSV file back and print each row to the task log
        with open('records.csv', 'rt') as f:
            data = csv.reader(f)
            for row in data:
                print(row)


    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'start_date': airflow.utils.dates.days_ago(2),
        'email': ['airflow@example.com'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
    }

    dag = DAG('datadirect_sample', default_args=default_args,
              schedule_interval="@daily")

    t1 = PythonOperator(
        task_id='getconnection',
        python_callable=getconnection,
        dag=dag,
    )

    t2 = PythonOperator(
        task_id='WriteRecords',
        python_callable=writerecords,
        dag=dag,
    )

    t3 = PythonOperator(
        task_id='DisplayRecords',
        python_callable=displayrecords,
        dag=dag,
    )

    # Run the tasks in order: connect, write the CSV, then display it
    t1 >> t2 >> t3

- Upload the Python program to the DAGS folder in your Google Cloud Storage bucket.
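The CSV round trip performed by the WriteRecords and DisplayRecords tasks can also be tried locally, without Airflow or a database. The sketch below is an illustration only: `FakeHook` is a hypothetical stand-in for the JDBC hook that returns two made-up rows, and the file is written to a temporary directory rather than to `records.csv`.

```python
import csv
import os
import tempfile


class FakeHook:
    """Hypothetical stand-in for the JDBC hook; returns fixed sample rows."""

    def get_records(self, sql):
        return [(1, "Alice"), (2, "Bob")]


def write_records(hook, path):
    """Fetch rows through the hook and write them to a CSV file."""
    records = hook.get_records(sql="SELECT * FROM customer")
    with open(path, "w", newline="") as csv_file:
        csv.writer(csv_file).writerows(records)


def read_records(path):
    """Read the CSV file back and return its rows as lists of strings."""
    with open(path, "rt", newline="") as f:
        return list(csv.reader(f))


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "records.csv")
    write_records(FakeHook(), path)
    for row in read_records(path):
        print(row)
```

Note that the `csv` module reads every value back as a string, so numeric columns from the database appear as text in the DisplayRecords log.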

Running the DAG

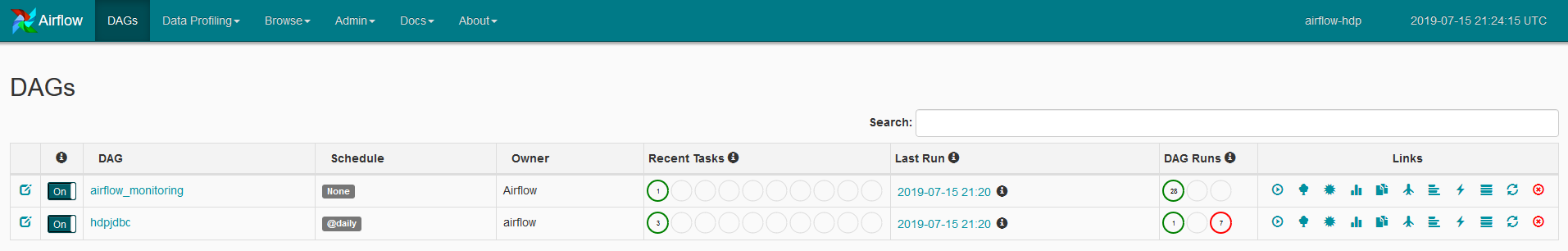

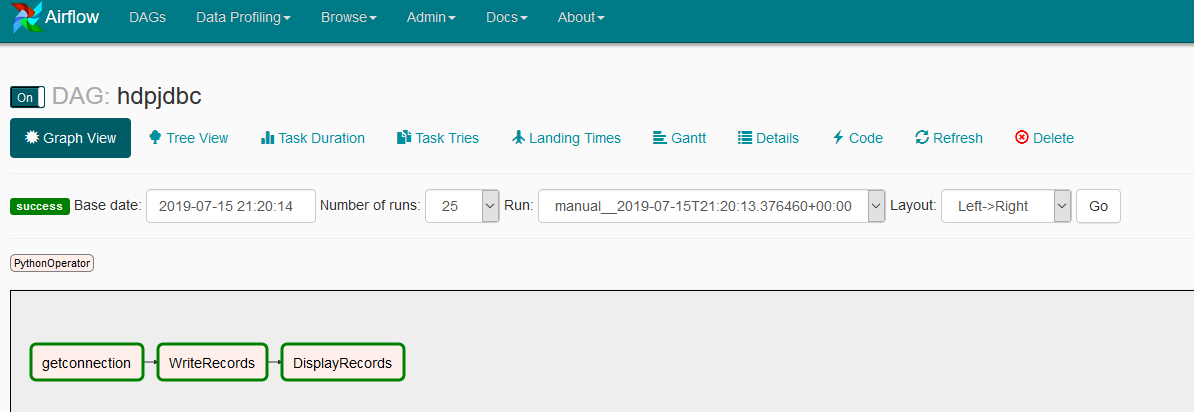

- On the Airflow Web UI, you should see the DAG as shown below.

- Click on the trigger button under links to manually trigger it. Once the DAG has started, go to the graph view to see the status of each individual task. All the tasks should be green to confirm proper execution.

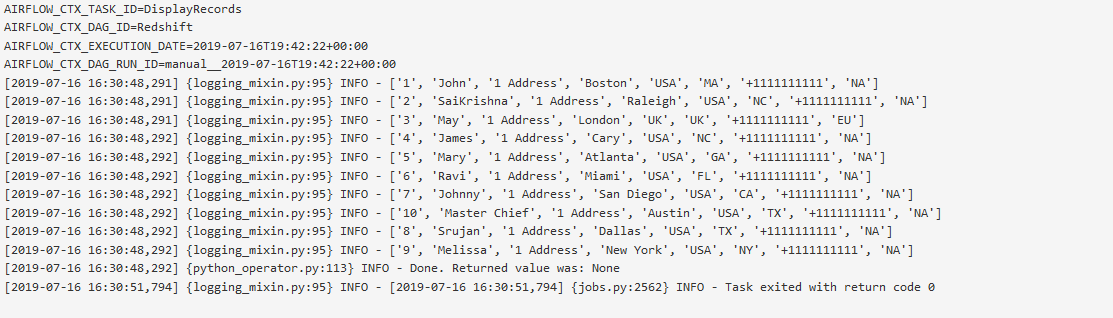

- After the run completes, click the DisplayRecords task and open its logs. You should see all of your data printed there as shown below.

We hope this tutorial helped you get started with accessing on-premises Hadoop data from Google Cloud Composer. Please contact us if you need any help or have any questions.