Although most ODBC and JDBC connectors talk to databases and most of them issue SQL queries in the database’s native dialect, things don’t have to work that way. If the back end’s native protocol is something other than SQL, a connector can still translate the SQL provided to it into code in the language or command syntax native to the target system.

In the case of some NoSQL databases, this brings a lot of value, because some of them don’t offer full SQL implementations, and others offer none at all. Some use JavaScript natively, and others have their own language. Put a standard database connector in front of these databases, though, and suddenly enterprise developers can use those databases with their existing skill sets.
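To make the translation step concrete, here is a minimal sketch of how a connector might turn the conditions of a SQL WHERE clause into a filter document in the style used by MongoDB. The function name `where_to_filter` and the tuple-based input format are assumptions made for illustration; a real connector would start from a full SQL parser rather than pre-split conditions.

```python
# Map SQL comparison operators to MongoDB-style query operators.
OPERATORS = {
    "=": "$eq",
    "!=": "$ne",
    ">": "$gt",
    ">=": "$gte",
    "<": "$lt",
    "<=": "$lte",
}


def where_to_filter(conditions):
    """Build a filter document from (column, operator, value) tuples.

    The conditions are treated as implicitly ANDed together, which is
    how a flat filter document is interpreted.
    """
    query = {}
    for column, op, value in conditions:
        if op not in OPERATORS:
            raise ValueError(f"unsupported operator: {op!r}")
        # Several conditions on one column share a single sub-document.
        query.setdefault(column, {})[OPERATORS[op]] = value
    return query


# WHERE age > 30 AND city = 'Oslo'
native_filter = where_to_filter([("age", ">", 30), ("city", "=", "Oslo")])
```

The developer writes only the SQL; the filter document is something the connector produces and the developer never has to see.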

Essentially, such connectors place tabular database abstraction layers on top of schema-less NoSQL databases. And that technique can be extended beyond NoSQL databases, and indeed beyond databases of any stripe. In fact, database abstraction layers can also be created for applications, a concept that forms the foundation of a holistic approach to application data integration.

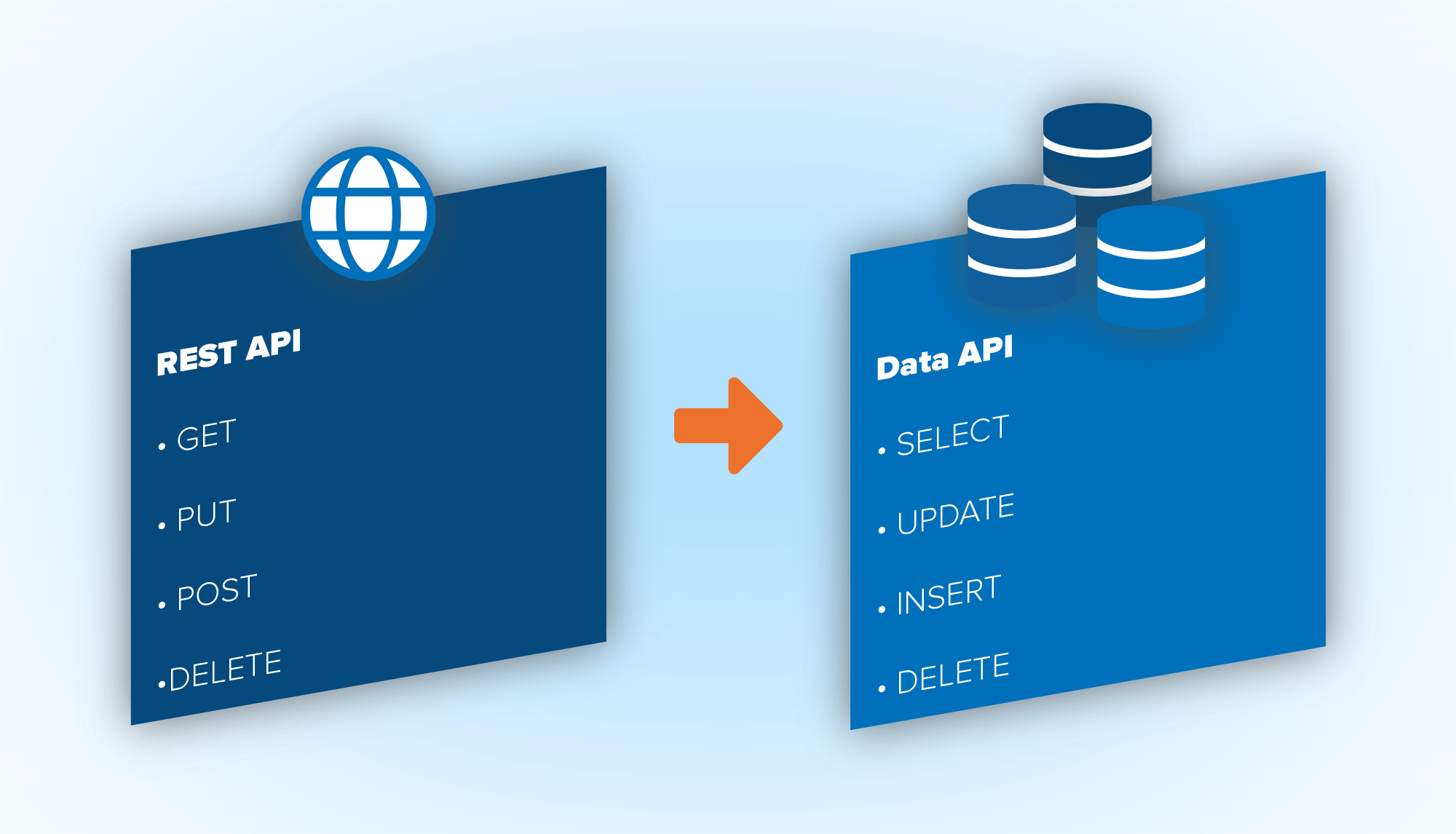

Figure 2. A database abstraction layer for a REST API

Case in Point: Abstracting the REST API using the Database Metaphor

That concept, though, may feel very abstract, so let’s take an example to make it more concrete: a customer relationship management (CRM) application. Virtually all CRM apps have their own APIs, and we can imagine that a given SaaS-based CRM product would have a REST API. The same reasoning would apply to any REST API, including one built by your in-house developers.

That API might include commands for seeing lists of customers, or lists of opportunities, and those commands might have parameters whose values could be set to filter the list that came back. For example, we might be able to use the API to bring back a list of all customers assigned to a given salesperson, or all opportunities created during the current calendar week.

It’s great that the API is there, and we could use it to bring customer information into our analysis, and join it with customer data from other sources, in order to get an integrated view of those customers. But an even better approach would be to expose the API through the database metaphor instead, by way of an ODBC or JDBC connector.

Customers and opportunities could then be modeled as tables, with each of their attributes modeled as columns in the tables. Instead of using an API command to retrieve lists of those customers, we could just run a SQL SELECT query, using a WHERE clause to filter the Salesperson “column” of the Customers “table” or the CreatedDate “column” of the Opportunities table.
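As a rough illustration of that mapping, the sketch below rewrites a very small subset of SELECT statements as REST request URLs. Everything specific here is an assumption: the base URL `https://crm.example.com/api/v1`, the `fields` query parameter, and the convention that a table name maps to a lowercase resource path are all hypothetical, and the regular expression handles only a single equality filter.

```python
import re
from urllib.parse import urlencode

BASE_URL = "https://crm.example.com/api/v1"  # hypothetical CRM endpoint

# Recognizes: SELECT <cols> FROM <table> [WHERE <col> = '<value>']
_SELECT = re.compile(
    r"^\s*SELECT\s+(?P<cols>.+?)\s+FROM\s+(?P<table>\w+)"
    r"(?:\s+WHERE\s+(?P<col>\w+)\s*=\s*'(?P<val>[^']*)')?\s*;?\s*$",
    re.IGNORECASE,
)


def sql_to_rest(sql: str) -> str:
    """Translate a simple SELECT statement into a GET request URL."""
    match = _SELECT.match(sql)
    if match is None:
        raise ValueError(f"unsupported query: {sql!r}")

    params = {}
    cols = match.group("cols").strip()
    if cols != "*":
        # Assumed convention: requested columns go in a 'fields' parameter.
        params["fields"] = ",".join(c.strip() for c in cols.split(","))
    if match.group("col"):
        # The WHERE column becomes a filter parameter on the API call.
        params[match.group("col")] = match.group("val")

    url = f"{BASE_URL}/{match.group('table').lower()}"
    return f"{url}?{urlencode(params)}" if params else url


url = sql_to_rest("SELECT Name, City FROM Customers WHERE Salesperson = 'Ana'")
```

A production connector would use a real SQL parser and the API's documented parameters, but the shape of the translation is the same: table to resource, column list to field selection, WHERE clause to filter parameters.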

The connector would then accept such SQL requests, translate them into calls to the CRM system’s native API, retrieve the results as a set of objects, and return their data in the form of result sets.

Querying with a WHERE clause on CustomerId or OpportunityId would bring back data for a specific customer or opportunity, rather than a whole set of them. INSERT statements could be used to create new customers or opportunities; UPDATE and DELETE statements could be used to maintain existing ones.
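One plausible way to picture this is as a lookup from SQL verbs to HTTP methods. The sketch below assumes a conventional REST layout in which each table is a resource collection and a row is addressed by appending its ID to the path; the choice of PATCH for UPDATE and the helper name `plan_request` are illustrative assumptions, not a description of any particular CRM API.

```python
# Assumed correspondence between SQL statements and HTTP methods.
VERB_TO_METHOD = {
    "SELECT": "GET",
    "INSERT": "POST",
    "UPDATE": "PATCH",
    "DELETE": "DELETE",
}


def plan_request(verb, table, row_id=None):
    """Return the (HTTP method, path) pair for a SQL statement."""
    method = VERB_TO_METHOD[verb.upper()]
    if method in ("PATCH", "DELETE") and row_id is None:
        # This sketch only supports changing one identified row at a time.
        raise ValueError(f"{verb.upper()} requires a row ID")

    path = f"/{table.lower()}"
    if row_id is not None:
        path += f"/{row_id}"
    return method, path


# SELECT * FROM Customers WHERE CustomerId = 42
single_customer = plan_request("SELECT", "Customers", 42)
# INSERT INTO Opportunities (...) VALUES (...)
new_opportunity = plan_request("INSERT", "Opportunities")
```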

Ramifications and Replications

Using this approach would mean developers wouldn’t need to learn the CRM system’s native API, because the connector would act as an interpreter, converting queries against its SQL abstraction layer into native API calls on the developer’s behalf.

Analysts/BI users have it even better, because there’s little for them to do, beyond connecting to the CRM system via the driver. After all, all popular BI tools, such as Tableau, Power BI, Qlik, Cognos and MicroStrategy, support ODBC and/or JDBC connectors.

Once connected, a list of tables would appear, allowing users to select them, and to start aggregating numerical information in those tables (like deal size) over various categories (like salesperson or fiscal quarter) and visualizing the results.
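Behind the scenes, that kind of aggregation comes down to an ordinary GROUP BY query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database purely as a stand-in for a connection opened through a CRM connector; the `Opportunities` table, its columns, and the sample rows are made up for illustration.

```python
import sqlite3

# Stand-in for a connection obtained through an ODBC or JDBC connector.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Opportunities ("
    "OpportunityId INTEGER, Salesperson TEXT, DealSize REAL)"
)
conn.executemany(
    "INSERT INTO Opportunities VALUES (?, ?, ?)",
    [(1, "Ana", 1000.0), (2, "Ben", 2500.0), (3, "Ana", 4000.0)],
)

# Total deal size per salesperson, as a BI tool might request it.
totals = conn.execute(
    "SELECT Salesperson, SUM(DealSize) "
    "FROM Opportunities "
    "GROUP BY Salesperson "
    "ORDER BY Salesperson"
).fetchall()
conn.close()
```

With a real connector in place, only the connection line would change; the query itself stays the same regardless of what sits behind the table.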

An interesting feature of data connectors is that many of them will inevitably provide database abstraction layers over applications which, in turn, provide application abstractions over physical databases. And while that fact may create a temptation to imagine the outer tabular abstraction layer is actually a direct passthrough to the inner physical database, that is neither a common nor recommended implementation.

While the physical database and the abstracted data connector interface each feature tables, rows, and columns, and share the SQL language for programmatic control, they should not be conflated. The data connector approach provides an abstraction layer, by design, whereas the database is a physical repository. The connector interface will likely feature a simple schema, optimized for consumption and intuitive adoption; the physical database will be based on a more complex design, optimized for performance and efficiency.

That the two share primitives, required skill sets, and compatible tools, yet differ greatly in design and mission, only underscores the versatility and universal applicability of the tabular data metaphor.

Economies of Scale

With such a compelling model in place, adding other applications (marketing campaign management, for example) using the same approach spares developers the disruption of learning additional APIs. Instead, the new application is treated like just another database, with its own catalog of tables and columns. The same techniques can be used to connect to, analyze, and visualize its data.

For integration, “tables” from each of the applications could be virtually “joined” in order to integrate comprehensive information about specific individual entities. This could be done in code, in an analysis tool, or both.
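Done in code, such a join can be as simple as matching rows on a shared key. The sketch below assumes that result sets from two connectors have already been fetched as lists of dictionaries; the `Email` key, the column names, and the sample rows are hypothetical.

```python
def join_on(left, right, key):
    """Inner-join two lists of row dictionaries on a shared key column."""
    # Index the right-hand rows by key so each left row is matched quickly.
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    # Emit one merged row per matching pair; unmatched rows are dropped.
    return [
        {**l_row, **r_row}
        for l_row in left
        for r_row in index.get(l_row[key], [])
    ]


# Rows as they might come back from a CRM connector...
customers = [
    {"Email": "ana@example.com", "Name": "Ana Ruiz"},
    {"Email": "ben@example.com", "Name": "Ben Ito"},
]
# ...and from a marketing campaign connector.
responses = [
    {"Email": "ana@example.com", "Campaign": "Spring"},
    {"Email": "ana@example.com", "Campaign": "Fall"},
]

integrated = join_on(customers, responses, "Email")
```

An analysis tool would typically perform the same operation through its own data-blending or relationship features, with no code required.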